Mohamed Elawady

Automatic Classification of Bright Retinal Lesions via Deep Network Features

Jul 28, 2017

Abstract: Diabetic retinopathy is diagnosed in a timely manner from color eye fundus images by experienced ophthalmologists, who look for potential retinal features and identify early-blindness cases. In this paper, we propose extracting deep features from the last fully-connected layer of four different pre-trained convolutional neural networks. These features are then fed into a non-linear classifier to discriminate three classes of diabetic cases, i.e., normal, exudates, and drusen. Averaged across 1113 color retinal images collected from six publicly available annotated datasets, the deep-feature approach performs better than the classical bag-of-words approach. The proposed approaches achieve an average accuracy between 91.23% and 92.00%, an improvement of more than 13% over traditional state-of-the-art methods.

Wavelet-based Reflection Symmetry Detection via Textural and Color Histograms

Jul 22, 2017

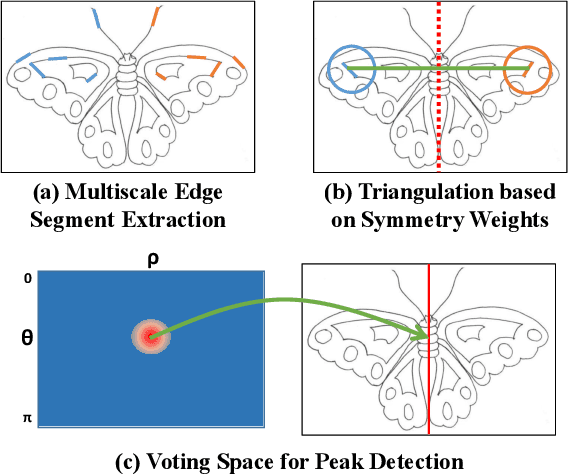

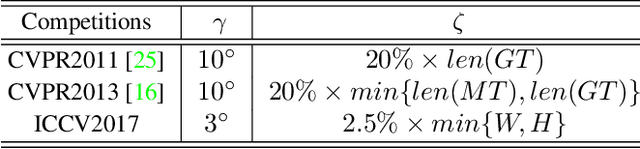

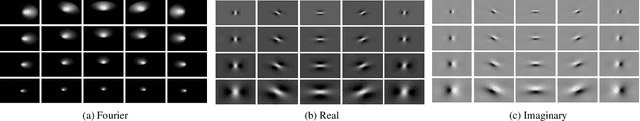

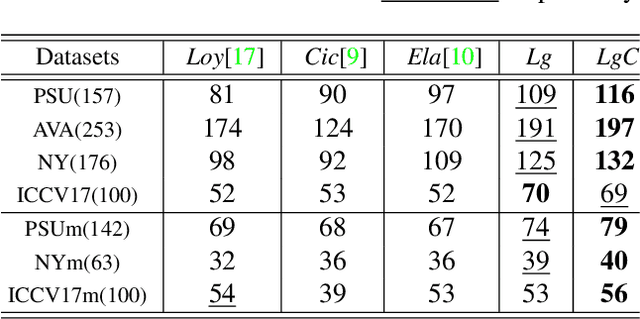

Abstract: Symmetry is one of the significant visual properties inside an image plane, used to identify geometrically balanced structures in real-world objects. Existing symmetry detection methods rely on descriptors of local image features and their neighborhood behavior, resulting in incomplete symmetry axis candidates for discovering mirror similarities on a global scale. In this paper, we propose a new reflection symmetry detection scheme based on reliable edge-based feature extraction using Log-Gabor filters, plus an efficient voting scheme parameterized by the corresponding textural and color neighborhood information. Experimental evaluation on four single-case and three multiple-case symmetry detection datasets validates the superior performance of the proposed work in finding global symmetries inside an image.
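As a rough illustration of the Log-Gabor filters mentioned above, the sketch below evaluates the standard radial Log-Gabor transfer function in the frequency domain. The abstract does not give filter parameters, so the center frequency `f0` and bandwidth ratio `sigma_ratio` are assumed values, and the function name is hypothetical.

```python
import math

def log_gabor_radial(f, f0=0.1, sigma_ratio=0.55):
    """Radial Log-Gabor response at frequency f (cycles per pixel).

    f0 and sigma_ratio are illustrative assumptions, not values from the paper.
    """
    if f <= 0:
        # A Log-Gabor filter has no DC component by construction.
        return 0.0
    # Gaussian on a logarithmic frequency axis, centered at f0.
    return math.exp(-(math.log(f / f0) ** 2) / (2 * math.log(sigma_ratio) ** 2))

# The response peaks at f0 and falls off symmetrically in log-frequency.
for f in (0.025, 0.05, 0.1, 0.2, 0.4):
    print(f, round(log_gabor_radial(f), 4))
```

A full filter bank would multiply this radial term by an angular term for each orientation; that part is omitted here.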

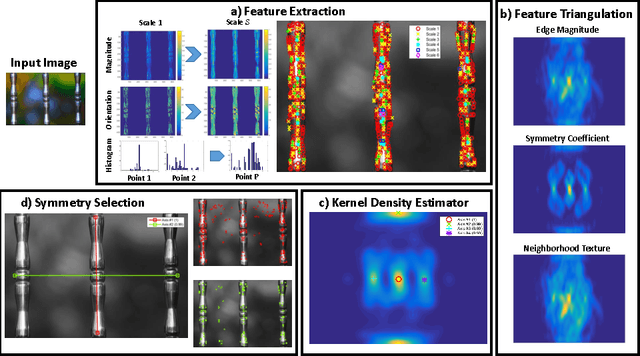

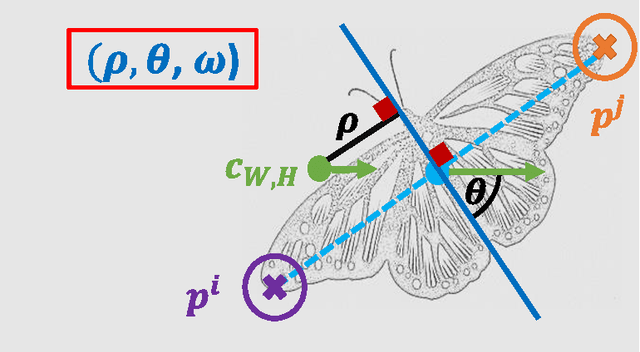

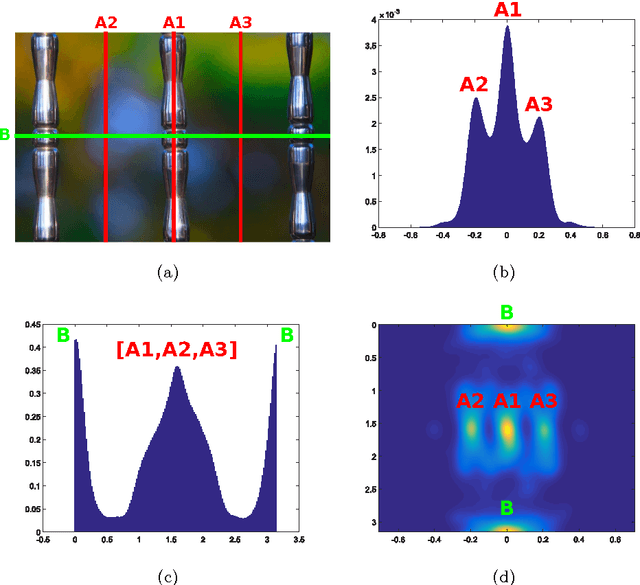

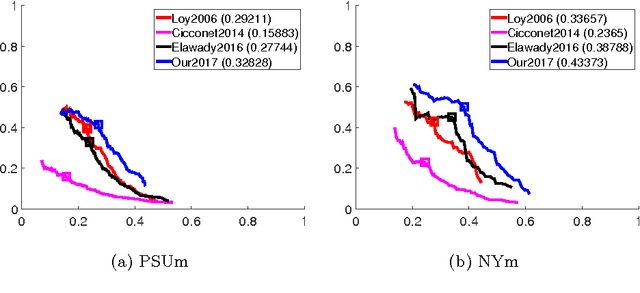

Multiple Reflection Symmetry Detection via Linear-Directional Kernel Density Estimation

Apr 21, 2017

Abstract: Symmetry is an important composition feature, found by investigating similar sides inside an image plane. It plays a crucial role in recognizing man-made and natural objects. Recent symmetry detection approaches apply a smoothing kernel over different voting maps in the polar coordinate system to detect symmetry peaks, which splits the regions of symmetry axis candidates inefficiently. We propose a reliable voting representation based on weighted linear-directional kernel density estimation to detect multiple symmetries in challenging real-world and synthetic images. Experimental evaluation on two public datasets demonstrates the superior performance of the proposed algorithm in detecting global symmetry axes with respect to the major image shapes.
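A weighted linear-directional kernel density estimate can be sketched as a product of a linear kernel over the axis displacement and a directional kernel over the axis angle. The abstract does not state which kernels or bandwidths are used, so the Gaussian and von Mises kernels, the parameters `h` and `kappa`, and all names below are assumptions for illustration only.

```python
import math

def bessel_i0(x, terms=30):
    """Modified Bessel function I0 via its power series (normalizes the von Mises kernel)."""
    total, term = 0.0, 1.0
    for k in range(terms):
        if k > 0:
            term *= (x / 2.0) ** 2 / (k * k)
        total += term
    return total

def linear_directional_kde(votes, rho, theta, h=5.0, kappa=8.0):
    """Weighted density at (rho, theta) from votes given as (rho_i, theta_i, weight_i).

    Angles are axis orientations in [0, pi), so the directional kernel uses
    2 * (theta - theta_i) to make it pi-periodic.
    """
    total_weight = sum(w for _, _, w in votes)
    if total_weight == 0:
        return 0.0
    gauss_norm = 1.0 / (h * math.sqrt(2.0 * math.pi))
    vm_norm = 1.0 / (math.pi * bessel_i0(kappa))
    density = 0.0
    for rho_i, theta_i, w in votes:
        linear = gauss_norm * math.exp(-0.5 * ((rho - rho_i) / h) ** 2)
        directional = vm_norm * math.exp(kappa * math.cos(2.0 * (theta - theta_i)))
        density += w * linear * directional
    return density / total_weight

# Hypothetical votes: two agreeing candidates and one weaker outlier.
votes = [(10.0, 0.50, 1.0), (11.0, 0.52, 2.0), (50.0, 2.00, 0.5)]
print(linear_directional_kde(votes, 10.5, 0.51))
print(linear_directional_kde(votes, 50.0, 2.00))
```

Peaks of this density over a grid of `(rho, theta)` values would then serve as symmetry axis candidates.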

Automated Breast Lesion Segmentation in Ultrasound Images

Sep 27, 2016

Abstract: The main objective of this project is to segment breast ultrasound images to locate the lesion area while discarding low-contrast regions and the inherent speckle noise. The proposed method consists of three stages (noise removal, segmentation, classification) in order to extract the correct lesion. We use the normalized cuts approach to segment ultrasound images into regions of interest that may contain the lesion, and then apply a K-means classifier to decide the final location of the lesion. For every original image, an annotated ground-truth image is provided for comparison with the experimental results, yielding accurate evaluation measures.
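The final K-means step can be illustrated with a minimal sketch that clusters per-region mean intensities into two groups. The abstract does not say which region features are used, so the mean-intensity feature, the sample values, and the rule that the darker cluster is the lesion candidate are all assumptions made for this example.

```python
def kmeans_1d(values, k=2, max_iters=100):
    """Plain 1-D K-means (k >= 2) with deterministic initialization."""
    ordered = sorted(values)
    # Spread the initial centroids evenly across the sorted values.
    centroids = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    labels = None
    for _ in range(max_iters):
        new_labels = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            if members:
                centroids[c] = sum(members) / len(members)
    return labels, centroids

# Hypothetical mean intensities of six segmented regions, scaled to [0, 1].
region_means = [0.82, 0.78, 0.15, 0.20, 0.75, 0.18]
labels, centroids = kmeans_1d(region_means, k=2)

# Assumption: the lesion appears darker than the surrounding tissue.
lesion_cluster = centroids.index(min(centroids))
lesion_regions = [i for i, lab in enumerate(labels) if lab == lesion_cluster]
print(lesion_regions)
```

In practice the regions would come from the normalized cuts segmentation, and richer features than a single mean intensity would likely be needed.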

Detecting and avoiding frontal obstacles from monocular camera for micro unmanned aerial vehicles

Apr 03, 2016

Abstract: In the literature, several approaches try to make UAVs fly autonomously, for example by extracting perspective cues such as straight lines. However, such cues are only available in well-defined man-made environments, and many other cues require sufficient texture information. Our main target is to detect and avoid frontal obstacles from a monocular camera on an AR.Drone 2 quadrotor by exploiting optical flow as motion parallax. The drone is permitted to fly at a speed of 1 m/s and at an altitude of 1 to 4 meters above ground level. In general, detecting and avoiding frontal obstacles is a challenging problem because optical flow has limitations that must be taken into account, e.g., lighting conditions and the aperture problem.
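Under motion parallax, nearer objects produce larger optical flow than distant ones. One common way to turn this into a steering decision is a flow-balance rule that compares the mean flow magnitude in the left and right image halves. The abstract does not describe the actual control law, so the sketch below, including the function name and the `ratio` threshold, is an assumption rather than the authors' method.

```python
import math

def steer_from_flow(flow, ratio=1.5):
    """Pick a steering command from a dense flow field.

    flow is a list of rows, each row a list of (u, v) flow vectors.
    ratio is an assumed imbalance threshold.
    """
    width = len(flow[0])
    half = width // 2
    left = [math.hypot(u, v) for row in flow for (u, v) in row[:half]]
    right = [math.hypot(u, v) for row in flow for (u, v) in row[width - half:]]
    mean_left = sum(left) / len(left)
    mean_right = sum(right) / len(right)
    # Larger flow on one side suggests a closer obstacle there: steer away from it.
    if mean_left > ratio * mean_right:
        return "right"
    if mean_right > ratio * mean_left:
        return "left"
    return "forward"

# Hypothetical 2x4 flow field with strong motion in the left half.
flow = [
    [(3.0, 0.5), (2.5, 0.0), (0.4, 0.1), (0.3, 0.0)],
    [(2.8, 0.2), (3.1, 0.1), (0.5, 0.0), (0.2, 0.1)],
]
print(steer_from_flow(flow))
```

The flow field itself would come from an optical flow estimator run on consecutive camera frames, which is outside the scope of this sketch.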

Sparse Coral Classification Using Deep Convolutional Neural Networks

Nov 29, 2015

Abstract: Autonomous repair of deep-sea coral reefs is a recently proposed idea to support the ocean ecosystem, which is vital for commercial fishing, tourism and other species. The idea can be realized by using many small autonomous underwater vehicles (AUVs) and swarm intelligence techniques to locate and replace chunks of coral that have been broken off, thus enabling re-growth and maintaining the habitat. The aim of this project is to develop machine vision algorithms that enable an underwater robot to locate a coral reef and a chunk of coral on the seabed and prompt the robot to pick it up. Although there is no literature on this particular problem, related work on fish counting may give some insight. The technical challenges are principally due to the potential lack of water clarity and platform stabilization, as well as spurious artifacts (rocks, fish, and crabs). We present an efficient sparse classification of coral species using a supervised deep learning method, Convolutional Neural Networks (CNNs). We compute the Weber Local Descriptor (WLD), Phase Congruency (PC), and Zero Component Analysis (ZCA) whitening to extract shape and texture feature descriptors, which are used as supplementary channels (feature-based maps) alongside the basic spatial color channels (spatial-based maps) of the coral input image. We also experiment with state-of-the-art underwater preprocessing algorithms for image enhancement, color normalization, and color conversion adjustment. Our proposed coral classification method is developed on the MATLAB platform and evaluated on two different coral datasets (University of California San Diego's Moorea Labeled Corals, and Heriot-Watt University's Atlantic Deep Sea).
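To give a sense of what a feature-based map looks like, the sketch below computes the differential excitation component of the Weber Local Descriptor on a grayscale image using a 3x3 neighborhood. It is written in Python for illustration (the work itself uses MATLAB), the function name is hypothetical, and border pixels are simply left at zero as a simplifying assumption.

```python
import math

def differential_excitation(img):
    """WLD differential excitation for each interior pixel of a 2-D grayscale image.

    img is a list of rows of non-negative intensities.
    """
    height, width = len(img), len(img[0])
    out = [[0.0] * width for _ in range(height)]
    offsets = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            center = img[y][x]
            # Sum of intensity differences between the 8 neighbors and the center.
            diff = sum(img[y + dy][x + dx] - center for dy, dx in offsets)
            # atan2(diff, center) equals arctan(diff / center) for center > 0
            # and stays defined when center == 0.
            out[y][x] = math.atan2(diff, center)
    return out

# A dark pixel surrounded by brighter neighbors gives a strong positive response.
patch = [
    [10, 10, 10],
    [10, 5, 10],
    [10, 10, 10],
]
excitation = differential_excitation(patch)
print(round(excitation[1][1], 4))
```

The resulting map could then be stacked with the color channels as an extra input channel for the network.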
