Mohamed Dahy Elkhouly

LIGHTS: LIGHT Specularity Dataset for specular detection in Multi-view

Jan 26, 2021

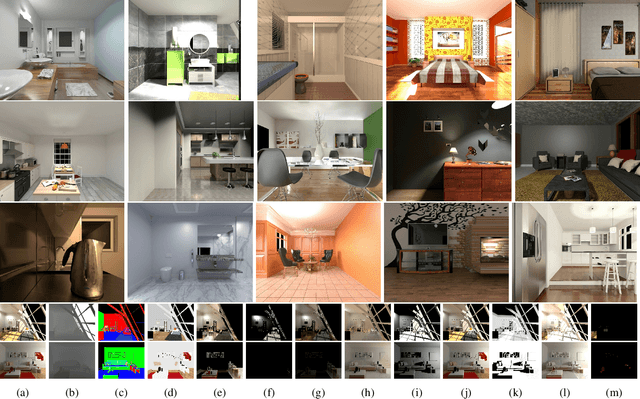

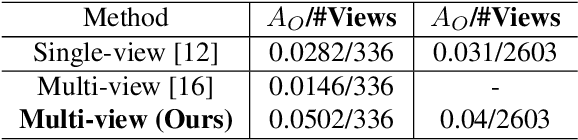

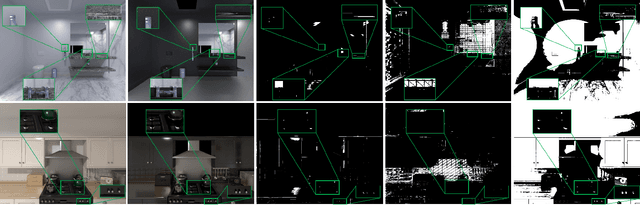

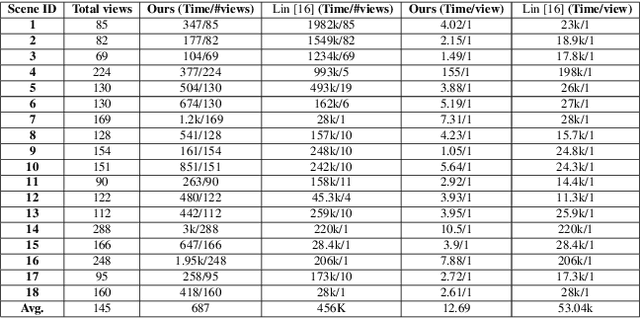

Abstract: Specular highlights are commonplace in images; however, detecting them, and in turn removing them, is particularly challenging. One reason for this is the difficulty of creating a dataset for training or evaluation, since in the real world we lack the necessary control over the environment. Therefore, we propose a novel physically-based rendered LIGHT Specularity (LIGHTS) Dataset for the evaluation of the specular highlight detection task. Our dataset consists of 18 high-quality architectural scenes, each rendered from multiple views. In total, we have 2,603 views, an average of 145 views per scene. Additionally, we propose a simple aggregation-based method for specular highlight detection that outperforms prior work by 3.6% on our dataset while taking two orders of magnitude less time.
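The abstract does not spell out the aggregation step, so what follows is only a minimal sketch of the general idea behind multi-view aggregation for highlight detection, not the authors' method. It assumes each surface point has already been matched across the views that see it, with one grey-level intensity in [0, 1] per view. Because specular highlights are view-dependent while diffuse appearance is not, an observation much brighter than the point's median across views is flagged. The function name `detect_specular` and the thresholds `ratio` and `min_intensity` are hypothetical.

```python
from statistics import median


def detect_specular(observations, ratio=1.5, min_intensity=0.6):
    """Flag view-dependent highlights for each surface point (illustrative heuristic).

    observations: dict mapping a point id to a list of (view_id, intensity)
    pairs, one per view in which that point is visible (intensity in [0, 1]).
    Returns the set of (point_id, view_id) pairs judged to be specular.
    """
    specular = set()
    for point_id, obs in observations.items():
        if len(obs) < 2:
            # A single view gives nothing to compare against.
            continue
        # The median across views approximates the view-independent (diffuse) appearance.
        diffuse = median(intensity for _, intensity in obs)
        for view_id, intensity in obs:
            # Hypothetical rule: bright in absolute terms AND much brighter than usual.
            if intensity >= min_intensity and intensity > ratio * diffuse:
                specular.add((point_id, view_id))
    return specular


observations = {
    "p0": [(0, 0.30), (1, 0.32), (2, 0.95)],  # bright only in view 2
    "p1": [(0, 0.50), (1, 0.52)],             # consistent across views
}
print(detect_specular(observations))  # {('p0', 2)}
```

The median is used because it is robust to the few views in which a highlight appears; a real pipeline would also need visibility reasoning and calibrated thresholds, which this sketch leaves out.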

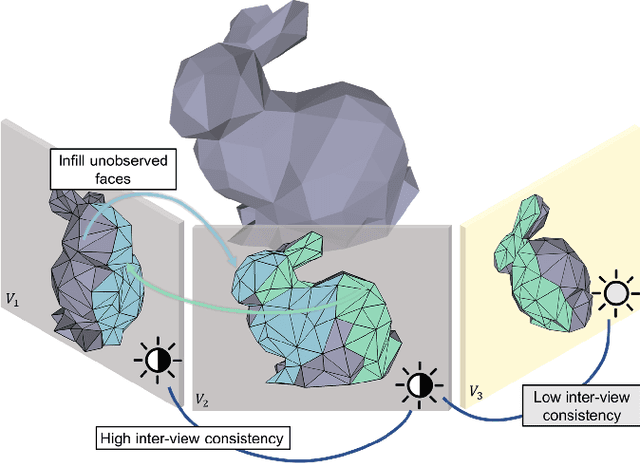

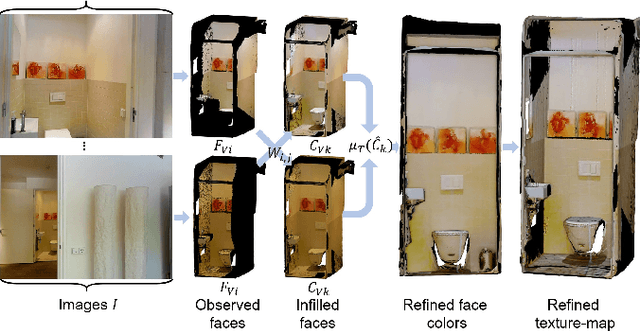

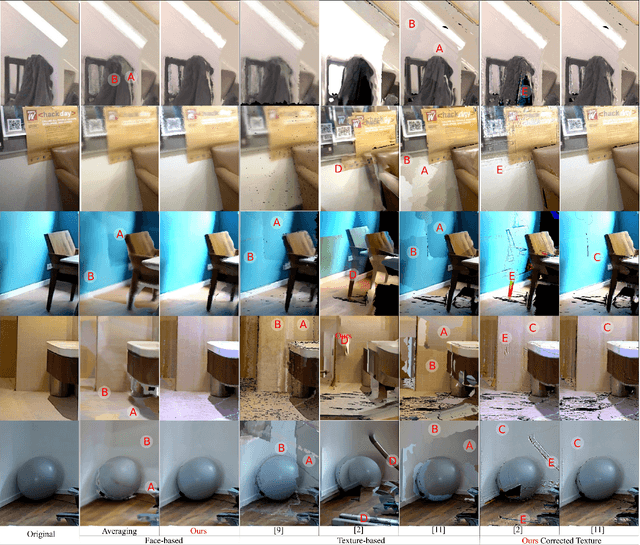

Consistent Mesh Colors for Multi-View Reconstructed 3D Scenes

Jan 26, 2021

Abstract: We address the issue of creating consistent mesh texture maps from scenes captured without color calibration. We find that the method used to aggregate the multiple views is crucial for creating spatially consistent meshes, without the need to explicitly optimize for spatial consistency. We compute a color prior from the cross-correlation of observable view faces and the faces per view to identify an optimal per-face color. We then use this color in a re-weighting ratio for the best-view texture, identified by prior mesh texturing work, to create a spatially consistent texture map. Although our method does not explicitly handle spatial consistency, it produces qualitatively more consistent results than other state-of-the-art techniques while being computationally more efficient. We evaluate on prior datasets and additionally on Matterport3D, showing qualitative improvements.
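The prior-and-re-weighting idea can be illustrated with a small sketch, written under stated assumptions rather than as the authors' implementation. Here each face's prior is simply the per-channel median of its mean colour over all views that see it (a hypothetical stand-in for the cross-correlation-based prior), and the best view is assumed to have been chosen already by an existing texturing method. The best view's texels are then multiplied by a per-channel ratio of prior to best-view mean. The names `face_color_prior` and `reweight_best_view` are hypothetical.

```python
from statistics import fmean, median


def face_color_prior(view_means):
    """Per-channel median of one face's mean RGB colour across the views that see it.

    Hypothetical stand-in for a cross-correlation-based colour prior.
    """
    return tuple(median(color[ch] for color in view_means) for ch in range(3))


def reweight_best_view(texels, prior, eps=1e-6):
    """Rescale the best view's texels so their mean colour matches the prior.

    texels: list of (r, g, b) floats in [0, 1] sampled from the best view.
    Each channel is multiplied by prior / best-view mean, then clamped to 1.0.
    """
    best_mean = [fmean(t[ch] for t in texels) for ch in range(3)]
    # Per-face, per-channel gain; eps guards against division by zero on black faces.
    gains = [prior[ch] / max(best_mean[ch], eps) for ch in range(3)]
    return [tuple(min(1.0, t[ch] * gains[ch]) for ch in range(3)) for t in texels]


prior = face_color_prior([(0.40, 0.40, 0.40), (0.50, 0.50, 0.50), (0.60, 0.60, 0.60)])
texels = [(0.5, 0.5, 0.5), (0.7, 0.7, 0.7)]  # best view is brighter than the prior
print(reweight_best_view(texels, prior))  # roughly [(0.417, ...), (0.583, ...)]
```

Scaling by a ratio, rather than replacing the texels with the prior colour, keeps the best view's texture detail while pulling its overall colour toward the multi-view consensus.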
