Mohamed A. Ismail

Detection of road traffic crashes based on collision estimation

Jul 26, 2022

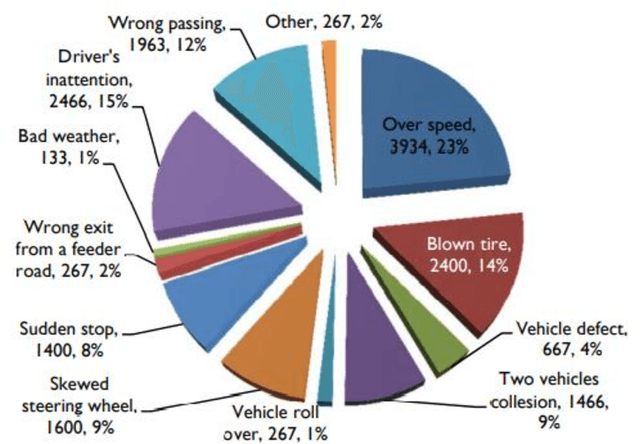

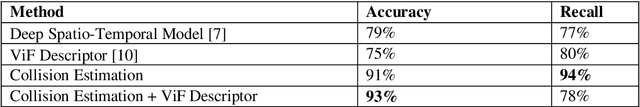

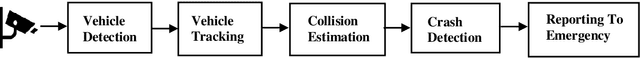

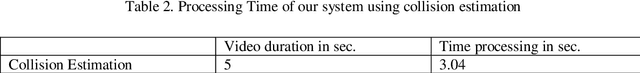

Abstract: This paper introduces a computer-vision framework that detects road traffic crashes (RTCs) using installed surveillance/CCTV cameras and reports them to emergency services in real time, with the exact location and time of the accident. The framework consists of five modules. The first detects vehicles using the YOLO architecture. The second tracks vehicles using the MOSSE tracker. The third applies a new approach that detects accidents based on collision estimation. The fourth determines, for each vehicle, whether a car accident has occurred, based on the violent flow descriptor (ViF) followed by an SVM classifier for crash prediction. In the last stage, if an accident is detected, the system notifies emergency services: a GPS module provides the location, time, and date of the accident, and a GSM module sends the notification. The main objective is to achieve higher accuracy with fewer false alarms and to implement a simple system based on a pipelining technique.

* 11 pages, 9 figures
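The abstract above does not spell out how collision estimation is computed, so the following is only a minimal sketch of one plausible reading: extrapolate each tracked bounding box along a constant velocity and report the first frame at which two boxes overlap. The `Track` type, the constant-velocity model, and the `horizon` parameter are all assumptions made for illustration, not the paper's method.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Track:
    """Hypothetical tracker output: top-left corner, size, velocity (pixels/frame)."""
    x: float
    y: float
    w: float
    h: float
    vx: float
    vy: float


def advance(t: Track, frames: int) -> Track:
    """Extrapolate a box assuming constant velocity (an assumption of this sketch)."""
    return Track(t.x + t.vx * frames, t.y + t.vy * frames, t.w, t.h, t.vx, t.vy)


def boxes_overlap(a: Track, b: Track) -> bool:
    """Axis-aligned overlap test; boxes that only touch at an edge do not overlap."""
    return (a.x < b.x + b.w and b.x < a.x + a.w and
            a.y < b.y + b.h and b.y < a.y + a.h)


def frames_to_collision(a: Track, b: Track, horizon: int = 30) -> Optional[int]:
    """Return the first frame offset in [0, horizon] where the boxes overlap, else None."""
    for k in range(horizon + 1):
        if boxes_overlap(advance(a, k), advance(b, k)):
            return k
    return None


# Two vehicles driving toward each other in the same lane.
car_a = Track(x=0, y=0, w=10, h=10, vx=5, vy=0)
car_b = Track(x=50, y=0, w=10, h=10, vx=-5, vy=0)
print(frames_to_collision(car_a, car_b))
```

In a pipeline like the one described, a pair flagged this way would presumably be passed on to the ViF and SVM stage for confirmation rather than reported directly.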

VSCAN: An Enhanced Video Summarization using Density-based Spatial Clustering

May 01, 2014

Abstract: In this paper, we present VSCAN, a novel approach for generating static video summaries. The approach uses a modified DBSCAN clustering algorithm to summarize video content from both the color and texture features of the video frames. The paper also introduces an enhanced evaluation method that depends on color and texture features. Video summaries generated by VSCAN are compared with summaries generated by other approaches found in the literature and with those created by users. Experimental results indicate that the video summaries generated by VSCAN have a higher quality than those generated by other approaches.
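To make the clustering idea concrete, here is a minimal sketch of density-based summarization using the standard DBSCAN algorithm, not the modified variant that VSCAN introduces. Each frame is assumed to be already reduced to a small feature vector (for example, a color or texture descriptor); the `key_frames` helper, the choice of the first frame per cluster as its representative, and the `eps` and `min_pts` values are illustrative assumptions.

```python
import math

NOISE = -1


def dbscan(points, eps, min_pts):
    """Standard DBSCAN: return one cluster label per point, with NOISE (-1) for outliers."""
    labels = [None] * len(points)  # None means "not visited yet"

    def neighbors(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins the cluster, not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:  # core point: keep expanding the cluster
                queue.extend(nbrs)
        cluster += 1
    return labels


def key_frames(features, eps=0.2, min_pts=2):
    """Pick the first frame of each cluster as its key frame; noise frames are dropped."""
    first = {}
    for idx, label in enumerate(dbscan(features, eps, min_pts)):
        if label != NOISE and label not in first:
            first[label] = idx
    return sorted(first.values())


# Toy 2-D frame features: two visually similar groups and one outlier frame.
frames = [(0.0, 0.0), (0.05, 0.0), (0.1, 0.0), (1.0, 1.0), (1.05, 1.0), (5.0, 5.0)]
print(key_frames(frames))
```

Treating noise frames as discardable is one design choice; a summarizer could equally keep them as candidate key frames, since an isolated frame may mark a distinct shot.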

miRNA and Gene Expression based Cancer Classification using Self-Learning and Co-Training Approaches

Jan 18, 2014

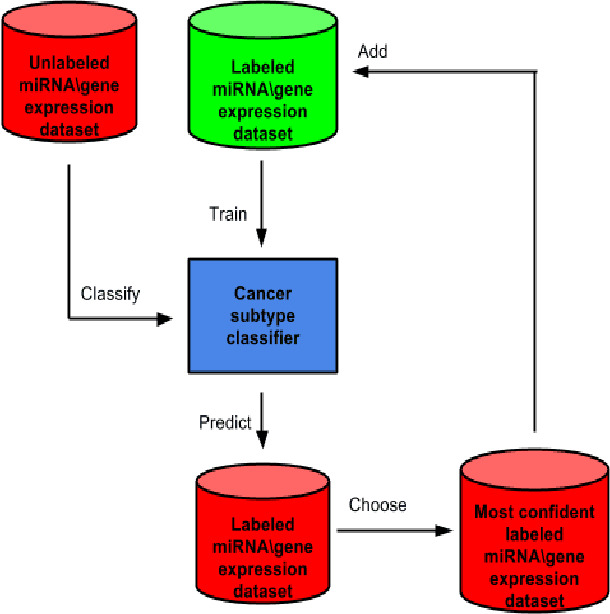

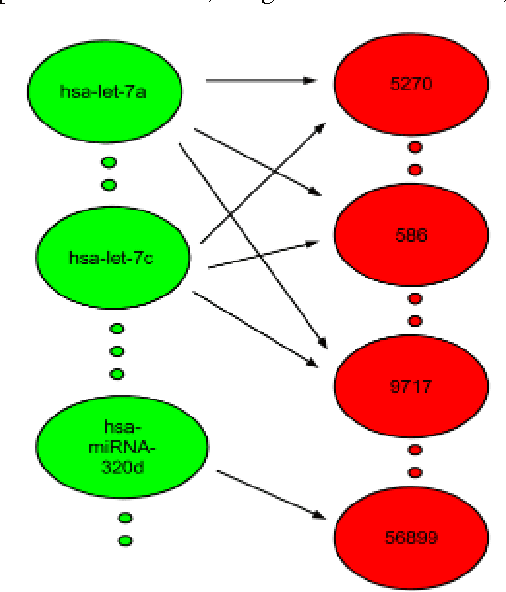

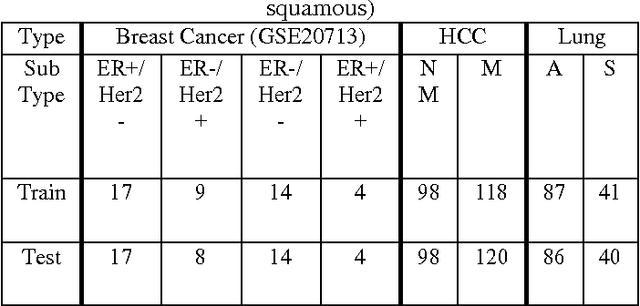

Abstract: miRNA and gene expression profiles have proved useful for classifying cancer samples, and efficient classifiers have recently been sought and developed. A number of attempts to classify cancer samples using miRNA/gene expression profiles are known in the literature. However, semi-supervised learning models have only recently been used in bioinformatics to exploit the large corpora of publicly available sets, and using both labeled and unlabeled sets to train sample classifiers has not previously been considered for gene and miRNA expression sets. Moreover, there is a motivation to integrate miRNA and gene expression in semi-supervised cancer classification, as together they provide more information on the characteristics of cancer samples. In this paper, two semi-supervised machine learning approaches, namely self-learning and co-training, are adapted to enhance the quality of cancer sample classification. These approaches exploit the large public corpora to enrich the training data. In self-learning, miRNA-based and gene-based classifiers are enhanced independently, while in co-training, miRNA and gene expression profiles are used simultaneously to provide different views of cancer samples. To our knowledge, this is the first attempt to apply these learning approaches to cancer classification. The approaches were evaluated using breast cancer, hepatocellular carcinoma (HCC), and lung cancer expression sets. Results show up to a 20% improvement in F1-measure over Random Forests and SVM classifiers. Co-training also outperforms the Low Density Separation (LDS) approach, with around a 25% improvement in F1-measure on breast cancer.
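As an illustration of the self-learning idea only (co-training is not shown), the sketch below repeatedly trains a classifier on the labeled samples, labels the unlabeled samples it is confident about, and adds them to the training set. The nearest-centroid base classifier, the distance-ratio confidence score, and the `threshold` and `max_rounds` parameters are assumptions chosen to keep the example dependency-free; the abstract does not say they match the paper's setup.

```python
import math


def centroids(X, y):
    """Mean feature vector per class label."""
    sums, counts = {}, {}
    for x, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(x))
        for d, value in enumerate(x):
            acc[d] += value
        counts[label] = counts.get(label, 0) + 1
    return {lab: tuple(s / counts[lab] for s in acc) for lab, acc in sums.items()}


def predict(cents, x):
    """Return (label, confidence). Needs at least two classes.

    Confidence is 1 - d1 / (d1 + d2) for the two nearest centroids, so it
    ranges from 0.5 (tie) to 1.0 (sample sits exactly on a centroid).
    """
    ranked = sorted((math.dist(c, x), lab) for lab, c in cents.items())
    (d1, label), (d2, _) = ranked[0], ranked[1]
    confidence = 1.0 - d1 / (d1 + d2) if d1 + d2 > 0 else 0.0
    return label, confidence


def self_train(X_lab, y_lab, X_unlab, threshold=0.8, max_rounds=10):
    """Grow the labeled set with confidently pseudo-labeled samples."""
    X, y = list(X_lab), list(y_lab)
    pool = list(X_unlab)
    for _ in range(max_rounds):
        cents = centroids(X, y)
        remaining, added = [], 0
        for x in pool:
            label, confidence = predict(cents, x)
            if confidence >= threshold:
                X.append(x)
                y.append(label)
                added += 1
            else:
                remaining.append(x)
        pool = remaining
        if added == 0:  # nothing confident left to add
            break
    return centroids(X, y), pool


# Toy 1-D "expression" values: two labeled samples, three unlabeled ones.
model, leftover = self_train([(0.0,), (10.0,)], ["normal", "tumor"],
                             [(1.0,), (9.0,), (5.0,)])
print(model, leftover)
```

The ambiguous sample midway between the classes stays in the unlabeled pool, which is the point of the confidence threshold: only samples the current classifier is sure about are allowed to influence the next round.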
