Mirko Meboldt

DeGauss: Dynamic-Static Decomposition with Gaussian Splatting for Distractor-free 3D Reconstruction

Mar 17, 2025

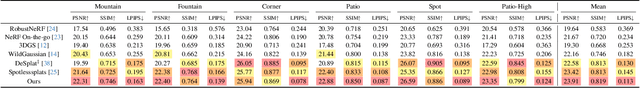

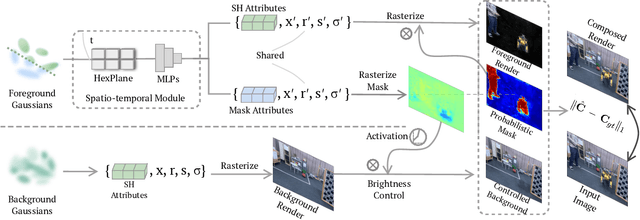

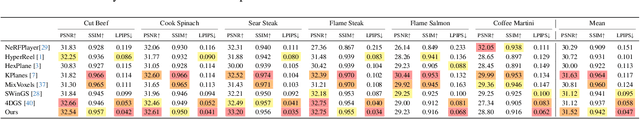

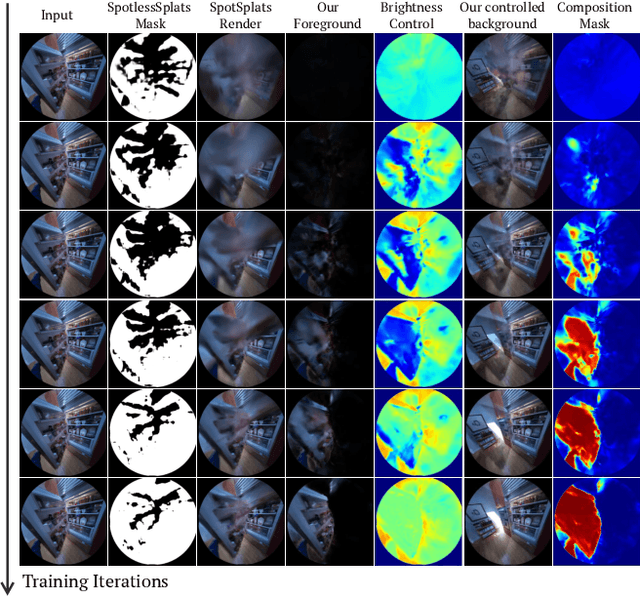

Abstract: Reconstructing clean, distractor-free 3D scenes from real-world captures remains a significant challenge, particularly in highly dynamic and cluttered settings such as egocentric videos. To tackle this problem, we introduce DeGauss, a simple and robust self-supervised framework for dynamic scene reconstruction based on a decoupled dynamic-static Gaussian Splatting design. DeGauss models dynamic elements with foreground Gaussians and static content with background Gaussians, using a probabilistic mask to coordinate their composition and enable independent yet complementary optimization. DeGauss generalizes robustly across a wide range of real-world scenarios, from casual image collections to long, dynamic egocentric videos, without relying on complex heuristics or extensive supervision. Experiments on benchmarks including NeRF-on-the-go, ADT, AEA, Hot3D, and EPIC-Fields demonstrate that DeGauss consistently outperforms existing methods, establishing a strong baseline for generalizable, distractor-free 3D reconstruction in highly dynamic, interaction-rich environments.
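The abstract does not spell out how the probabilistic mask combines the two renderings. As a minimal sketch, assuming a per-pixel convex blend (an assumption made here for illustration, not the paper's stated formula), the composition of a foreground and a background rendering could look like the following. The function name `composite` and the flat list-of-pixels layout are hypothetical.

```python
from typing import List

Pixel = List[float]  # RGB channels, each assumed to lie in [0, 1]


def composite(foreground: List[Pixel],
              background: List[Pixel],
              mask: List[float]) -> List[Pixel]:
    """Blend two renderings per pixel: mask * fg + (1 - mask) * bg.

    Assumption: mask[i] is the probability that pixel i belongs to the
    dynamic (foreground) branch. This is an illustrative convex blend,
    not necessarily the composition used by DeGauss.
    """
    if not (len(foreground) == len(background) == len(mask)):
        raise ValueError("foreground, background and mask must have equal length")

    blended: List[Pixel] = []
    for fg, bg, m in zip(foreground, background, mask):
        if not 0.0 <= m <= 1.0:
            raise ValueError("mask values must be probabilities in [0, 1]")
        # Channel-wise convex combination of the two renderings.
        blended.append([m * f + (1.0 - m) * b for f, b in zip(fg, bg)])
    return blended
```

Under this assumption, a mask value near 1 makes a pixel depend almost entirely on the foreground branch and a value near 0 on the background branch, which is one way the two sets of Gaussians could be optimized independently yet still explain the same image.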

Automatized Self-Supervised Learning for Skin Lesion Screening

Nov 11, 2023

Abstract: The incidence rates of melanoma, the deadliest form of skin cancer, have been increasing steadily worldwide, presenting a significant challenge to dermatologists. Early detection of melanoma is crucial for improving patient survival rates, but identifying suspicious lesions through ugly duckling (UD) screening, the current method used for skin cancer screening, can be challenging and often requires expertise in pigmented lesions. To address these challenges and improve patient outcomes, an artificial intelligence (AI) decision support tool was developed to assist dermatologists in identifying UD from wide-field patient images. The tool uses a state-of-the-art object detection algorithm to identify and extract all skin lesions from patient images, which are then sorted by suspiciousness using a self-supervised AI algorithm. A clinical validation study was conducted to evaluate the tool's performance, which demonstrated an average sensitivity of 93% for the top-10 AI-identified UDs on skin lesions selected by the majority of experts in pigmented skin lesions. The study also found that dermatologists' confidence increased, and the average majority agreement with the top-10 AI-identified UDs improved to 100% when assisted by AI. The development of this AI decision support tool aims to address the shortage of specialists, enable at-risk patients to receive faster consultations, and understand the impact of AI-assisted screening. The tool's automation can assist dermatologists in identifying suspicious lesions and provide a more objective assessment, reducing subjectivity in the screening process. The future steps for this project include expanding the dataset to include histologically confirmed melanoma cases and increasing the number of participants for clinical validation to strengthen the tool's reliability and adapt it for real-world consultation.
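To make the reported metric concrete, here is a minimal sketch of a top-k sensitivity computation for one patient. It assumes sensitivity means the fraction of expert-selected lesions that appear among the k lesions the AI ranks as most suspicious; the abstract does not define the metric in this detail, and the function name `top_k_sensitivity` and the lesion identifiers are hypothetical.

```python
from typing import Sequence, Set


def top_k_sensitivity(ranked_lesions: Sequence[str],
                      expert_lesions: Set[str],
                      k: int = 10) -> float:
    """Fraction of expert-selected lesions found in the top-k AI ranking.

    Assumptions: ranked_lesions is ordered from most to least suspicious,
    and expert_lesions holds the lesions chosen by the expert majority.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    if not expert_lesions:
        raise ValueError("expert_lesions must not be empty")

    top_k = set(ranked_lesions[:k])
    hits = sum(1 for lesion in expert_lesions if lesion in top_k)
    return hits / len(expert_lesions)
```

Averaging this value over all patients would give a study-level figure comparable in form to the reported 93%, under the assumptions stated above.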

POV-Surgery: A Dataset for Egocentric Hand and Tool Pose Estimation During Surgical Activities

Jul 19, 2023

Abstract: The surgical usage of Mixed Reality (MR) has received growing attention in areas such as surgical navigation systems, skill assessment, and robot-assisted surgeries. For such applications, pose estimation for hand and surgical instruments from an egocentric perspective is a fundamental task and has been studied extensively in the computer vision field in recent years. However, the development of this field has been impeded by a lack of datasets, especially in the surgical field, where bloody gloves and reflective metallic tools make it hard to obtain 3D pose annotations for hands and objects using conventional methods. To address this issue, we propose POV-Surgery, a large-scale, synthetic, egocentric dataset focusing on pose estimation for hands with different surgical gloves and three orthopedic surgical instruments, namely scalpel, friem, and diskplacer. Our dataset consists of 53 sequences and 88,329 frames, featuring high-resolution RGB-D video streams with activity annotations, accurate 3D and 2D annotations for hand-object pose, and 2D hand-object segmentation masks. We fine-tune the current SOTA methods on POV-Surgery and further show the generalizability when applying to real-life cases with surgical gloves and tools by extensive evaluations. The code and the dataset are publicly available at batfacewayne.github.io/POV_Surgery_io/.
