Milind Kulkarni

RT-kNNS Unbound: Using RT Cores to Accelerate Unrestricted Neighbor Search

May 26, 2023

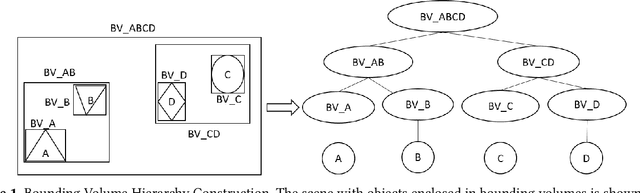

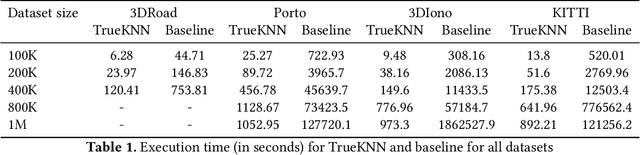

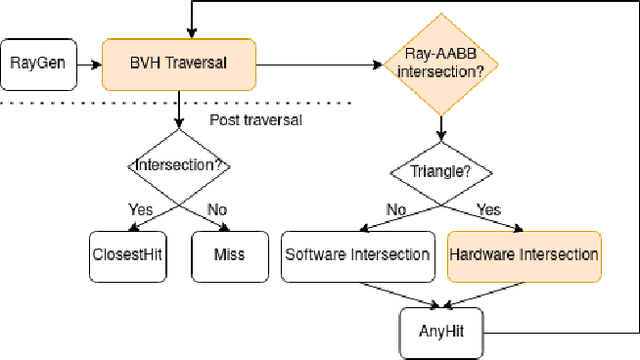

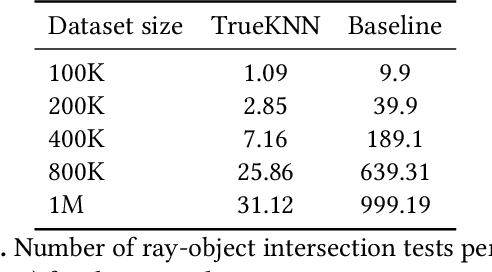

Abstract: The problem of identifying the k-Nearest Neighbors (kNNS) of a point has proven to be very useful both as a standalone application and as a subroutine in larger applications. Given its far-reaching applicability in areas such as machine learning and point clouds, extensive research has gone into leveraging GPU acceleration to solve this problem. Recent work has shown that using Ray Tracing cores in recent GPUs to accelerate kNNS is much more efficient compared to traditional acceleration using shader cores. However, the existing translation of kNNS to a ray tracing problem imposes a constraint on the search space for neighbors. Due to this, we can only use RT cores to accelerate fixed-radius kNNS, which requires the user to set a search radius a priori and hence can miss neighbors. In this work, we propose TrueKNN, the first unbounded RT-accelerated neighbor search. TrueKNN adopts an iterative approach where we incrementally grow the search space until all points have found their k neighbors. We show that our approach is orders of magnitude faster than existing approaches and can even be used to accelerate fixed-radius neighbor searches.
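The iterative idea in this abstract (run a fixed-radius search, then enlarge the radius for points that still lack k neighbors) can be illustrated with a minimal CPU sketch. This is not the TrueKNN implementation: a brute-force distance scan stands in for the RT-core fixed-radius query, and the function name `iterative_knn`, the `start_radius` parameter, and the doubling `growth` factor are all assumptions made for illustration only.

```python
import math


def iterative_knn(points, k, start_radius, growth=2.0):
    """Find k nearest neighbors by repeatedly growing a fixed search radius.

    Hypothetical sketch: the brute-force scan below is a stand-in for a
    hardware-accelerated fixed-radius query.
    """
    if k >= len(points):
        raise ValueError("k must be smaller than the number of points")
    if start_radius <= 0 or growth <= 1:
        raise ValueError("start_radius must be > 0 and growth must be > 1")

    results = {}
    pending = set(range(len(points)))  # points still missing neighbors
    radius = start_radius

    while pending:
        for i in list(pending):
            # Fixed-radius query: collect every other point within `radius`.
            hits = []
            for j, q in enumerate(points):
                if j == i:
                    continue
                d = math.dist(points[i], q)
                if d <= radius:
                    hits.append((d, j))
            # Once at least k points lie inside the radius, the k closest
            # of them are the true k nearest neighbors, because every
            # point outside the radius is farther away.
            if len(hits) >= k:
                hits.sort()
                results[i] = [j for _, j in hits[:k]]
                pending.discard(i)
        radius *= growth  # assumed growth schedule: geometric

    return results
```

Calling `iterative_knn([(0, 0), (1, 0), (2, 0), (10, 0)], k=2, start_radius=0.5)` resolves the three clustered points within the first few rounds, while the outlier at `(10, 0)` stays pending until the radius has grown enough to cover two neighbors. Points that finish early are dropped from later rounds, which is where an iterative scheme of this kind saves work.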

Quantum Computing Methods for Supervised Learning

Jun 22, 2020

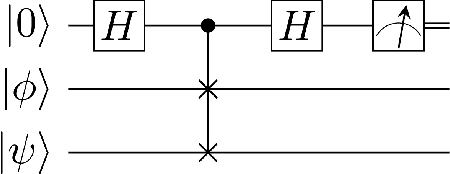

Abstract: The last two decades have seen an explosive growth in the theory and practice of both quantum computing and machine learning. Modern machine learning systems process huge volumes of data and demand massive computational power. As silicon semiconductor miniaturization approaches its physical limits, quantum computing is increasingly being considered to cater to these computational needs in the future. Small-scale quantum computers and quantum annealers have been built and are already being sold commercially. Quantum computers can benefit machine learning research and application across all science and engineering domains. However, owing to its roots in quantum mechanics, research in this field has so far been confined within the purview of the physics community, and most work is not easily accessible to researchers from other disciplines. In this paper, we provide a background and summarize key results of quantum computing before exploring its application to supervised machine learning problems. By eschewing results from physics that have little bearing on quantum computation, we hope to make this introduction accessible to data scientists, machine learning practitioners, and researchers from across disciplines.

Survey of Personalization Techniques for Federated Learning

Mar 19, 2020

Abstract: Federated learning enables machine learning models to learn from private decentralized data without compromising privacy. The standard formulation of federated learning produces one shared model for all clients. Statistical heterogeneity due to non-IID distribution of data across devices often leads to scenarios where, for some clients, the local models trained solely on their private data perform better than the global shared model, thus taking away their incentive to participate in the process. Several techniques have been proposed to personalize global models to work better for individual clients. This paper highlights the need for personalization and surveys recent research on this topic.
