Mikhail Katkov

Random Tree Model of Meaningful Memory

Dec 02, 2024

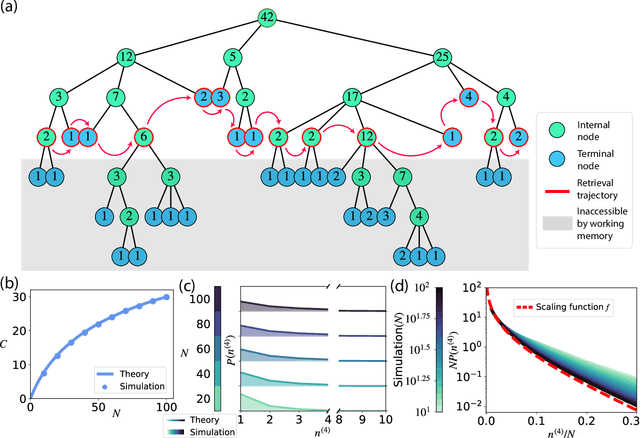

Abstract: Traditional studies of memory for meaningful narratives focus on specific stories and their semantic structures but do not address common quantitative features of recall across different narratives. We introduce a statistical ensemble of random trees to represent narratives as hierarchies of key points, where each node is a compressed representation of its descendant leaves, which are the original narrative segments. Recall from this hierarchical structure is modeled as constrained by working memory capacity. Our analytical solution aligns with observations from large-scale narrative recall experiments. Specifically, our model explains that (1) average recall length increases sublinearly with narrative length, and (2) individuals summarize increasingly longer narrative segments in each recall sentence. Additionally, the theory predicts that for sufficiently long narratives, a universal, scale-invariant limit emerges, where the fraction of a narrative summarized by a single recall sentence follows a distribution independent of narrative length.

Hierarchical Working Memory and a New Magic Number

Aug 14, 2024

Abstract: The extremely limited working memory span, typically around four items, contrasts sharply with our everyday experience of processing much larger streams of sensory information concurrently. This disparity suggests that working memory can organize information into compact representations such as chunks, yet the underlying neural mechanisms remain largely unknown. Here, we propose a recurrent neural network model for chunking within the framework of the synaptic theory of working memory. We show that by selectively suppressing groups of stimuli, the network can maintain and retrieve the stimuli in chunks, hence exceeding the basic capacity. Moreover, we show that our model can dynamically construct hierarchical representations within working memory through hierarchical chunking. A consequence of this proposed mechanism is a new limit on the number of items that can be stored and subsequently retrieved from working memory, depending only on the basic working memory capacity when chunking is not invoked. Predictions from our model were confirmed by analyzing single-unit responses in epileptic patients and memory experiments with verbal material. Our work provides a novel conceptual and analytical framework for understanding the on-the-fly organization of information in the brain that is crucial for cognition.

Using large language models to study human memory for meaningful narratives

Nov 28, 2023

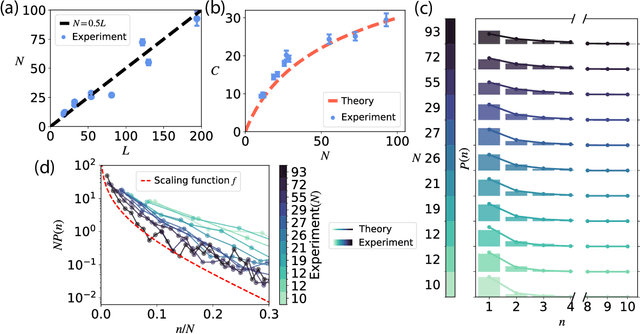

Abstract: One of the most impressive achievements of the AI revolution is the development of large language models that can generate meaningful text and respond to instructions in plain English with no additional training necessary. Here we show that language models can be used as a scientific instrument for studying human memory for meaningful material. We developed a pipeline for designing large-scale memory experiments and analyzing the obtained results. We performed online memory experiments with a large number of participants and collected recognition and recall data for narratives of different lengths. We found that both recall and recognition performance scale linearly with narrative length. Furthermore, in order to investigate the role of narrative comprehension in memory, we repeated these experiments using scrambled versions of the presented stories. We found that even though recall performance declined significantly, recognition remained largely unaffected. Interestingly, recalls in this condition seem to follow the original narrative order rather than the scrambled presentation, pointing to a contextual reconstruction of the story in memory.
