Miguel A. Lago

Medical Image Quality Metrics for Foveated Model Observers

Feb 09, 2021

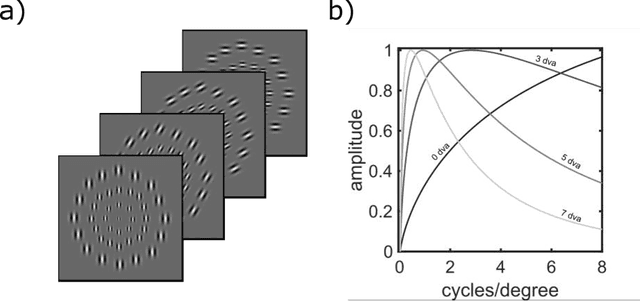

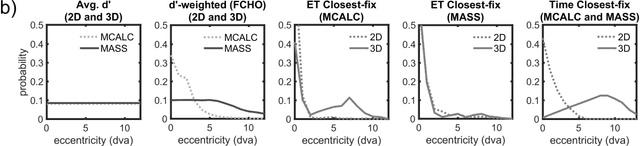

Abstract: A recently proposed model observer mimics the foveated nature of the human visual system by processing the entire image with varying spatial detail, executing eye movements, and scrolling through slices. The model can predict how human search performance changes with signal type and modality (2D vs. 3D), yet its implementation is computationally expensive and time-consuming. Here, we evaluate various image quality metrics that extend the classic index of detectability expressions, and we assess foveated model observers for location-known-exactly tasks. We evaluated foveated extensions of a Channelized Hotelling model and a Non-prewhitening model with an eye filter. The proposed methods calculate a model index of detectability (d') for each retinal eccentricity and combine these values with a weighting function into a single detectability metric. We assessed different versions of the weighting function that varied in the measurements of the human observers' search they required (no measurements, eye movement patterns, or image size and median search times). We show that the index of detectability across eccentricities, weighted using the eye movement patterns of observers, best predicted human performance in 2D vs. 3D search for a small microcalcification-like signal and a larger mass-like signal. The metric with a weighting function based on median search times was the second best at predicting human results. The findings provide a set of model observer tools for evaluating image quality in the early stages of imaging system evaluation or design without implementing the more computationally complex foveated search model.
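As a rough illustration of the combination step described in the abstract, the sketch below collapses per-eccentricity d' values into one number using a normalized weighted average. The abstract does not state the exact combination rule, so the weighted average, the function name `combine_dprime`, and all numeric values here are assumptions made for illustration only, not the paper's method or data.

```python
def combine_dprime(dprimes, weights):
    """Combine per-eccentricity d' values into one metric.

    Assumption: a normalized weighted average. The paper's actual
    combination rule may differ.
    """
    if len(dprimes) != len(weights):
        raise ValueError("dprimes and weights must have the same length")
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    total = float(sum(weights))
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    # Normalize the weights so they sum to 1, then take the weighted mean.
    return sum(d * (w / total) for d, w in zip(dprimes, weights))


# Hypothetical d' values at eccentricities of 0, 3, 6, and 9 degrees.
dprime_by_ecc = [3.0, 2.0, 1.0, 0.5]

# "No measurements" variant: every eccentricity weighted equally (assumed).
uniform_weights = [1, 1, 1, 1]

# "Eye movement" variant: hypothetical counts of how often the signal
# location fell at each eccentricity during observers' search.
gaze_weights = [40, 30, 20, 10]

d_uniform = combine_dprime(dprime_by_ecc, uniform_weights)
d_gaze = combine_dprime(dprime_by_ecc, gaze_weights)
print(d_uniform, d_gaze)
```

With these made-up inputs, the gaze-based weights put more mass on small eccentricities, where d' is higher, so the combined value comes out larger than under uniform weighting. A weighting derived from image size and median search time would slot into the same function as a third `weights` list.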
