Michael Resch

Deep Reinforcement Learning for Computational Fluid Dynamics on HPC Systems

May 13, 2022

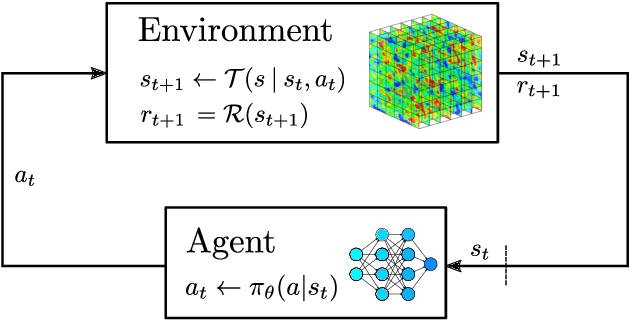

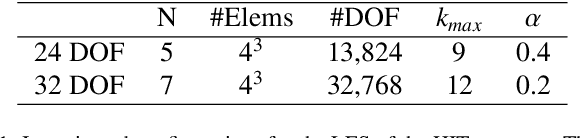

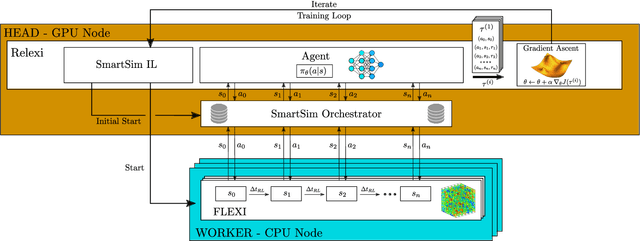

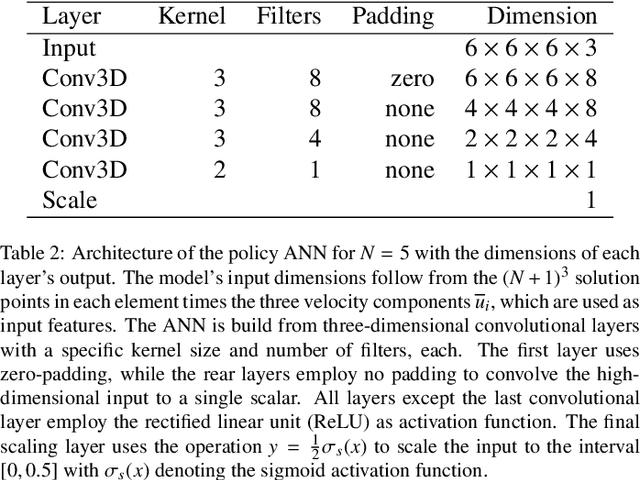

Abstract: Reinforcement learning (RL) is highly suitable for devising control strategies in the context of dynamical systems. A prominent instance of such a dynamical system is the system of equations governing fluid dynamics. Recent research results indicate that RL-augmented computational fluid dynamics (CFD) solvers can exceed the current state of the art, for example in the field of turbulence modeling. However, while in supervised learning the training data can be generated a priori in an offline manner, RL requires constant run-time interaction and data exchange with the CFD solver during training. In order to leverage the potential of RL-enhanced CFD, the interaction between the CFD solver and the RL algorithm thus has to be implemented efficiently on high-performance computing (HPC) hardware. To this end, we present Relexi as a scalable RL framework that bridges the gap between machine learning workflows and modern CFD solvers on HPC systems, providing both components with their specialized hardware. Relexi is built with modularity in mind and allows easy integration of various HPC solvers by means of the in-memory data transfer provided by the SmartSim library. Here, we demonstrate that the Relexi framework can scale up to hundreds of parallel environments on thousands of cores. This makes it possible to leverage modern HPC resources to either enable larger problems or faster turnaround times. Finally, we demonstrate the potential of an RL-augmented CFD solver by finding a control strategy for optimal eddy viscosity selection in large eddy simulations.

A Multi-Layered Approach for Measuring the Simulation-to-Reality Gap of Radar Perception for Autonomous Driving

Jun 20, 2021

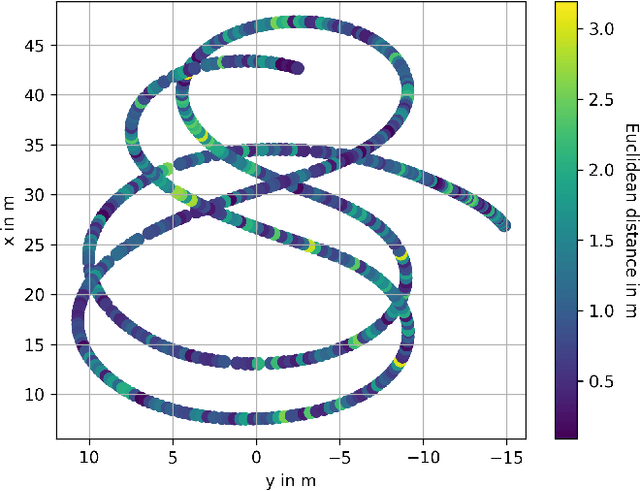

Abstract: With the increasing safety validation requirements for the release of a self-driving car, alternative approaches, such as simulation-based testing, are emerging in addition to conventional real-world testing. In order to rely on virtual tests, the employed sensor models have to be validated. For this reason, it is necessary to quantify the discrepancy between simulation and reality in order to determine whether a certain fidelity is sufficient for the intended use. There exists no sound method to measure this simulation-to-reality gap of radar perception for autonomous driving. We address this problem by introducing a multi-layered evaluation approach, which consists of a combination of an explicit and an implicit sensor model evaluation. The former directly evaluates the realism of the synthetically generated sensor data, while the latter refers to an evaluation of a downstream target application. In order to demonstrate the method, we evaluated the fidelity of three typical radar model types (ideal, data-driven, ray tracing-based) and their applicability for virtually testing radar-based multi-object tracking. We have shown the effectiveness of the proposed approach in terms of providing an in-depth sensor model assessment that renders existing disparities visible and enables a realistic estimation of the overall model fidelity across different scenarios.

Deep Evaluation Metric: Learning to Evaluate Simulated Radar Point Clouds for Virtual Testing of Autonomous Driving

Apr 14, 2021

Abstract: The usage of environment sensor models for virtual testing is a promising approach to reduce the testing effort of autonomous driving. However, in order to deduce any statements regarding the performance of an autonomous driving function based on simulation, the sensor model has to be validated to determine the discrepancy between the synthetic and real sensor data. Since a certain degree of divergence can be assumed to exist, the sufficient level of fidelity must be determined, which poses a major challenge. In particular, a method for quantifying the fidelity of a sensor model does not exist and the problem of defining an appropriate metric remains. In this work, we train a neural network to distinguish real and simulated radar sensor data with the purpose of learning the latent features of real radar point clouds. Furthermore, we propose the classifier's confidence score for the 'real radar point cloud' class as a metric to determine the degree of fidelity of synthetically generated radar data. An evaluation of the presented approach demonstrates that the proposed deep evaluation metric outperforms conventional metrics in terms of its capability to identify characteristic differences between real and simulated radar data.
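
The idea of using a classifier's confidence for the 'real radar point cloud' class as a fidelity score can be illustrated with a minimal sketch. It is not the authors' implementation. The two-class logit layout, the `REAL_CLASS` index, the function names, and averaging the per-cloud confidences over a set of simulated point clouds are all assumptions made for illustration. The logits stand in for the output of a hypothetical trained real-vs-simulated classifier.

```python
import math

# Assumption: the classifier emits two logits per point cloud,
# index 0 = "simulated", index 1 = "real radar point cloud".
REAL_CLASS = 1


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def real_confidence(logits, real_class=REAL_CLASS):
    """Confidence the (hypothetical) classifier assigns to the 'real' class."""
    return softmax(logits)[real_class]


def fidelity_score(logits_per_cloud, real_class=REAL_CLASS):
    """Mean 'real' confidence over a set of simulated point clouds.

    Values near 1 mean the classifier takes the simulated data for real;
    values near 0 mean it separates them easily. Averaging is an
    illustrative choice, not something the abstract specifies.
    """
    if not logits_per_cloud:
        raise ValueError("need at least one point cloud")
    scores = [real_confidence(logits, real_class) for logits in logits_per_cloud]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Made-up logits for three simulated point clouds.
    simulated_logits = [[2.0, -1.0], [0.0, 0.0], [-0.5, 1.5]]
    print(f"fidelity score: {fidelity_score(simulated_logits):.3f}")
```

Under these assumptions, a sensor model whose output the classifier cannot tell apart from real measurements would score higher than one with easily detectable artifacts.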

A Sensitivity Analysis Approach for Evaluating a Radar Simulation for Virtual Testing of Autonomous Driving Functions

Aug 13, 2020

Abstract: Simulation-based testing is a promising approach to significantly reduce the validation effort of automated driving functions. Realistic models of environment perception sensors such as camera, radar and lidar play a key role in this testing strategy. A generally accepted method to validate these sensor models does not yet exist. Radar in particular has traditionally been one of the most difficult sensors to model. Although promising as an alternative to real test drives, virtual tests are time-consuming due to the fact that they simulate the entire radar system in detail, using computation-intensive simulation techniques to approximate the propagation of electromagnetic waves. In this paper, we introduce a sensitivity analysis approach for developing and evaluating a radar simulation, with the objective of identifying the parameters with the greatest impact on the system under test. A modular radar system simulation is presented and parameterized to conduct a sensitivity analysis in order to evaluate a spatial clustering algorithm as the system under test, while comparing the output from the radar model to real driving measurements to ensure realistic model behavior. An evaluation of the presented approach demonstrates that results from different situations can be traced back to the contribution of the individual sub-modules of the radar simulation.
