Michael J. Morris

Interpretable Models for Detecting and Monitoring Elevated Intracranial Pressure

Mar 04, 2024

Abstract: Detecting elevated intracranial pressure (ICP) is crucial in diagnosing and managing various neurological conditions. Changes in ICP are transmitted to the optic nerve sheath (ONS) and alter its diameter, which can be measured with ultrasound imaging devices. However, interpreting sonographic images of the ONS can be challenging. In this work, we propose two systems that actively monitor the ONS diameter throughout an ultrasound video and then predict whether ICP is elevated. To construct our systems, we leverage subject matter expert (SME) guidance, structuring our processing pipeline according to the SMEs' collection procedure while prioritizing interpretability and computational efficiency. We conduct a number of experiments demonstrating that our proposed systems outperform various baselines. One of our SMEs then manually validates our top system's performance, lending further credibility to our approach and demonstrating its potential utility in a clinical setting.
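The video-level decision described in the abstract (track the ONS diameter per frame, then make one final call) could be sketched roughly as follows. This is a minimal illustration and not the authors' pipeline: the function name `predict_elevated_icp`, the median aggregation, the `min_valid_frames` guard, and the `threshold_mm` parameter are all assumptions, and no clinical cutoff value is implied.

```python
from statistics import median
from typing import Optional, Sequence


def predict_elevated_icp(
    diameters_mm: Sequence[Optional[float]],
    threshold_mm: float,
    min_valid_frames: int = 3,
) -> Optional[bool]:
    """Aggregate per-frame ONS diameters into a single video-level prediction.

    diameters_mm holds one measurement per frame, or None where no diameter
    could be measured. threshold_mm is a hypothetical, caller-supplied cutoff.
    Returns None when too few frames yielded a measurement.
    """
    # Keep only frames in which a diameter was actually measured.
    valid = [d for d in diameters_mm if d is not None]
    if len(valid) < min_valid_frames:
        return None
    # The median is robust to a few badly measured frames (an assumed choice).
    return median(valid) > threshold_mm
```

Returning `None` instead of forcing a label keeps the result interpretable: a caller can tell "not elevated" apart from "not enough usable frames".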

MobilePTX: Sparse Coding for Pneumothorax Detection Given Limited Training Examples

Dec 08, 2022

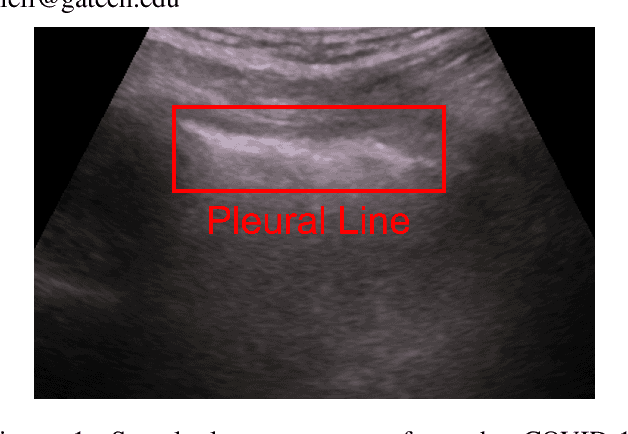

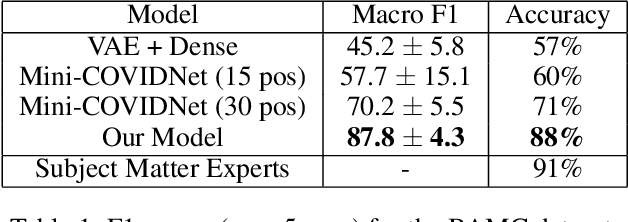

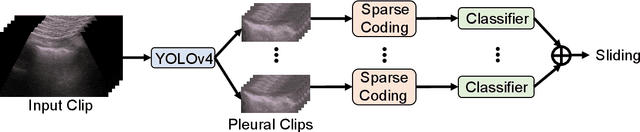

Abstract: Point-of-Care Ultrasound (POCUS) refers to clinician-performed and interpreted ultrasonography at the patient's bedside. Interpreting these images requires a high level of expertise, which may not be available during emergencies. In this paper, we support POCUS by developing classifiers that can aid medical professionals by diagnosing whether or not a patient has pneumothorax. We decomposed the task into multiple steps, using YOLOv4 to extract relevant regions of the video and a 3D sparse coding model to represent video features. Given the difficulty in acquiring positive training videos, we trained a small-data classifier with a maximum of 15 positive and 32 negative examples. To counteract this limitation, we leveraged subject matter expert (SME) knowledge to limit the hypothesis space, thus reducing the cost of data collection. We present results using two lung ultrasound datasets and demonstrate that our model is capable of achieving performance on par with SMEs in pneumothorax identification. We then developed an iOS application that runs our full system in less than 4 seconds on an iPad Pro, and less than 8 seconds on an iPhone 13 Pro, labeling key regions in the lung sonogram to provide interpretable diagnoses.
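For readers unfamiliar with sparse coding, the idea of representing a signal with a few active dictionary atoms can be illustrated with a small ISTA (iterative shrinkage-thresholding) solver. This is a generic sketch on plain vectors, not the 3D sparse coding model from the paper; the dictionary `D`, the penalty `lam`, and the function names are assumptions chosen for illustration.

```python
import numpy as np


def soft_threshold(x: np.ndarray, t: float) -> np.ndarray:
    """Shrink each entry of x toward zero by t (proximal operator of the L1 norm)."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)


def ista_sparse_code(D: np.ndarray, y: np.ndarray, lam: float = 0.1,
                     n_iter: int = 200) -> np.ndarray:
    """Approximately minimize 0.5 * ||y - D a||^2 + lam * ||a||_1 over a.

    D is a (signal_dim, n_atoms) dictionary, y is a signal of length signal_dim.
    """
    # Step size 1/L, where L = ||D||_2^2 is the Lipschitz constant of the gradient.
    L = np.linalg.norm(D, 2) ** 2
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - y)                 # gradient of the quadratic term
        a = soft_threshold(a - grad / L, lam / L)  # gradient step, then shrinkage
    return a
```

With an identity dictionary the solution is simply the soft-thresholded signal, which makes a convenient sanity check; small coefficients are driven exactly to zero, which is what makes the resulting code sparse.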
