Michael C. Koval

Configuration Lattices for Planar Contact Manipulation Under Uncertainty

Apr 30, 2016

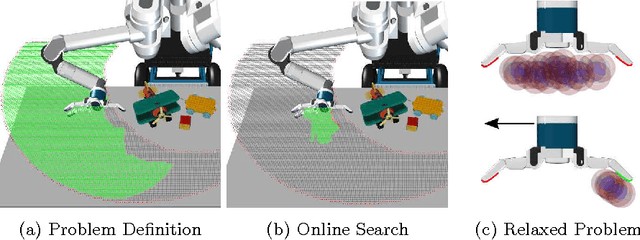

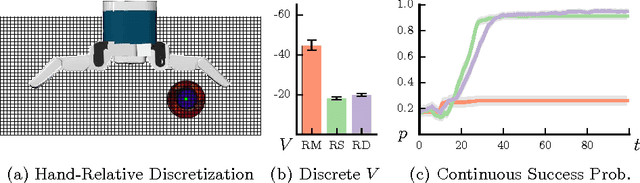

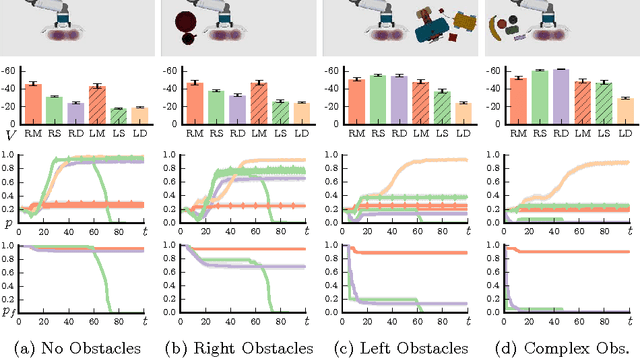

Abstract: This work addresses the challenge of a robot using real-time feedback from contact sensors to reliably manipulate a movable object on a cluttered tabletop. We formulate contact manipulation as a partially observable Markov decision process (POMDP) in the joint space of robot configurations and object poses. The POMDP formulation enables the robot to actively gather information and reduce uncertainty in the object pose. It also incorporates all major constraints on robot manipulation: kinematic reachability, self-collision, and collision with obstacles. To solve the POMDP, we apply DESPOT, a state-of-the-art online POMDP algorithm. Our approach leverages two key ideas for computational efficiency. First, it constructs the configuration-space lattice lazily by interleaving lattice construction with online POMDP planning. Second, it combines online and offline POMDP planning by solving a relaxed POMDP offline and using that solution to guide the online search. We evaluated the proposed approach on a seven-degree-of-freedom robot arm in simulated environments. It significantly outperforms several existing algorithms, including some commonly used heuristics for contact manipulation under uncertainty.
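The "lazy construction" idea can be illustrated with a small sketch: lattice vertices are expanded, and their neighbors validity-checked, only when a planner first asks for them, and the result is cached. This assumes a uniform grid lattice over integer joint indices and a hypothetical `is_valid` callback standing in for the reachability and collision checks; it omits the DESPOT planner entirely and is not the authors' implementation.

```python
from typing import Callable, Dict, List, Tuple

# Lattice coordinates: integer multiples of a (hypothetical) joint-space resolution.
Config = Tuple[int, ...]


class LazyLattice:
    """Configuration-space lattice whose edges are built only on demand."""

    def __init__(self, dof: int, is_valid: Callable[[Config], bool]):
        self.dof = dof
        # Hypothetical validity check (kinematics, self-collision, obstacles).
        self.is_valid = is_valid
        # Cache: vertex -> list of valid neighboring vertices.
        self._succ: Dict[Config, List[Config]] = {}
        # Number of validity checks performed so far.
        self.checks = 0

    def successors(self, q: Config) -> List[Config]:
        # Expand q only the first time a planner requests its neighbors.
        if q not in self._succ:
            neighbors: List[Config] = []
            for axis in range(self.dof):
                for step in (-1, 1):
                    nq = list(q)
                    nq[axis] += step
                    candidate = tuple(nq)
                    self.checks += 1
                    if self.is_valid(candidate):
                        neighbors.append(candidate)
            self._succ[q] = neighbors
        return self._succ[q]

    def num_expanded(self) -> int:
        return len(self._succ)


if __name__ == "__main__":
    # Hypothetical obstacle: configurations whose first joint index exceeds 2 are invalid.
    lattice = LazyLattice(dof=7, is_valid=lambda q: q[0] <= 2)
    start: Config = (0,) * 7
    print(len(lattice.successors(start)))  # 14: one step in each direction on 7 joints
    lattice.successors(start)              # cached, so no new validity checks
    print(lattice.checks)                  # 14
```

Because the cache is filled only along the states the online search actually visits, the cost of validity checking scales with the explored part of the lattice rather than with the full grid, which is exponential in the number of joints.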

The Manifold Particle Filter for State Estimation on High-dimensional Implicit Manifolds

Apr 25, 2016

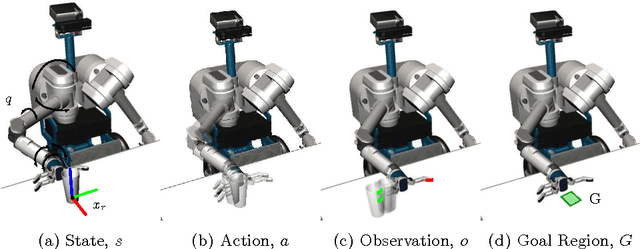

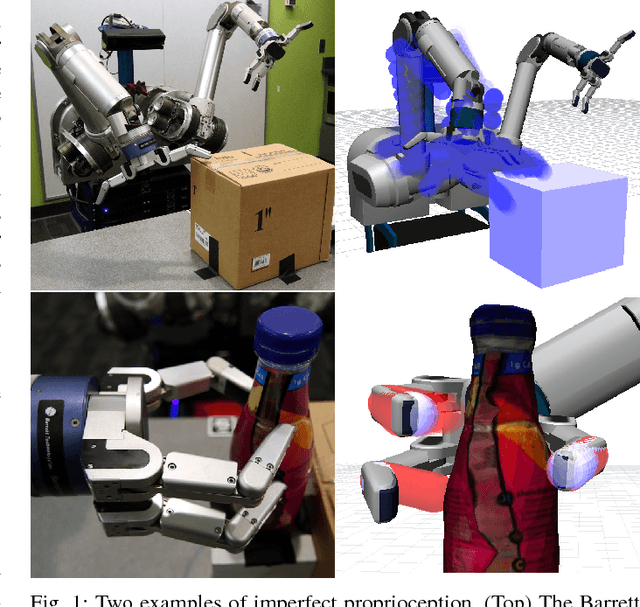

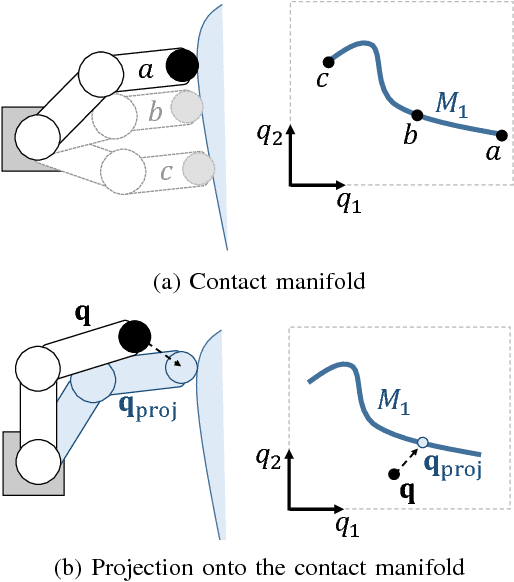

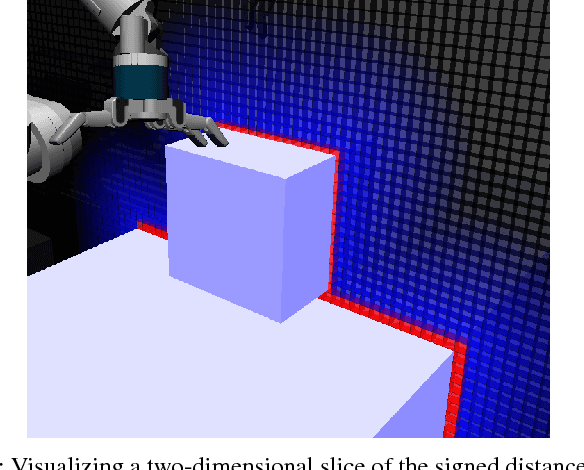

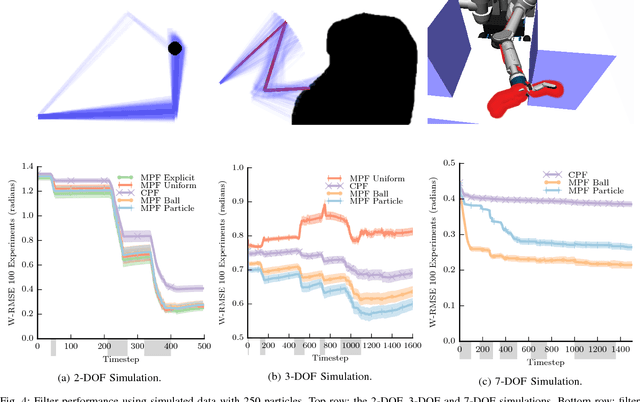

Abstract: We estimate the state of a noisy robot arm and underactuated hand using an Implicit Manifold Particle Filter (MPF) informed by touch sensors. As the robot touches the world, its state space collapses to a contact manifold that we represent implicitly using a signed distance field. This allows us to extend the MPF to higher-dimensional (six or more) state spaces. Earlier work, which represents the contact manifold explicitly, only demonstrated the MPF in two or three dimensions. Through a series of experiments, we show that the implicit MPF converges faster and is more accurate than a conventional particle filter during periods of persistent contact. We present three methods of sampling the implicit contact manifold and compare them experimentally.
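One simple way to draw particles on an implicitly represented manifold is to sample in the ambient state space and project each sample onto the zero level set of the signed distance field. The sketch below assumes a toy SDF (distance to a unit hypersphere in six dimensions) standing in for the contact manifold, and uses finite-difference gradients with Newton-style steps. It illustrates the general idea only; it is not claimed to be any of the three sampling methods compared in the paper, and all function names are hypothetical.

```python
import math
import random
from typing import Callable, List

Vector = List[float]
SDF = Callable[[Vector], float]


def numerical_gradient(sdf: SDF, x: Vector, eps: float = 1e-6) -> Vector:
    """Central-difference estimate of the SDF gradient at x."""
    grad = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += eps
        xm[i] -= eps
        grad.append((sdf(xp) - sdf(xm)) / (2.0 * eps))
    return grad


def project_to_manifold(sdf: SDF, x: Vector, tol: float = 1e-6,
                        max_iters: int = 50) -> Vector:
    """Move x toward the zero level set {x : sdf(x) = 0}."""
    x = list(x)
    for _ in range(max_iters):
        d = sdf(x)
        if abs(d) < tol:
            break
        g = numerical_gradient(sdf, x)
        g2 = sum(gi * gi for gi in g)
        if g2 == 0.0:
            break  # gradient vanished; cannot project from this point
        # Newton step for a scalar constraint; dividing by |g|^2 handles
        # fields whose gradient norm is not exactly 1.
        x = [xi - d * gi / g2 for xi, gi in zip(x, g)]
    return x


def sample_contact_manifold(sdf: SDF, dim: int, n: int,
                            spread: float = 2.0, seed: int = 0) -> List[Vector]:
    """Draw n ambient samples uniformly and project each onto the manifold."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        x = [rng.uniform(-spread, spread) for _ in range(dim)]
        samples.append(project_to_manifold(sdf, x))
    return samples


def sphere_sdf(x: Vector) -> float:
    """Toy SDF: signed distance to the unit hypersphere."""
    return math.sqrt(sum(xi * xi for xi in x)) - 1.0


if __name__ == "__main__":
    particles = sample_contact_manifold(sphere_sdf, dim=6, n=100)
    worst = max(abs(sphere_sdf(p)) for p in particles)
    print(f"max |sdf| over particles: {worst:.2e}")
```

Since the projection needs only SDF evaluations, the same code runs unchanged in six or more dimensions, which is the property the implicit representation is meant to provide; a real filter would additionally weight and resample these particles using the sensor model.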
