Michael A. Warren

Self-Satisfied: An end-to-end framework for SAT generation and prediction

Oct 18, 2024

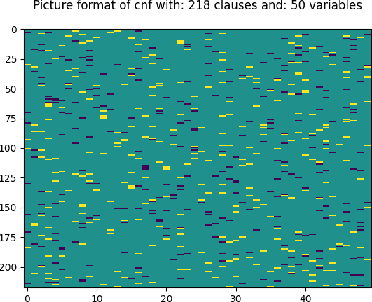

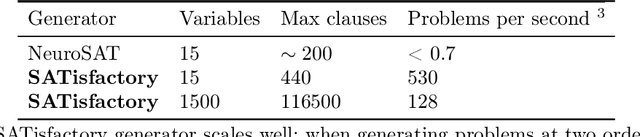

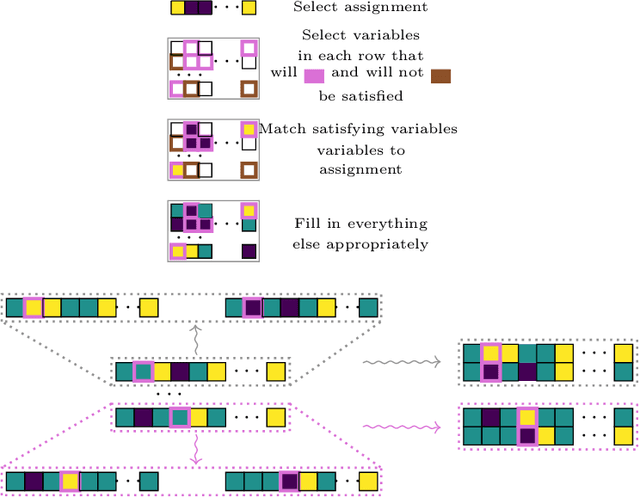

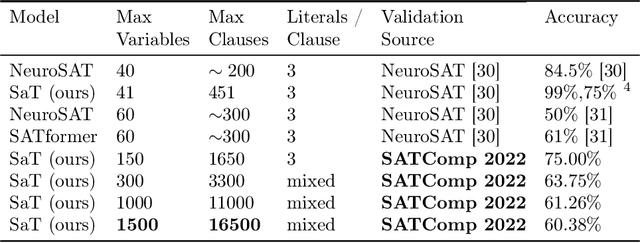

Abstract:The boolean satisfiability (SAT) problem asks whether there exists an assignment of boolean values to the variables of an arbitrary boolean formula making the formula evaluate to True. It is well-known that all NP-problems can be coded as SAT problems and therefore SAT is important both practically and theoretically. From both of these perspectives, better understanding the patterns and structure implicit in SAT data is of significant value. In this paper, we describe several advances that we believe will help open the door to such understanding: we introduce hardware accelerated algorithms for fast SAT problem generation, a geometric SAT encoding that enables the use of transformer architectures typically applied to vision tasks, and a simple yet effective technique we term head slicing for reducing sequence length representation inside transformer architectures. These advances allow us to scale our approach to SAT problems with thousands of variables and tens of thousands of clauses. We validate our architecture, termed Satisfiability Transformer (SaT), on the SAT prediction task with data from the SAT Competition (SATComp) 2022 problem sets. Prior related work either leveraged a pure machine learning approach, but could not handle SATComp-sized problems, or was hybrid in the sense of integrating a machine learning component in a standard SAT solving tool. Our pure machine learning approach achieves prediction accuracies comparable to recent work, but on problems that are an order of magnitude larger than previously demonstrated. A fundamental aspect of our work concerns the very nature of SAT data and its suitability for training machine learning models. We both describe experimental results that probe the landscape of where SAT data can be successfully used for learning and position these results within the broader context of complexity and learning.

Generate and Verify: Semantically Meaningful Formal Analysis of Neural Network Perception Systems

Dec 16, 2020

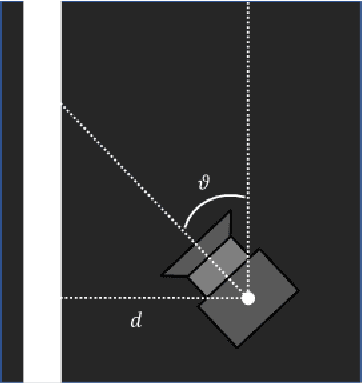

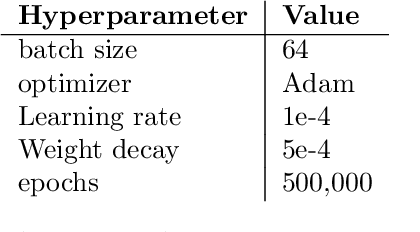

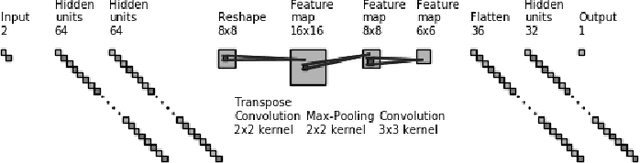

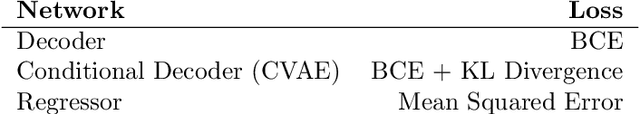

Abstract:Testing remains the primary method to evaluate the accuracy of neural network perception systems. Prior work on the formal verification of neural network perception models has been limited to notions of local adversarial robustness for classification with respect to individual image inputs. In this work, we propose a notion of global correctness for neural network perception models performing regression with respect to a generative neural network with a semantically meaningful latent space. That is, against an infinite set of images produced by a generative model over an interval of its latent space, we employ neural network verification to prove that the model will always produce estimates within some error bound of the ground truth. Where the perception model fails, we obtain semantically meaningful counter-examples which carry information on concrete states of the system of interest that can be used programmatically without human inspection of corresponding generated images. Our approach, Generate and Verify, provides a new technique to gather insight into the failure cases of neural network perception systems and provide meaningful guarantees of correct behavior in safety critical applications.

Introspection Learning

Feb 27, 2019

Abstract:Traditional reinforcement learning agents learn from experience, past or present, gained through interaction with their environment. Our approach synthesizes experience, without requiring an agent to interact with their environment, by asking the policy directly "Are there situations X, Y, and Z, such that in these situations you would select actions A, B, and C?" In this paper we present Introspection Learning, an algorithm that allows for the asking of these types of questions of neural network policies. Introspection Learning is reinforcement learning algorithm agnostic and the states returned may be used as an indicator of the health of the policy or to shape the policy in a myriad of ways. We demonstrate the usefulness of this algorithm both in the context of speeding up training and improving robustness with respect to safety constraints.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge