Meisam Yadollahzadeh-Tabari

Complex Emotion Recognition System using basic emotions via Facial Expression, EEG, and ECG Signals: a review

Sep 09, 2024

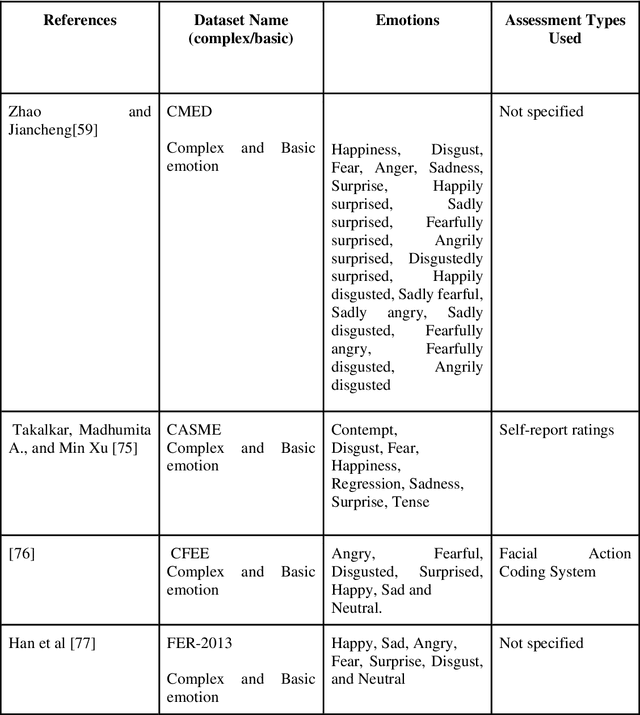

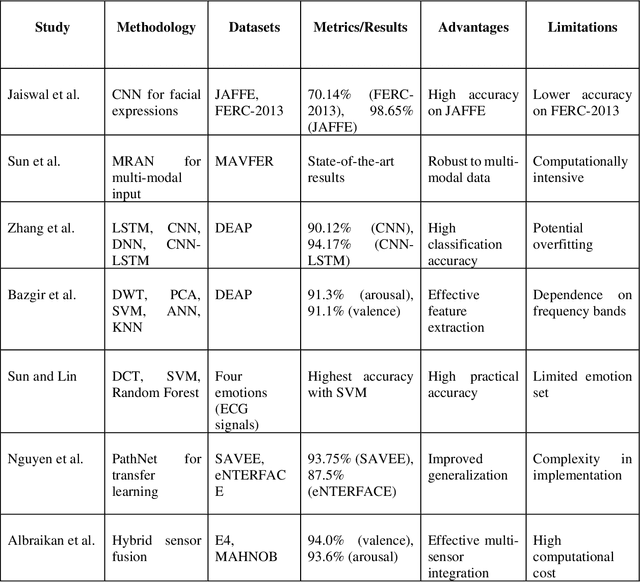

Abstract: The Complex Emotion Recognition System (CERS) deciphers complex emotional states by examining combinations of expressed basic emotions, their interconnections, and their dynamic variations. Using advanced algorithms, CERS provides insight into emotional dynamics, enabling a nuanced understanding and customized responses. Achieving this level of emotion recognition in machines requires knowledge distillation and the ability to comprehend novel concepts in a manner akin to human cognition. Developing AI systems that discern complex emotions is a substantial challenge with significant implications for affective computing. Furthermore, obtaining a sizable dataset for CERS is difficult because subtle emotions are hard to capture, which calls for specialized methods of data collection and processing. Incorporating physiological signals such as the electrocardiogram (ECG) and electroencephalogram (EEG) can notably enhance CERS by providing valuable information about the user's emotional state, improving dataset quality, and strengthening system dependability. This study presents a comprehensive literature review assessing the efficacy of machine learning, deep learning, and meta-learning approaches to both basic and complex emotion recognition using EEG signals, ECG signals, and facial expression datasets. The selected papers offer perspectives on the potential applications, clinical implications, and results of CERSs, with the objective of promoting their acceptance and integration into clinical decision-making. The study also highlights research gaps and challenges in understanding CERSs, encouraging further investigation by researchers and organizations in the field. Lastly, it underscores the significance of meta-learning approaches in improving CERS performance and guiding future research.
