Meie Fang

Drawing2CAD: Sequence-to-Sequence Learning for CAD Generation from Vectorized Drawings

Aug 26, 2025

Abstract: Computer-Aided Design (CAD) generative modeling is driving significant innovations across industrial applications. Recent works have shown remarkable progress in creating solid models from various inputs such as point clouds, meshes, and text descriptions. However, these methods fundamentally diverge from traditional industrial workflows that begin with 2D engineering drawings. The automatic generation of parametric CAD models from these 2D vector drawings remains underexplored despite being a critical step in engineering design. To address this gap, our key insight is to reframe CAD generation as a sequence-to-sequence learning problem where vector drawing primitives directly inform the generation of parametric CAD operations, preserving geometric precision and design intent throughout the transformation process. We propose Drawing2CAD, a framework with three key technical components: a network-friendly vector primitive representation that preserves precise geometric information, a dual-decoder transformer architecture that decouples command type and parameter generation while maintaining precise correspondence, and a soft target distribution loss function accommodating inherent flexibility in CAD parameters. To train and evaluate Drawing2CAD, we create CAD-VGDrawing, a dataset of paired engineering drawings and parametric CAD models, and conduct thorough experiments to demonstrate the effectiveness of our method. Code and dataset are available at https://github.com/lllssc/Drawing2CAD.
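The abstract does not spell out the form of the soft target distribution loss. As a rough illustration only, the sketch below assumes CAD parameters are quantized into bins and that probability mass is spread over bins near the ground-truth bin, so near-miss predictions are penalized less than distant ones. The function names and the `tolerance` and `decay` parameters are hypothetical and are not taken from the Drawing2CAD code.

```python
import math

def soft_target(true_bin, num_bins, tolerance=2, decay=0.5):
    """Build a target distribution that spreads mass over bins within
    `tolerance` of the true bin (assumed form, not the paper's)."""
    weights = [0.0] * num_bins
    for b in range(num_bins):
        distance = abs(b - true_bin)
        if distance <= tolerance:
            weights[b] = decay ** distance
    total = sum(weights)
    return [w / total for w in weights]

def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]

def soft_cross_entropy(logits, target):
    """Cross-entropy between predicted logits and a soft target."""
    log_probs = log_softmax(logits)
    return -sum(t * lp for t, lp in zip(target, log_probs))

# Ground-truth parameter falls in bin 3 of 8.
target = soft_target(true_bin=3, num_bins=8)

# One prediction peaks right next to the truth, the other far away.
near_logits = [0.0] * 8
near_logits[4] = 5.0
far_logits = [0.0] * 8
far_logits[7] = 5.0

near_loss = soft_cross_entropy(near_logits, target)
far_loss = soft_cross_entropy(far_logits, target)
```

Under these assumptions, `near_loss` comes out smaller than `far_loss`, which is the tolerance behaviour that a hard one-hot target would not provide.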

MsaMIL-Net: An End-to-End Multi-Scale Aware Multiple Instance Learning Network for Efficient Whole Slide Image Classification

Mar 12, 2025

Abstract: Bag-based Multiple Instance Learning (MIL) approaches have emerged as the mainstream methodology for Whole Slide Image (WSI) classification. However, most existing methods adopt a segmented training strategy, which first extracts features using a pre-trained feature extractor and then aggregates these features through MIL. This segmented training approach leads to insufficient collaborative optimization between the feature extraction network and the MIL network, preventing end-to-end joint optimization and thereby limiting the overall performance of the model. Additionally, conventional methods typically extract features from all patches of fixed size, ignoring the multi-scale observation characteristics of pathologists. This not only results in significant computational resource waste when tumor regions represent a minimal proportion (as in the Camelyon16 dataset) but may also lead the model to suboptimal solutions. To address these limitations, this paper proposes an end-to-end multi-scale WSI classification framework that integrates multi-scale feature extraction with multiple instance learning. Specifically, our approach includes: (1) a semantic feature filtering module to reduce interference from non-lesion areas; (2) a multi-scale feature extraction module to capture pathological information at different levels; and (3) a multi-scale fusion MIL module for global modeling and feature integration. Through an end-to-end training strategy, we simultaneously optimize both the feature extractor and MIL network, ensuring maximum compatibility between them. Experiments were conducted on three cross-center datasets (DigestPath2019, BCNB, and UBC-OCEAN). Results demonstrate that our proposed method outperforms existing state-of-the-art approaches in terms of both accuracy (ACC) and AUC metrics.
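For readers unfamiliar with bag-based MIL, the sketch below shows a generic attention pooling step that aggregates patch-level feature vectors into a single slide-level vector. This is a standard MIL aggregation pattern used here for illustration; it is not the paper's multi-scale fusion MIL module, and the name `attention_pool` and the scoring vector `w` are hypothetical.

```python
import math

def attention_pool(instances, w):
    """Aggregate a bag of instance feature vectors into one bag vector.

    Each instance gets a scalar score (dot product with `w`); scores are
    turned into attention weights with a softmax, and the bag vector is
    the attention-weighted sum of the instances.
    """
    scores = [sum(wi * xi for wi, xi in zip(w, x)) for x in instances]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    attn = [e / total for e in exps]
    dim = len(instances[0])
    bag = [sum(a * x[d] for a, x in zip(attn, instances)) for d in range(dim)]
    return bag, attn

# Toy bag: three patches with 2-D features; the third scores highest.
patches = [[0.0, 1.0], [0.0, 2.0], [5.0, 3.0]]
bag_vector, weights = attention_pool(patches, w=[1.0, 0.0])
```

In this toy bag the third patch receives almost all of the attention weight, so the bag vector is dominated by its features. In an end-to-end setting, gradients would flow through the attention weights back into the feature extractor.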

Boosting Illuminant Estimation in Deep Color Constancy through Enhancing Brightness Robustness

Feb 18, 2025

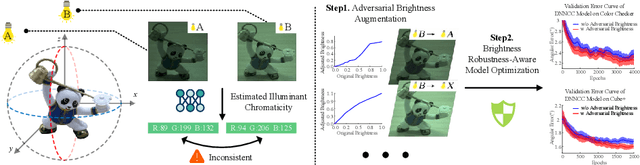

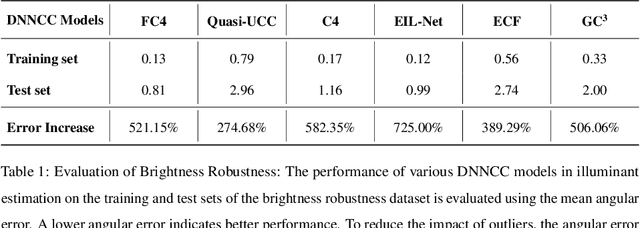

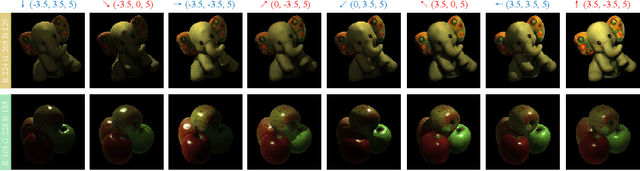

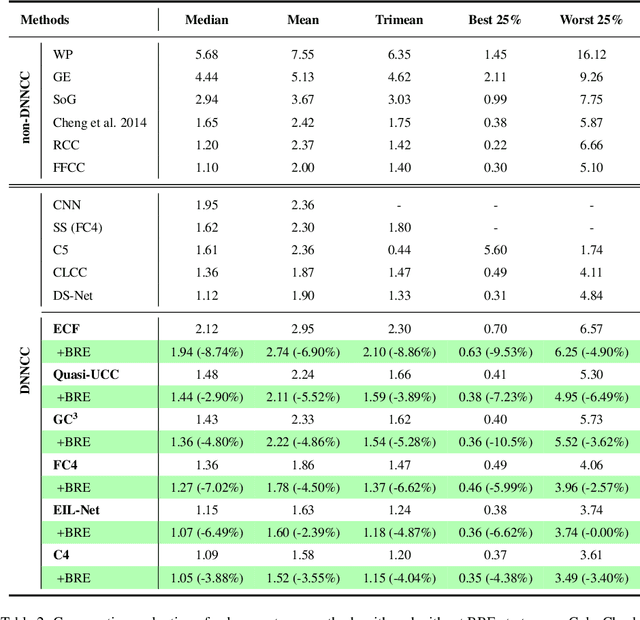

Abstract: Color constancy estimates illuminant chromaticity to correct color-biased images. Recently, Deep Neural Network-driven Color Constancy (DNNCC) models have made substantial advancements. Nevertheless, the potential risks in DNNCC due to the vulnerability of deep neural networks have not yet been explored. In this paper, we conduct the first investigation into the impact of brightness, a key factor in color constancy, on DNNCC from a robustness perspective. Our evaluation reveals that several mainstream DNNCC models exhibit high sensitivity to brightness despite their focus on chromaticity estimation. This sheds light on a potential limitation of existing DNNCC models: their sensitivity to brightness may hinder performance given the widespread brightness variations in real-world datasets. Building on the insights from our analysis, we propose a simple yet effective brightness robustness enhancement strategy for DNNCC models, termed BRE. The core of BRE is built upon the adaptive step-size adversarial brightness augmentation technique, which identifies high-risk brightness variation and generates augmented images via explicit brightness adjustment. Subsequently, BRE develops a brightness-robustness-aware model optimization strategy that integrates adversarial brightness training and brightness contrastive loss, significantly bolstering the brightness robustness of DNNCC models. BRE is hyperparameter-free and can be integrated into existing DNNCC models without incurring additional overhead during the testing phase. Experiments on two public color constancy datasets (ColorChecker and Cube+) demonstrate that the proposed BRE consistently enhances the illuminant estimation performance of existing DNNCC models, reducing the estimation error by an average of 5.04% across six mainstream DNNCC models, underscoring the critical role of enhancing brightness robustness in these models.
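To make the brightness argument concrete, the sketch below checks that a uniform brightness gain leaves illuminant chromaticity unchanged for a simple estimator. It assumes linear RGB without clipping, models brightness adjustment as a single global gain, and uses the gray-world estimator as a stand-in for a DNNCC model; none of this is BRE's adversarial augmentation, and the function names are hypothetical. Angular error is the usual metric for illuminant estimates.

```python
import math

def adjust_brightness(pixels, gain):
    """Scale every channel of every pixel by the same gain (no clipping)."""
    return [tuple(gain * c for c in p) for p in pixels]

def gray_world(pixels):
    """Estimate the illuminant as the per-channel mean of the image."""
    n = len(pixels)
    return tuple(sum(p[k] for p in pixels) / n for k in range(3))

def angular_error_deg(est, gt):
    """Angle in degrees between two illuminant vectors."""
    dot = sum(a * b for a, b in zip(est, gt))
    norm = math.sqrt(sum(a * a for a in est)) * math.sqrt(sum(b * b for b in gt))
    cos = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos))

pixels = [(0.2, 0.3, 0.1), (0.4, 0.5, 0.3)]
estimate = gray_world(pixels)
estimate_bright = gray_world(adjust_brightness(pixels, 1.5))

# Chromaticity is a direction, so a pure gain should not change it.
drift = angular_error_deg(estimate, estimate_bright)
```

Here `drift` is numerically zero: an ideal estimator depends only on the direction of the illuminant vector. The abstract's finding is that learned models deviate from this ideal, and the same comparison applied to a trained network's outputs would measure its sensitivity.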

RetouchUAA: Unconstrained Adversarial Attack via Image Retouching

Nov 27, 2023

Abstract: Deep Neural Networks (DNNs) are susceptible to adversarial examples. Conventional attacks generate controlled noise-like perturbations that fail to reflect real-world scenarios and are hard to interpret. In contrast, recent unconstrained attacks mimic natural image transformations occurring in the real world for perceptible but inconspicuous attacks, yet compromise realism due to neglect of image post-processing and uncontrolled attack direction. In this paper, we propose RetouchUAA, an unconstrained attack that exploits a real-life perturbation: image retouching styles, highlighting its potential threat to DNNs. Compared to existing attacks, RetouchUAA offers several notable advantages. Firstly, RetouchUAA excels in generating interpretable and realistic perturbations through two key designs: the image retouching attack framework and the retouching style guidance module. The former is a custom-designed, human-interpretable retouching framework for adversarial attacks; by linearizing images while modelling the local processing and retouching decision-making in human retouching behaviour, it provides an explicit and reasonable pipeline for understanding the robustness of DNNs against retouching. The latter guides the adversarial image towards standard retouching styles, thereby ensuring its realism. Secondly, owing to the design of the retouching decision regularization and the persistent attack strategy, RetouchUAA also exhibits outstanding attack capability and defense robustness, posing a heavy threat to DNNs. Experiments on ImageNet and Place365 reveal that RetouchUAA achieves nearly 100% white-box attack success against three DNNs, while achieving a better trade-off between image naturalness, transferability and defense robustness than baseline attacks.
