Mehdi Mirakhorli

SIExVulTS: Sensitive Information Exposure Vulnerability Detection System using Transformer Models and Static Analysis

Aug 26, 2025

Abstract: Sensitive Information Exposure (SIEx) vulnerabilities (CWE-200) remain a persistent and under-addressed threat across software systems, often leading to serious security breaches. Existing detection tools rarely target the diverse subcategories of CWE-200 or provide context-aware analysis of code-level data flows. Aims: This paper presents SIExVulTS, a novel vulnerability detection system that integrates transformer-based models with static analysis to identify and verify sensitive information exposure in Java applications. Method: SIExVulTS employs a three-stage architecture: (1) an Attack Surface Detection Engine that uses sentence embeddings to identify sensitive variables, strings, comments, and sinks; (2) an Exposure Analysis Engine that instantiates CodeQL queries aligned with the CWE-200 hierarchy; and (3) a Flow Verification Engine that leverages GraphCodeBERT to semantically validate source-to-sink flows. We evaluate SIExVulTS using three curated datasets, including real-world CVEs, a benchmark set of synthetic CWE-200 examples, and labeled flows from 31 open-source projects. Results: The Attack Surface Detection Engine achieved an average F1 score greater than 93%, the Exposure Analysis Engine achieved an F1 score of 85.71%, and the Flow Verification Engine increased precision from 22.61% to 87.23%. Moreover, SIExVulTS successfully uncovered six previously unknown CVEs in major Apache projects. Conclusions: The results demonstrate that SIExVulTS is effective and practical for improving software security against sensitive data exposure, addressing limitations of existing tools in detecting and verifying CWE-200 vulnerabilities.
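The results above are reported as precision and F1 scores. As a reminder of how these metrics relate to raw counts, and why a verification stage that discards false-positive flows raises precision, here is a minimal sketch. The function names and all counts are hypothetical illustrations; they are not taken from the paper's data or code.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of reported findings that are true positives."""
    total = tp + fp
    return tp / total if total else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of real vulnerabilities that were reported."""
    total = tp + fn
    return tp / total if total else 0.0


def f1(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall, written in terms of counts."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical counts: a static query reports 100 flows, 30 of them real.
before = precision(tp=30, fp=70)   # 0.30
# A verification step keeps 28 real flows and only 4 spurious ones.
after = precision(tp=28, fp=4)     # 0.875
print(f"precision before={before:.2%}, after={after:.2%}")
print(f"F1 after verification={f1(tp=28, fp=4, fn=2):.4f}")
```

In this toy setting the filter loses two true positives (lower recall) but removes most false positives, which is the general trade-off a flow-verification stage makes.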

A Large-Scale Exploit Instrumentation Study of AI/ML Supply Chain Attacks in Hugging Face Models

Oct 06, 2024

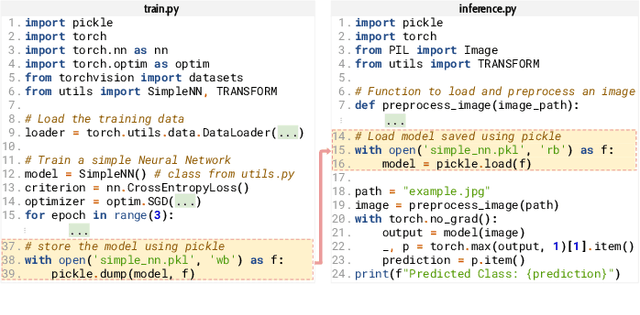

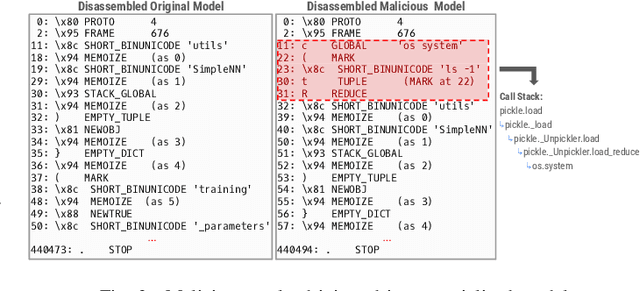

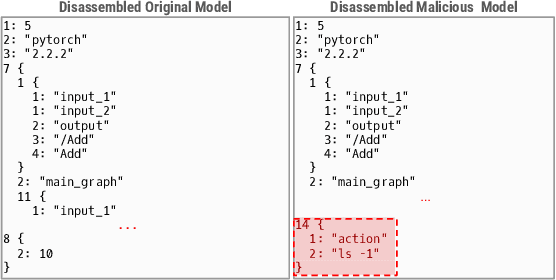

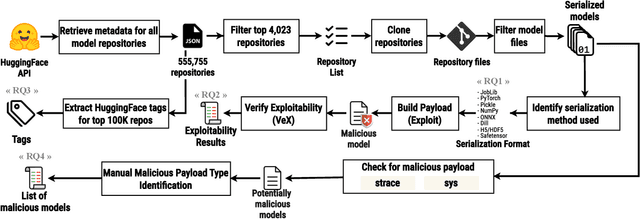

Abstract: The development of machine learning (ML) techniques has led to ample opportunities for developers to develop and deploy their own models. Hugging Face serves as an open-source platform where developers can share and download models in an effort to make ML development more collaborative. In order for models to be shared, they first need to be serialized. Certain Python serialization methods are considered unsafe, as they are vulnerable to object injection. This paper investigates the pervasiveness of these unsafe serialization methods across Hugging Face and demonstrates, through an exploitation approach, that models using unsafe serialization methods can be exploited and shared, creating an unsafe environment for ML developers. We investigate to what extent Hugging Face is able to flag repositories and files using unsafe serialization methods, and develop a technique to detect malicious models. Our results show that Hugging Face is home to a wide range of potentially vulnerable models.
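To make the object-injection risk concrete: Python's `pickle` format can instruct the unpickler to import and call arbitrary callables. The sketch below inspects a pickle byte stream for the opcodes that enable this, using the standard-library `pickletools.genops`, without ever unpickling the data. It is an illustrative heuristic under our own assumptions, not the detection technique developed in the paper; `SUSPICIOUS_OPCODES`, `suspicious_opcodes`, and `Demo` are hypothetical names.

```python
import pickle
import pickletools
from typing import Set

# Opcodes that import a global or call a callable during unpickling.
# Assumption: this list is a heuristic; legitimate pickles of custom
# classes use these opcodes too, so a hit means "inspect", not "malicious".
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "REDUCE", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX",
}


def suspicious_opcodes(data: bytes) -> Set[str]:
    """Return suspicious opcode names found in a pickle stream.

    The stream is only disassembled, never loaded, so nothing it
    references is imported or executed.
    """
    return {
        opcode.name
        for opcode, _arg, _pos in pickletools.genops(data)
        if opcode.name in SUSPICIOUS_OPCODES
    }


class Demo:
    """Stand-in for a payload: unpickling would call print("demo")."""

    def __reduce__(self):
        return (print, ("demo",))


plain = pickle.dumps([1, 2, {"a": 3}])   # containers and ints only
payload = pickle.dumps(Demo())           # serialized, but never loaded here

print(sorted(suspicious_opcodes(plain)))
print(sorted(suspicious_opcodes(payload)))
```

Plain containers of primitives need none of these opcodes, while any object that relies on `__reduce__` produces a global lookup followed by `REDUCE`, which is why opcode scanning is a common first-pass filter before loading untrusted model files.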

Lessons from the Use of Natural Language Inference in Requirements Engineering Tasks

Apr 24, 2024

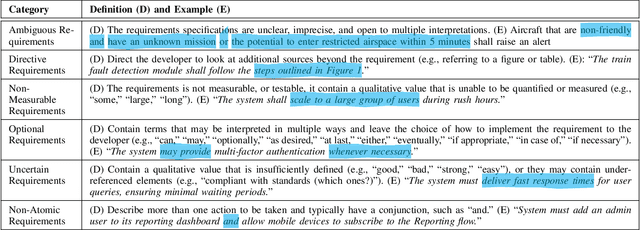

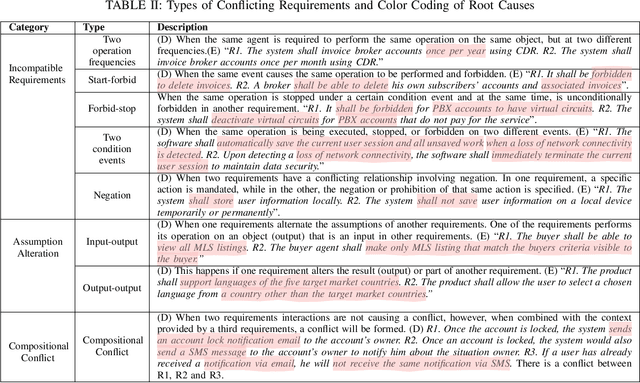

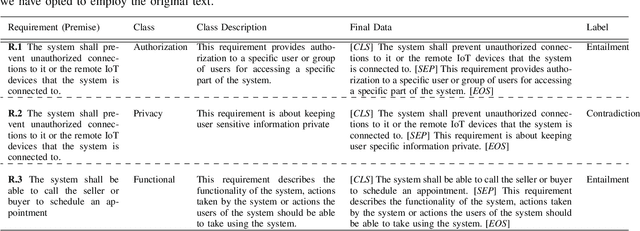

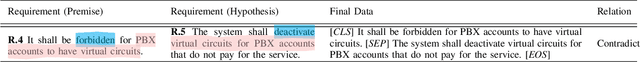

Abstract: We investigate the use of Natural Language Inference (NLI) in automating requirements engineering tasks. In particular, we focus on three tasks: requirements classification, identification of requirements specification defects, and detection of conflicts in stakeholders' requirements. While previous research has demonstrated significant benefit in using NLI as a universal method for a broad spectrum of natural language processing tasks, these advantages have not been investigated within the context of software requirements engineering. Therefore, we design experiments to evaluate the use of NLI in requirements analysis. We compare the performance of NLI with a spectrum of approaches, including prompt-based models, conventional transfer learning, chatbot models powered by Large Language Models (LLMs), and probabilistic models. Through experiments conducted under various learning settings, including conventional learning and zero-shot, we demonstrate conclusively that our NLI method surpasses classical NLP methods as well as other LLM-based and chatbot models in the analysis of requirements specifications. Additionally, we share lessons learned characterizing the learning settings that make NLI a suitable approach for automating requirements engineering tasks.

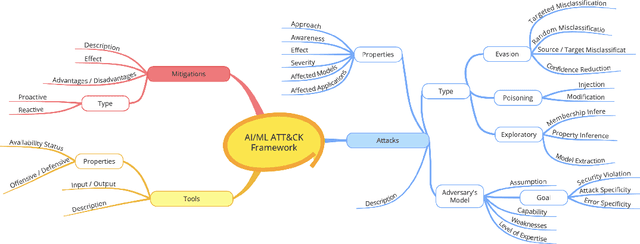

Attacks, Defenses, And Tools: A Framework To Facilitate Robust AI/ML Systems

Feb 18, 2022

Abstract: Software systems are increasingly relying on Artificial Intelligence (AI) and Machine Learning (ML) components. The emerging popularity of AI techniques in various application domains attracts malicious actors and adversaries. Therefore, the developers of AI-enabled software systems need to take into account various novel cyber-attacks and vulnerabilities that these systems may be susceptible to. This paper presents a framework to characterize attacks and weaknesses associated with AI-enabled systems and to provide mitigation techniques and defense strategies. This framework aims to support software designers in taking proactive measures in developing AI-enabled software, understanding the attack surface of such systems, and developing products that are resilient to various emerging attacks associated with ML. The developed framework covers a broad spectrum of attacks, mitigation techniques, and defensive and offensive tools. In this paper, we demonstrate the framework architecture and its major components, describe their attributes, and discuss the long-term goals of this research.
