Mohamad Fazelnia

Lessons from the Use of Natural Language Inference in Requirements Engineering Tasks

Apr 24, 2024

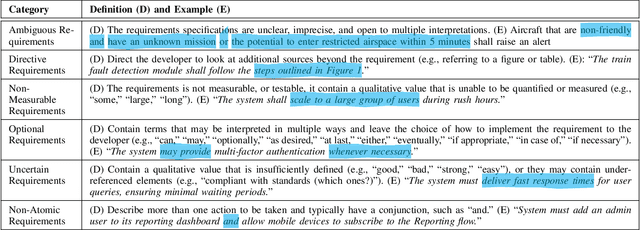

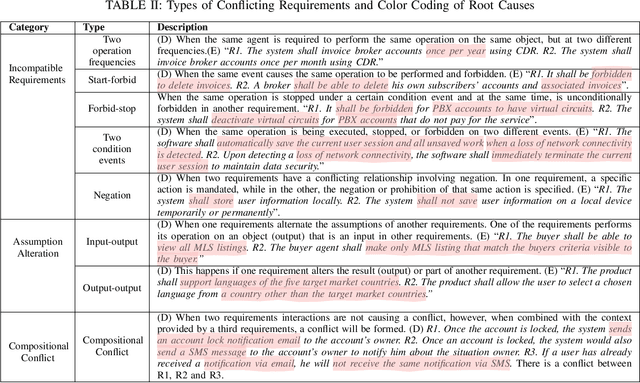

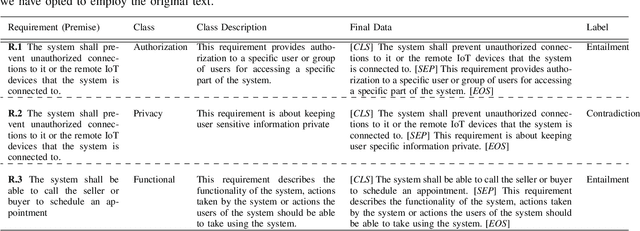

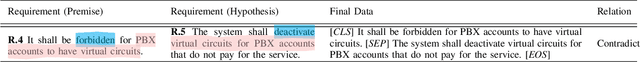

Abstract: We investigate the use of Natural Language Inference (NLI) in automating requirements engineering tasks. In particular, we focus on three tasks: requirements classification, identification of requirements specification defects, and detection of conflicts in stakeholders' requirements. While previous research has demonstrated significant benefit in using NLI as a universal method for a broad spectrum of natural language processing tasks, these advantages have not been investigated within the context of software requirements engineering. Therefore, we design experiments to evaluate the use of NLI in requirements analysis. We compare the performance of NLI with a spectrum of approaches, including prompt-based models, conventional transfer learning, chatbot models powered by Large Language Models (LLMs), and probabilistic models. Through experiments conducted under various learning settings, including conventional learning and zero-shot, we demonstrate conclusively that our NLI method surpasses classical NLP methods as well as other LLM-based and chatbot models in the analysis of requirements specifications. Additionally, we share lessons learned characterizing the learning settings that make NLI a suitable approach for automating requirements engineering tasks.

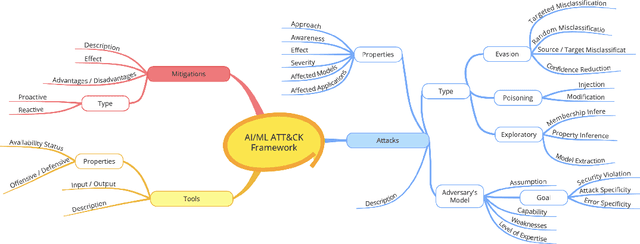

Attacks, Defenses, And Tools: A Framework To Facilitate Robust AI/ML Systems

Feb 18, 2022

Abstract: Software systems are increasingly relying on Artificial Intelligence (AI) and Machine Learning (ML) components. The emerging popularity of AI techniques in various application domains attracts malicious actors and adversaries. Therefore, the developers of AI-enabled software systems need to take into account various novel cyber-attacks and vulnerabilities that these systems may be susceptible to. This paper presents a framework to characterize attacks and weaknesses associated with AI-enabled systems and provide mitigation techniques and defense strategies. This framework aims to support software designers in taking proactive measures in developing AI-enabled software, understanding the attack surface of such systems, and developing products that are resilient to various emerging attacks associated with ML. The developed framework covers a broad spectrum of attacks, mitigation techniques, and defensive and offensive tools. In this paper, we demonstrate the framework architecture and its major components, describe their attributes, and discuss the long-term goals of this research.
