Mehak Maniktala

Enhancing a Student Productivity Model for Adaptive Problem-Solving Assistance

Jul 07, 2022

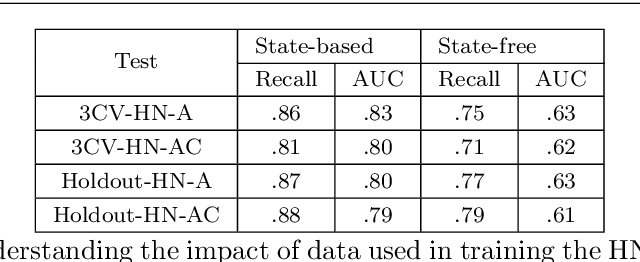

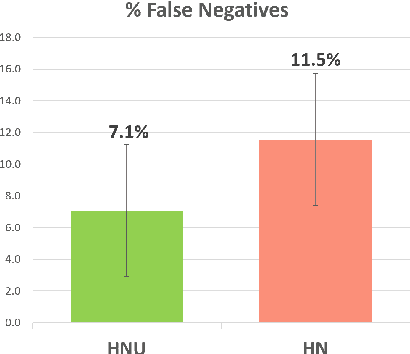

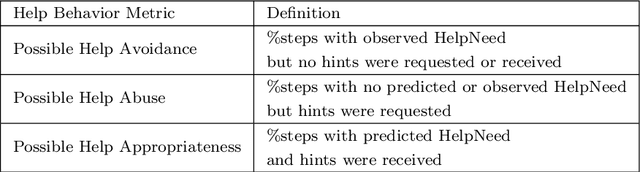

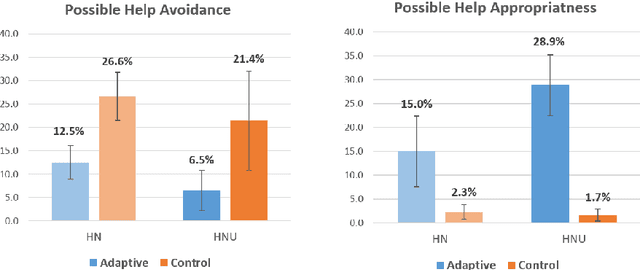

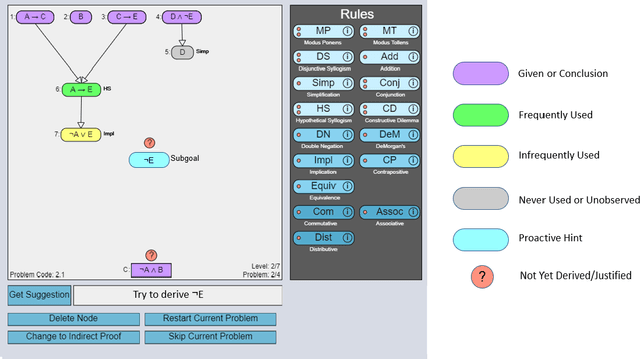

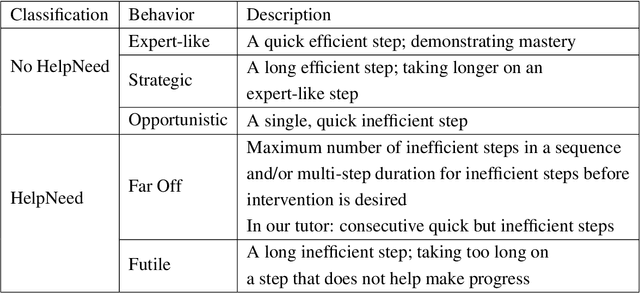

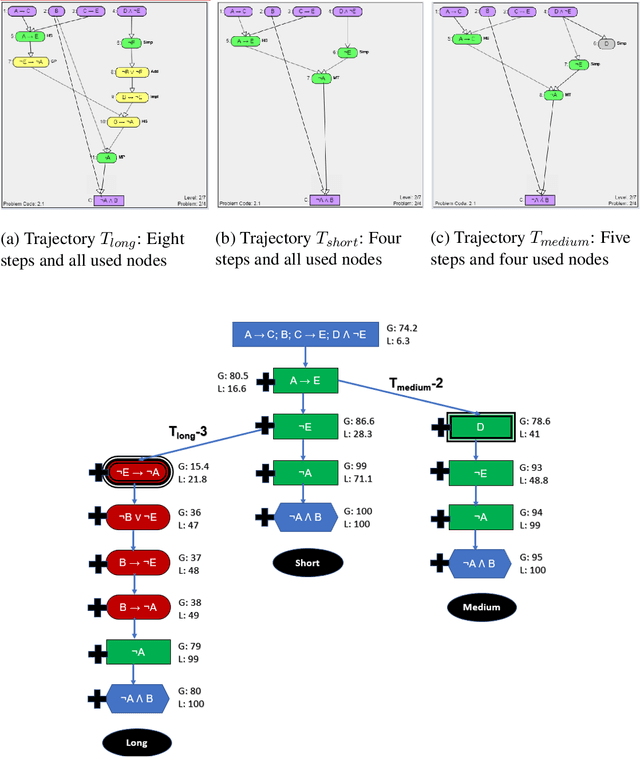

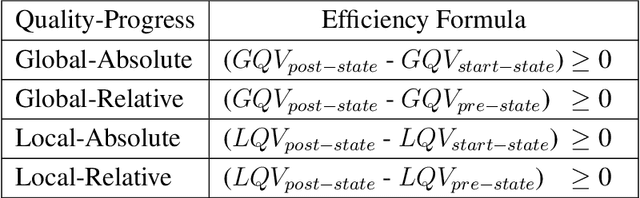

Abstract: Research on intelligent tutoring systems has been exploring data-driven methods to deliver effective adaptive assistance. While much work has been done to provide adaptive assistance when students seek help, they may not seek help optimally. This has led to growing interest in proactive adaptive assistance, where the tutor provides unsolicited assistance upon predictions of struggle or unproductivity. Determining when and whether to provide personalized support is a well-known challenge called the assistance dilemma. Addressing this dilemma is particularly challenging in open-ended domains, where there can be several ways to solve problems. Researchers have explored methods to determine when to proactively help students, but few of these methods have taken prior hint usage into account. In this paper, we present a novel data-driven approach to incorporate students' hint usage in predicting their need for help. We explore its impact in an intelligent tutor that deals with the open-ended and well-structured domain of logic proofs. We present a controlled study to investigate the impact of an adaptive hint policy based on predictions of HelpNeed that incorporate students' hint usage. We show empirical evidence that such a policy can save students a significant amount of time in training and lead to improved posttest results, when compared to a control without proactive interventions. We also show that incorporating students' hint usage significantly improves the adaptive hint policy's efficacy in predicting students' HelpNeed, thereby reducing training unproductivity, reducing possible help avoidance, and increasing possible help appropriateness (a higher chance of receiving help when it was likely to be needed). We conclude with suggestions on the domains that can benefit from this approach as well as the requirements for adoption.

Avoiding Help Avoidance: Using Interface Design Changes to Promote Unsolicited Hint Usage in an Intelligent Tutor

Oct 13, 2020

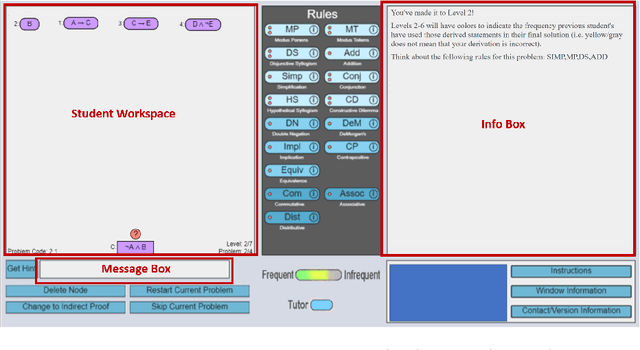

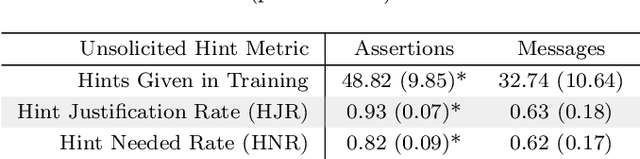

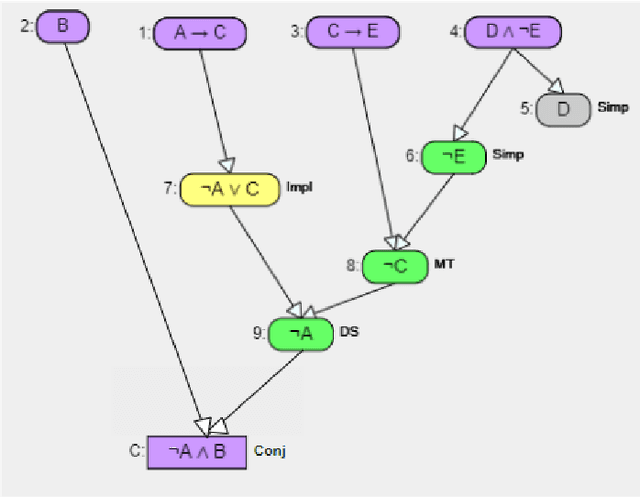

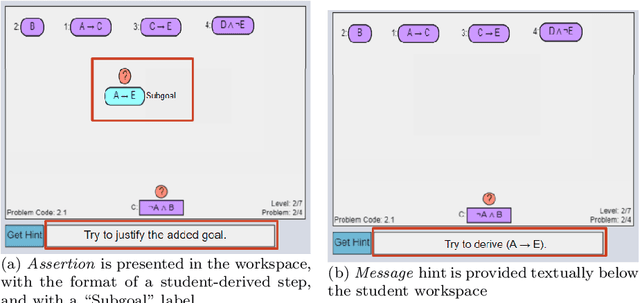

Abstract: Within intelligent tutoring systems, considerable research has investigated hints, including how to generate data-driven hints, what hint content to present, and when to provide hints for optimal learning outcomes. However, less attention has been paid to how hints are presented. In this paper, we propose a new hint delivery mechanism called "Assertions" for providing unsolicited hints in a data-driven intelligent tutor. Assertions are partially-worked example steps designed to appear within a student workspace, and in the same format as student-derived steps, to show students a possible subgoal leading to the solution. We hypothesized that Assertions can help address the well-known hint avoidance problem. In systems that only provide hints upon request, hint avoidance results in students not receiving hints when they are needed. Our unsolicited Assertions do not seek to improve student help-seeking, but rather seek to ensure students receive the help they need. We contrast Assertions with Messages, which are text-based, unsolicited hints that appear after student inactivity. Our results show that Assertions significantly increase unsolicited hint usage compared to Messages. Further, they show a significant aptitude-treatment interaction between Assertions and prior proficiency, with Assertions leading students with low prior proficiency to generate shorter (more efficient) posttest solutions faster. We also present a clustering analysis that shows patterns of productive persistence among students with low prior knowledge when the tutor provides unsolicited help in the form of Assertions. Overall, this work provides encouraging evidence that hint presentation can significantly impact how students use hints, and that using Assertions can be an effective way to address help avoidance.

Extending the Hint Factory for the assistance dilemma: A novel, data-driven HelpNeed Predictor for proactive problem-solving help

Oct 08, 2020

Abstract: Determining when and whether to provide personalized support is a well-known challenge called the assistance dilemma. A core problem in solving the assistance dilemma is the need to discover when students are unproductive so that the tutor can intervene. Such a task is particularly challenging for open-ended domains, even those that are well-structured with defined principles and goals. In this paper, we present a set of data-driven methods to classify, predict, and prevent unproductive problem-solving steps in the well-structured open-ended domain of logic. This approach leverages and extends the Hint Factory, a set of methods that uses prior student solution attempts to build data-driven intelligent tutors. We present a HelpNeed classification that uses prior student data to determine when students are likely to be unproductive and need help learning optimal problem-solving strategies. We present a controlled study to determine the impact of an Adaptive pedagogical policy that provides proactive hints at the start of each step based on the outcomes of our HelpNeed predictor: productive vs. unproductive. Our results show that the students in the Adaptive condition exhibited better training behaviors, with lower help avoidance and higher help appropriateness (a higher chance of receiving help when it was likely to be needed), as measured using the HelpNeed classifier, when compared to the Control. Furthermore, the results show that the students who received Adaptive hints based on HelpNeed predictions during training significantly outperformed their Control peers on the posttest, with the former producing shorter, more optimal solutions in less time. We conclude with suggestions on how these HelpNeed methods could be applied in other well-structured open-ended domains.
