Md. Rafat Rahman Tushar

A Memory Efficient Deep Reinforcement Learning Approach For Snake Game Autonomous Agents

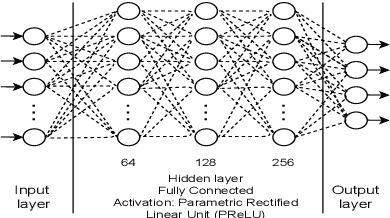

Jan 27, 2023

Abstract: To perform well, Deep Reinforcement Learning (DRL) methods require significant memory resources and computation time. These systems sometimes also need additional environment information to achieve a good reward. For many applications and devices, however, reducing memory usage and computation time matters more than achieving the maximum reward. This paper presents a modified DRL method that performs reasonably well with compressed imagery data, requires no additional environment information, and uses less memory and time. We have designed a lightweight Convolutional Neural Network (CNN) with a variant of the Q-network that efficiently takes preprocessed image data as input and uses less memory. Furthermore, we use a simple reward mechanism and a small experience replay memory so as to provide only the minimum necessary information. Our modified DRL method enables our autonomous agent to play Snake, a classical control game. The results show that our model can achieve performance similar to other DRL methods.

* AICT 2022
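The two memory-saving ideas named in the abstract above, preprocessed (compressed) image input and a small experience replay memory, can be illustrated with a minimal sketch. This is not the authors' implementation: the names `preprocess` and `ReplayMemory`, the stride-based downsampling, the channel-average grayscale conversion, and the capacity value are all assumptions chosen for illustration.

```python
import random
from collections import deque


def preprocess(frame, stride=2):
    """Shrink an RGB frame to grayscale bytes (hypothetical preprocessing).

    `frame` is a list of rows, each row a list of (r, g, b) tuples with
    channel values in 0..255. Every `stride`-th row and column is kept,
    and each kept pixel is stored as one byte (the channel average).
    """
    return bytes(
        (r + g + b) // 3
        for row in frame[::stride]
        for (r, g, b) in row[::stride]
    )


class ReplayMemory:
    """Fixed-capacity experience replay; the oldest transitions are dropped."""

    def __init__(self, capacity):
        # deque(maxlen=...) discards the oldest entry once capacity is reached
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement, capped at the current size
        k = min(batch_size, len(self.buffer))
        return random.sample(list(self.buffer), k)

    def __len__(self):
        return len(self.buffer)
```

Storing each state as a compact `bytes` object and capping the buffer keeps memory use bounded at roughly `capacity` times the size of two preprocessed frames, which is the kind of trade-off the abstract describes.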

Autonomous Warehouse Robot using Deep Q-Learning

Feb 21, 2022

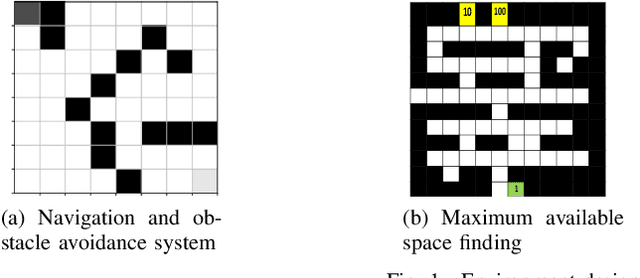

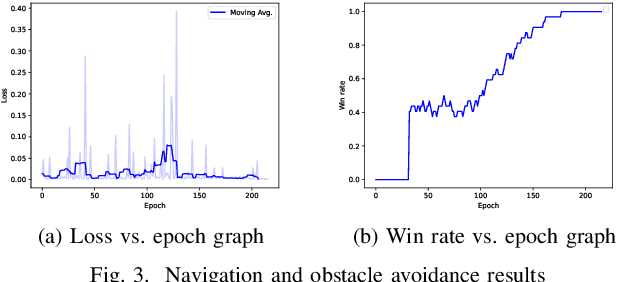

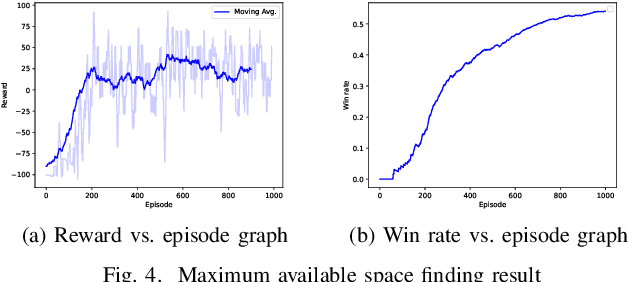

Abstract: In warehouses, specialized agents need to navigate, avoid obstacles and maximize the use of space in the warehouse environment. Due to the unpredictability of these environments, reinforcement learning approaches can be applied to complete these tasks. In this paper, we propose using Deep Reinforcement Learning (DRL) to address the robot navigation and obstacle avoidance problem and traditional Q-learning with minor variations to maximize the use of space for product placement. We first investigate the problem for the single robot case. Next, based on the single robot model, we extend our system to the multi-robot case. We use a strategic variation of Q-tables to perform multi-agent Q-learning. We successfully test the performance of our model in a 2D simulation environment for both the single and multi-robot cases.
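For readers unfamiliar with the traditional Q-learning that this abstract builds on, the standard tabular update rule can be sketched as follows. This shows only the textbook rule, not the paper's "minor variations" or its multi-agent Q-table scheme, which the abstract does not specify. The function name `q_update`, the grid-cell states, the action labels, and the values of `alpha` and `gamma` are assumptions for illustration.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.9):
    """Apply one standard tabular Q-learning update and return the new value.

    `q` maps (state, action) pairs to estimated returns. `alpha` is the
    learning rate and `gamma` the discount factor (illustrative values).
    """
    # Value of the greedy action in the next state
    best_next = max(q[(next_state, a)] for a in actions)
    # Temporal-difference target and incremental update
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Usage: a robot at grid cell (0, 0) moves right into cell (0, 1)
q_table = defaultdict(float)           # unseen pairs default to 0.0
q_table[((0, 1), "right")] = 1.0       # pretend this value was learned earlier
new_value = q_update(q_table, (0, 0), "right", 0.0, (0, 1),
                     actions=["left", "right"])
```

A dictionary-backed table like this only stores the state-action pairs that are actually visited, which suits small 2D grid simulations.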
