Mattia Vaccari

The Classification of Optical Galaxy Morphology Using Unsupervised Learning Techniques

Jun 13, 2022

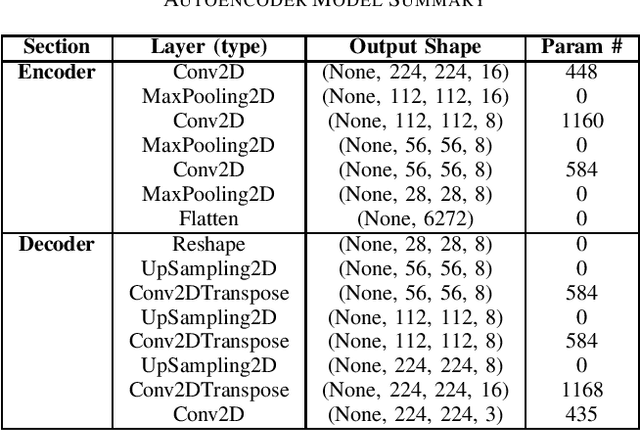

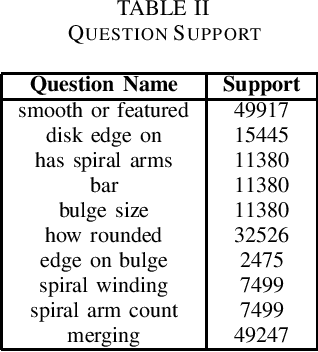

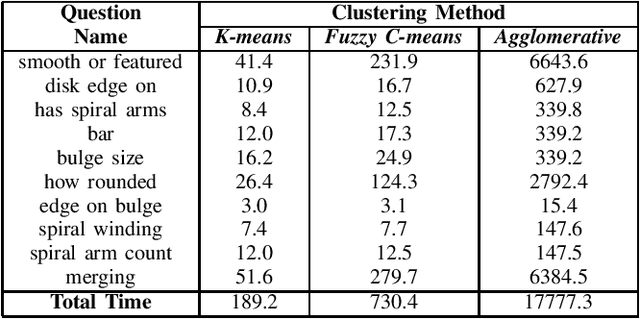

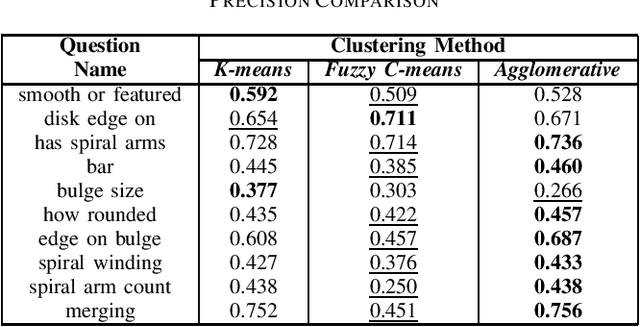

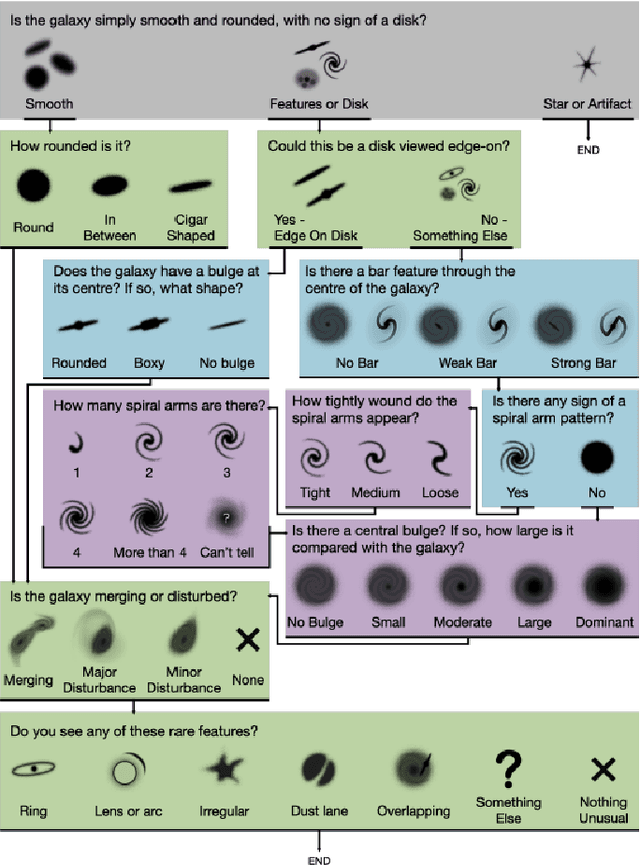

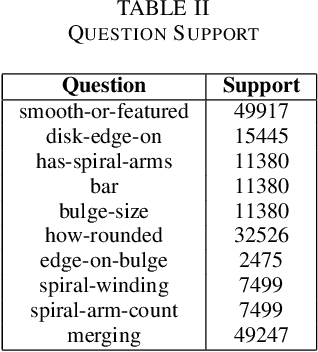

Abstract: The advent of large-scale, data-intensive astronomical surveys has called into question the viability of human-based galaxy morphology classification. Put simply, more astronomical data is being produced than scientists can visually label. Attempts have been made to crowd-source this work by recruiting volunteers from the general public. However, even these efforts will soon fail to keep up with the data produced by modern surveys. Unsupervised learning techniques do not require existing labels to classify data and could pave the way to unplanned discoveries. Therefore, this paper aims to implement unsupervised learning algorithms to classify the Galaxy Zoo DECaLS dataset without human supervision. First, a convolutional autoencoder was implemented as a feature extractor. The extracted features were then clustered via k-means, fuzzy c-means and agglomerative clustering to provide classifications. The results were compared to the volunteer classifications of the Galaxy Zoo DECaLS dataset. Agglomerative clustering generally produced the best results; however, the performance gain over k-means clustering was not significant. With the appropriate optimizations, this approach could be used to provide classifications for the better-performing Galaxy Zoo DECaLS decision tree questions. Ultimately, this unsupervised learning approach provided valuable insights and results that were useful to scientists.
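The clustering and comparison steps described in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: it covers only k-means (not fuzzy c-means or agglomerative clustering), it uses synthetic 2-D points as a stand-in for autoencoder features, and the farthest-first initialization, the `majority_vote_accuracy` helper and the "smooth"/"featured" labels are assumptions introduced here for illustration.

```python
import random
from collections import Counter


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_first_init(points, k):
    """Deterministic initialization (an assumption, chosen for reproducibility):
    start from the first point, then repeatedly add the point that is
    farthest from all centroids chosen so far."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(
            points, key=lambda p: min(squared_distance(p, c) for c in centroids)
        )
        centroids.append(list(farthest))
    return centroids


def kmeans(points, k, iterations=50):
    """Plain k-means: alternate assignment and centroid update until stable."""
    centroids = farthest_first_init(points, k)
    assignments = None
    for _ in range(iterations):
        new_assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # converged: no point changed cluster
        assignments = new_assignments
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignments


def majority_vote_accuracy(assignments, labels):
    """Map each cluster to its most common reference label, then score
    the fraction of points whose label matches their cluster's label."""
    majority = {}
    for cluster in set(assignments):
        members = [l for a, l in zip(assignments, labels) if a == cluster]
        majority[cluster] = Counter(members).most_common(1)[0][0]
    hits = sum(majority[a] == l for a, l in zip(assignments, labels))
    return hits / len(labels)


# Synthetic stand-in for extracted features: two well-separated groups.
rng = random.Random(1)
features = [[rng.uniform(-0.5, 0.5), rng.uniform(-0.5, 0.5)] for _ in range(20)]
features += [[10 + rng.uniform(-0.5, 0.5), 10 + rng.uniform(-0.5, 0.5)] for _ in range(20)]
labels = ["smooth"] * 20 + ["featured"] * 20  # hypothetical reference labels

assignments = kmeans(features, k=2)
accuracy = majority_vote_accuracy(assignments, labels)
print(accuracy)
```

Because clusters carry no names of their own, some mapping from clusters to reference labels is needed before any comparison with volunteer classifications; majority voting is one simple choice, and real features would be far less cleanly separated than this toy data.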

A Comparison of Deep Learning Architectures for Optical Galaxy Morphology Classification

Nov 08, 2021

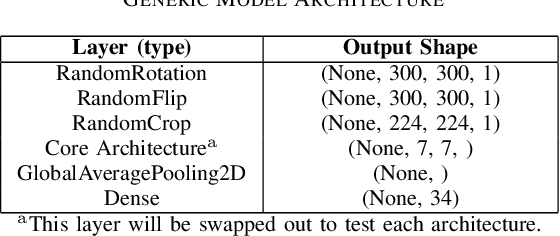

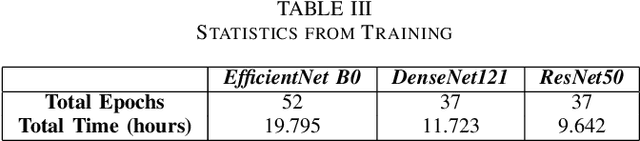

Abstract: The classification of galaxy morphology plays a crucial role in understanding galaxy formation and evolution. Traditionally, this process has been done manually. The emergence of deep learning techniques has made it possible to automate this process. As such, this paper offers a comparison of deep learning architectures to determine which is best suited for optical galaxy morphology classification. Adapting the model training method proposed by Walmsley et al. (2021), the Zoobot Python library is used to train models to predict the Galaxy Zoo DECaLS decision tree responses made by volunteers, using EfficientNet B0, DenseNet121 and ResNet50 as core model architectures. The predicted results are then used to generate accuracy metrics per decision tree question to determine architecture performance. DenseNet121 was found to produce the best results in terms of accuracy, with a reasonable training time. In future, further testing with more deep learning architectures could prove beneficial.
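The idea of an accuracy metric per decision tree question can be sketched as follows. This is an illustrative assumption rather than the paper's exact metric, and it does not use the Zoobot API: each galaxy is represented as a dictionary of vote fractions per question, a prediction counts as correct when its top answer matches the volunteers' top answer, and the question names, answer names and numbers are hypothetical.

```python
def per_question_accuracy(predicted, observed):
    """Return {question: accuracy} over galaxies for which the question was asked.

    predicted / observed: lists (one entry per galaxy) of dicts mapping
    question -> {answer: vote fraction}. The top-voted answer is compared.
    """
    correct, total = {}, {}
    for pred_galaxy, obs_galaxy in zip(predicted, observed):
        for question, obs_fractions in obs_galaxy.items():
            if question not in pred_galaxy:
                continue  # no prediction for this question
            obs_answer = max(obs_fractions, key=obs_fractions.get)
            pred_fractions = pred_galaxy[question]
            pred_answer = max(pred_fractions, key=pred_fractions.get)
            total[question] = total.get(question, 0) + 1
            correct[question] = correct.get(question, 0) + (pred_answer == obs_answer)
    return {q: correct[q] / total[q] for q in total}


# Hypothetical volunteer vote fractions for four galaxies.
observed = [
    {"smooth-or-featured": {"smooth": 0.8, "featured": 0.2}},
    {"smooth-or-featured": {"smooth": 0.1, "featured": 0.9},
     "has-spiral-arms": {"yes": 0.7, "no": 0.3}},
    {"smooth-or-featured": {"smooth": 0.3, "featured": 0.7},
     "has-spiral-arms": {"yes": 0.2, "no": 0.8}},
    {"smooth-or-featured": {"smooth": 0.6, "featured": 0.4}},
]

# Hypothetical model outputs for the same galaxies.
predicted = [
    {"smooth-or-featured": {"smooth": 0.7, "featured": 0.3}},
    {"smooth-or-featured": {"smooth": 0.2, "featured": 0.8},
     "has-spiral-arms": {"yes": 0.6, "no": 0.4}},
    {"smooth-or-featured": {"smooth": 0.55, "featured": 0.45},
     "has-spiral-arms": {"yes": 0.1, "no": 0.9}},
    {"smooth-or-featured": {"smooth": 0.9, "featured": 0.1}},
]

scores = per_question_accuracy(predicted, observed)
print(scores)  # {'smooth-or-featured': 0.75, 'has-spiral-arms': 1.0}
```

Scoring each question only over the galaxies for which it was asked reflects the decision tree structure, where follow-up questions apply to a subset of galaxies; running the same function on each architecture's predictions would give comparable per-question figures.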
