Matti Krueger

Human-Vehicle Cooperation on Prediction-Level: Enhancing Automated Driving with Human Foresight

Apr 14, 2021

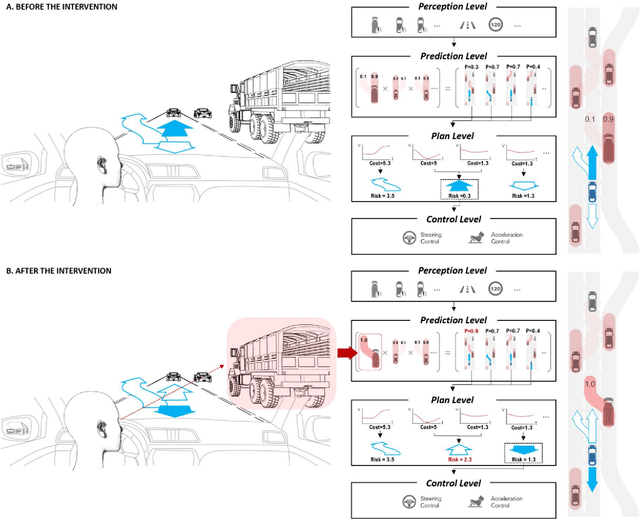

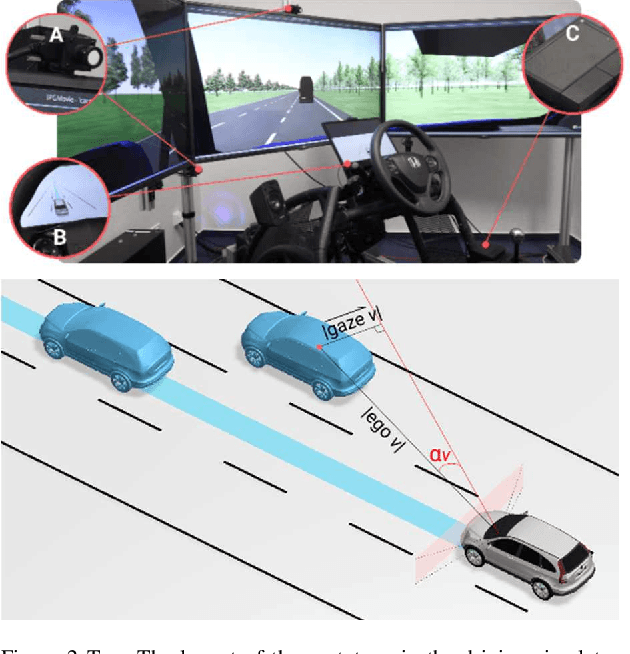

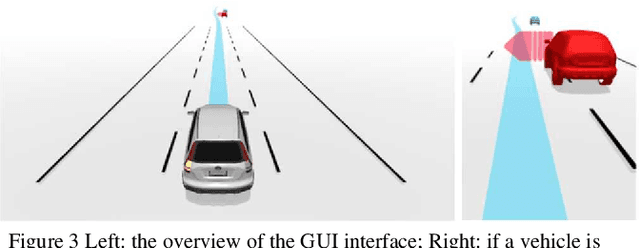

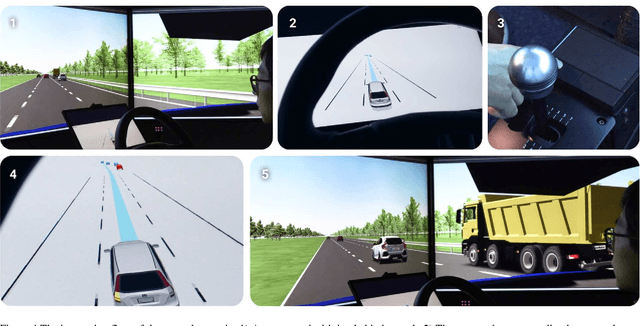

Abstract: To maximize safety and driving comfort, autonomous driving systems can benefit from foresighted action choices that take different potential scenario developments into account. While artificial scene prediction methods are progressing rapidly, an attentive human driver may still be able to identify relevant contextual features that the system does not adequately consider, or that the driver does not trust the system to handle appropriately. We implement an approach that lets a human driver quickly and intuitively supplement an autonomous driving system's scene predictions via gaze. We illustrate the feasibility of this approach in an existing autonomous driving system running a variety of scenarios in a simulator. Furthermore, we designed and integrated a Graphical User Interface (GUI) to enhance trust in the system and its explainability. Using such cooperatively augmented scenario predictions has the potential to improve a system's foresighted driving abilities and to make autonomous driving more trustworthy, comfortable, and personalized.
