Matthieu Lê

Recency Dropout for Recurrent Recommender Systems

Jan 26, 2022

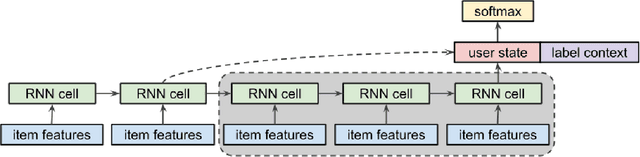

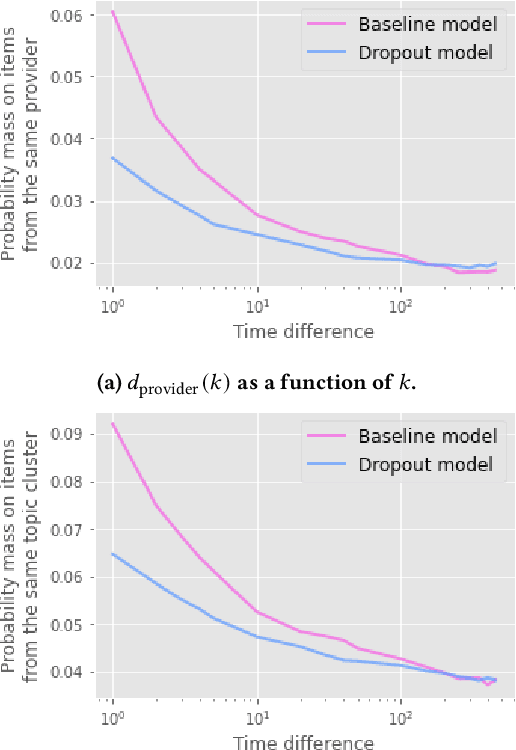

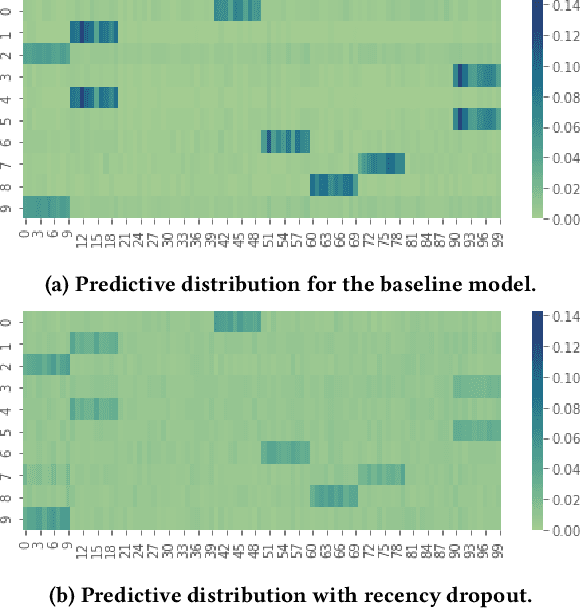

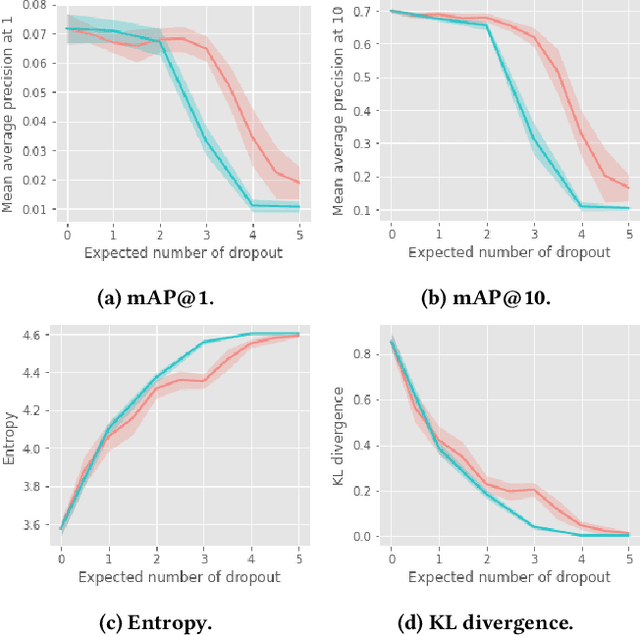

Abstract:Recurrent recommender systems have been successful in capturing the temporal dynamics in users' activity trajectories. However, recurrent neural networks (RNNs) are known to have difficulty learning long-term dependencies. As a consequence, RNN-based recommender systems tend to overly focus on short-term user interests. This is referred to as the recency bias, which could negatively affect the long-term user experience as well as the health of the ecosystem. In this paper, we introduce the recency dropout technique, a simple yet effective data augmentation technique to alleviate the recency bias in recurrent recommender systems. We demonstrate the effectiveness of recency dropout in various experimental settings including a simulation study, offline experiments, as well as live experiments on a large-scale industrial recommendation platform.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge