Matthias Vormann

Deep learning-based denoising streamed from mobile phones improves speech-in-noise understanding for hearing aid users

Aug 22, 2023

Abstract: The hearing loss of almost half a billion people is commonly treated with hearing aids. However, current hearing aids often do not work well in real-world noisy environments. We present a deep learning-based denoising system that runs in real time on iPhone 7 and Samsung Galaxy S10 (25 ms algorithmic latency). The denoised audio is streamed to the hearing aid, resulting in a total delay of around 75 ms. In tests with hearing aid users having moderate to severe hearing loss, our denoising system improves audio across three tests: 1) listening for subjective audio ratings, 2) listening for objective speech intelligibility, and 3) live conversations in a noisy environment for subjective ratings. Subjective ratings increase by more than 40% for both the listening test and the live conversation, compared to a fitted hearing aid as a baseline. Speech reception thresholds (SRT), which measure speech understanding in noise, improve by 1.6 dB. Ours is the first denoising system that is implemented on a mobile device and streamed directly to users' hearing aids, using only a single channel as audio input, while improving user satisfaction on all tested aspects, including speech intelligibility. This includes an overall preference for the denoised and streamed signal over the hearing aid: users accept the higher latency in exchange for the significant improvement in speech understanding.

Vehicle Noise: Comparison of Loudness Ratings in the Field and the Laboratory

Apr 28, 2022

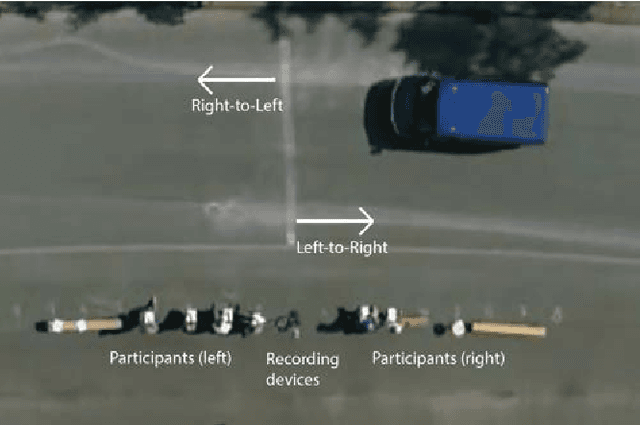

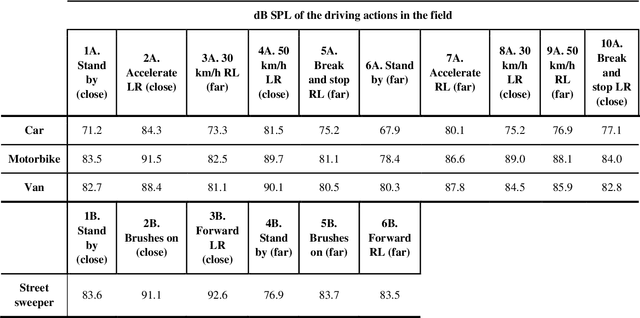

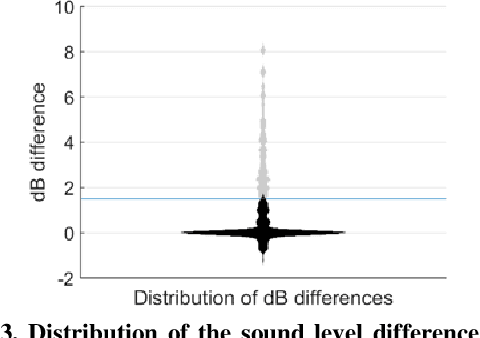

Abstract: Objective: Distorted loudness perception is one of the main complaints of hearing aid users. Being able to measure loudness perception correctly in the clinic is essential for fitting hearing aids. For this, experiments in the clinic should reflect and capture loudness perception as it occurs in everyday-life situations. Little research has been done comparing loudness perception in the field and in the laboratory. Design: Participants rated, in the field and in the laboratory, the loudness of 36 driving actions performed by four different vehicles. The field measurements were done in a restricted street and recorded with a 360° camera and a tetrahedral microphone. The recorded stimuli, which are openly accessible, were presented in three different conditions in the laboratory: 360° video recordings with a head-mounted display, video recordings with a desktop monitor, and audio-only. Sample: Thirteen normal-hearing and 18 hearing-impaired participants took part in the study. Results: The driving actions were rated significantly louder in the laboratory than in the field for the audio-only condition. These loudness rating differences were bigger for louder sounds in two laboratory conditions, i.e., the higher the sound level of a driving action, the more likely it was to be rated louder in the laboratory. There were no significant differences in the loudness ratings between the three laboratory conditions or between groups. Conclusions: The results of this experiment further underline the importance of increasing realism and immersion when measuring loudness in the clinic.
