Dirk Oetting

Integrating audiological datasets via federated merging of Auditory Profiles

Jul 30, 2024

Abstract:Audiological datasets contain valuable knowledge about hearing loss in patients, which can be uncovered using data-driven, federated learning techniques. Our previous approach summarized patient information from one audiological dataset into distinct Auditory Profiles (APs). To cover the complete audiological patient population, however, patient patterns must be analyzed across multiple, separated datasets, and finally, be integrated into a combined set of APs. This study aimed at extending the existing profile generation pipeline with an AP merging step, enabling the combination of APs from different datasets based on their similarity across audiological measures. The 13 previously generated APs (N_A=595) were merged with 31 newly generated APs from a second dataset (N_B=1272) using a similarity score derived from the overlapping densities of common features across the two datasets. To ensure clinical applicability, random forest models were created for various scenarios, encompassing different combinations of audiological measures. A new set with 13 combined APs is proposed, providing well-separable profiles, which still capture detailed patient information from various test outcome combinations. The classification performance across these profiles is satisfactory. The best performance was achieved using a combination of loudness scaling, audiogram and speech test information, while single measures performed worst. The enhanced profile generation pipeline demonstrates the feasibility of combining APs across datasets, which should generalize to all datasets and could lead to an interpretable population-based profile set in the future. The classification models maintain clinical applicability. Hence, even if only smartphone-based measures are available, a given patient can be classified into an appropriate AP.
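The abstract does not spell out how the similarity score is computed. As a rough illustration of the general idea only, the sketch below scores two profiles by the overlap of histogram-estimated densities, averaged over the features they share. The function names, the histogram estimator, the bin count, and the averaging step are all assumptions made for this example and are not taken from the paper.

```python
import numpy as np


def density_overlap(a, b, bins=20):
    """Overlap of two histogram-estimated densities on a shared grid.

    Returns a value in [0, 1]: 0 for disjoint samples, 1 for identical
    histograms. The histogram estimator is an assumption of this sketch.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    lo = min(a.min(), b.min())
    hi = max(a.max(), b.max())
    if lo == hi:
        # All values are identical, so the two distributions coincide.
        return 1.0
    edges = np.linspace(lo, hi, bins + 1)
    pa, _ = np.histogram(a, bins=edges)
    pb, _ = np.histogram(b, bins=edges)
    pa = pa / pa.sum()  # normalize counts to probabilities
    pb = pb / pb.sum()
    return float(np.minimum(pa, pb).sum())


def profile_similarity(profile_a, profile_b, bins=20):
    """Mean density overlap over the features present in both profiles.

    Each profile is a dict mapping a feature name to the measured values
    of the patients in that profile (a hypothetical layout).
    """
    common = sorted(set(profile_a) & set(profile_b))
    if not common:
        raise ValueError("profiles share no features")
    overlaps = [density_overlap(profile_a[f], profile_b[f], bins) for f in common]
    return float(np.mean(overlaps))
```

Restricting the score to the common features mirrors the situation described in the abstract, where the two datasets do not contain the same set of audiological measures.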

Vehicle Noise: Comparison of Loudness Ratings in the Field and the Laboratory

Apr 28, 2022

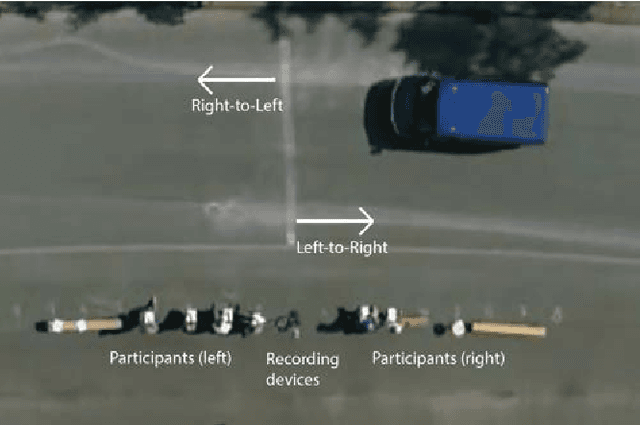

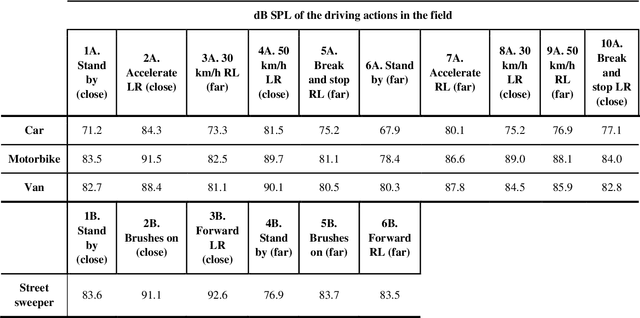

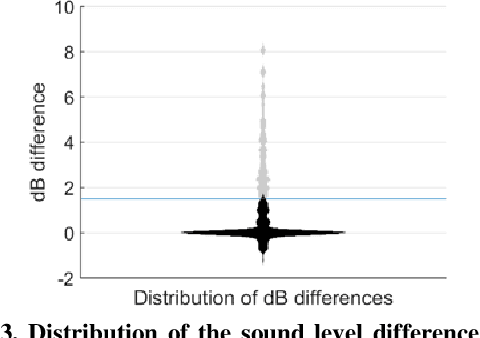

Abstract:Objective: Distorted loudness perception is one of the main complaints of hearing aid users. Being able to measure loudness perception correctly in the clinic is essential for fitting hearing aids. For this, experiments in the clinic should be able to reflect and capture loudness perception as in everyday-life situations. Little research has been done comparing loudness perception in the field and in the laboratory. Design: Participants rated the loudness of 36 driving actions performed by four different vehicles, both in the field and in the laboratory. The field measurements were done in a restricted street and recorded with a 360° camera and a tetrahedral microphone. The recorded stimuli, which are openly accessible, were presented in three different conditions in the laboratory: 360° video recordings with a head-mounted display, video recordings with a desktop monitor, and audio-only. Sample: Thirteen normal-hearing participants and 18 hearing-impaired participants took part in the study. Results: The driving actions were rated significantly louder in the laboratory than in the field for the audio-only condition. These loudness rating differences were bigger for louder sounds in two laboratory conditions, i.e., the higher the sound level of a driving action was, the more likely it was to be rated louder in the laboratory. There were no significant differences in the loudness ratings between the three laboratory conditions and between groups. Conclusions: The results of this experiment further underline the importance of increasing the realism and immersion when measuring loudness in the clinic.
