Matthew Malek-Podjaski

Adversarial Attention for Human Motion Synthesis

Apr 25, 2022

Abstract: Analysing human motion is a core topic of interest for many disciplines, from Human-Computer Interaction to entertainment, Virtual Reality and healthcare. Deep learning has achieved impressive results in capturing human pose in real time. However, because of high inter-subject variability and the very limited specialised datasets available in fields such as healthcare, human motion analysis models often fail to generalise to data from unseen subjects. Acquiring human motion datasets is also highly time-consuming, challenging, and expensive. Hence, human motion synthesis is a crucial research problem within deep learning and computer vision. We present a novel method for controllable human motion synthesis by applying attention-based probabilistic deep adversarial models with end-to-end training. We show that we can generate synthetic human motion over both short and long time horizons through the use of adversarial attention. Furthermore, we show that we can improve the classification performance of deep learning models in cases where there is inadequate real data, by supplementing existing datasets with synthetic motions.

Preserving Privacy in Human-Motion Affect Recognition

May 09, 2021

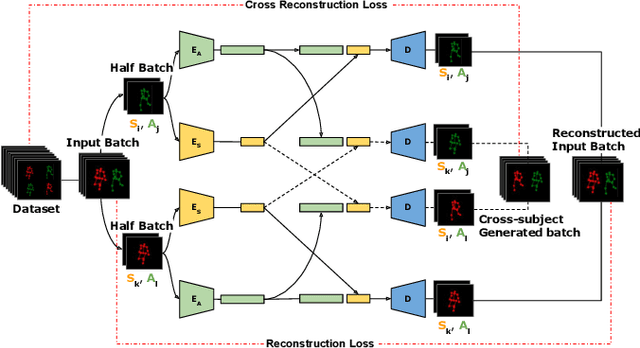

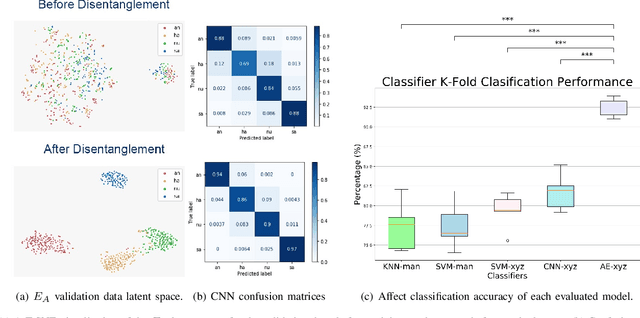

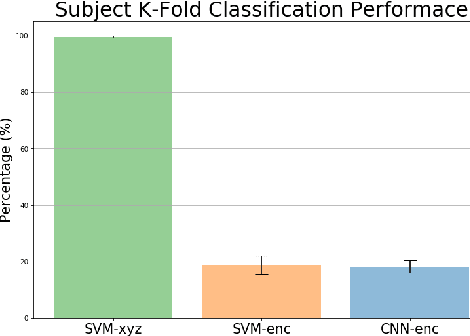

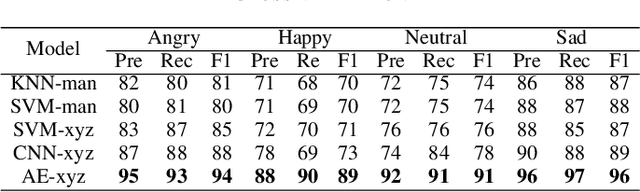

Abstract: Human motion is a biomarker used extensively in clinical analysis to monitor the progression of neurological diseases and mood disorders. Since perceptions of emotions are also interleaved with body posture and movements, emotion recognition from human gait can be used to quantitatively monitor mood changes that are often related to neurological disorders. Many existing solutions use shallow machine learning models with raw positional data or manually extracted features to achieve this. However, gait is composed of many highly expressive characteristics that can be used to identify human subjects, and most solutions fail to address this, disregarding the subject's privacy. This work evaluates the effectiveness of existing methods at recognising emotions using both 3D temporal joint signals and manually extracted features. We also show that this data can easily be exploited to expose a subject's identity. To this end, we propose a cross-subject transfer learning technique for training a multi-encoder autoencoder deep neural network to learn disentangled latent representations of human motion features. By disentangling subject biometrics from the gait data, we show that the subject's privacy is preserved while affect recognition performance exceeds that of traditional methods.
