Preserving Privacy in Human-Motion Affect Recognition


May 09, 2021

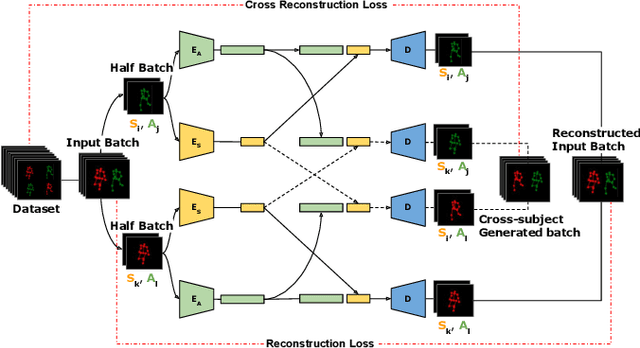

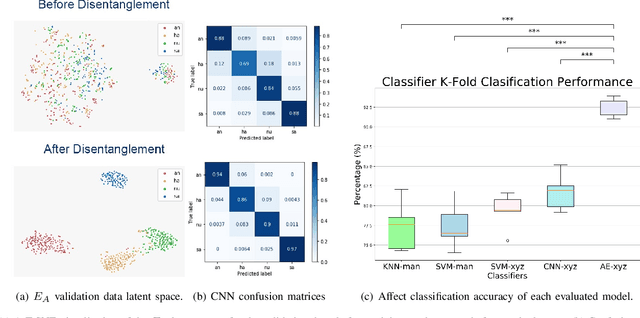

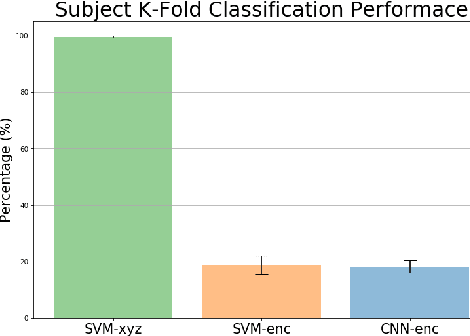

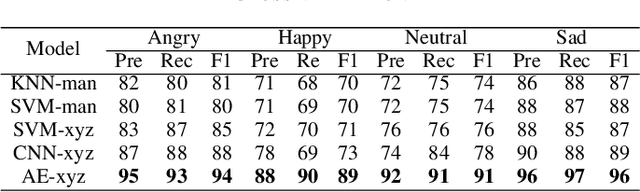

Human motion is a biomarker used extensively in clinical analysis to monitor the progression of neurological diseases and mood disorders. Because the perception of emotion is closely intertwined with body posture and movement, emotion recognition from human gait can be used to quantitatively monitor the mood changes that are often related to neurological disorders. Many existing solutions use shallow machine learning models with raw positional data or manually extracted features to achieve this. However, gait is composed of many highly expressive characteristics that can be used to identify human subjects, and most solutions fail to address this, disregarding the subject's privacy. This work evaluates the effectiveness of existing methods at recognising emotions using both 3D temporal joint signals and manually extracted features. We also show that this data can easily be exploited to expose a subject's identity. To address this, we propose a cross-subject transfer learning technique for training a multi-encoder autoencoder deep neural network to learn disentangled latent representations of human motion features. By disentangling subject biometrics from the gait data, we show that the subject's privacy is preserved while affect recognition performance exceeds that of traditional methods.
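The idea of a multi-encoder autoencoder with disentangled latents can be illustrated with a minimal NumPy sketch. This is not the paper's architecture, training procedure, or loss, none of which are given in the abstract. It only shows the general shape of the idea: two encoders map the same gait features to separate "affect" and "subject" codes, a shared decoder reconstructs from their concatenation, and a cross-subject reconstruction pairs one sample's affect code with another sample's subject code. All names, layer sizes, and the feature dimension (`FEATURES`, `AFFECT_DIM`, `SUBJECT_DIM`, `cross_subject_reconstruct`) are assumptions made for illustration, and the weights are random rather than trained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
FEATURES = 48      # e.g. 16 joints x 3 coordinates for one frame
AFFECT_DIM = 8     # size of the affect latent code
SUBJECT_DIM = 8    # size of the subject (biometric) latent code


def linear(in_dim, out_dim):
    """Return randomly initialised (weights, bias) for one dense layer."""
    return rng.standard_normal((in_dim, out_dim)) * 0.1, np.zeros(out_dim)


def dense(x, params):
    """Apply a dense layer followed by a tanh non-linearity."""
    w, b = params
    return np.tanh(x @ w + b)


# Two separate encoders look at the same input features.
affect_encoder = linear(FEATURES, AFFECT_DIM)
subject_encoder = linear(FEATURES, SUBJECT_DIM)
# One shared decoder reconstructs from the concatenated codes.
decoder = linear(AFFECT_DIM + SUBJECT_DIM, FEATURES)


def encode(x):
    """Return the (affect, subject) latent codes for a batch of features."""
    return dense(x, affect_encoder), dense(x, subject_encoder)


def decode(z_affect, z_subject):
    """Reconstruct features from the two codes (linear output layer)."""
    w, b = decoder
    return np.concatenate([z_affect, z_subject], axis=-1) @ w + b


def cross_subject_reconstruct(x_a, x_b):
    """Decode using x_a's affect code and x_b's subject code."""
    z_affect_a, _ = encode(x_a)
    _, z_subject_b = encode(x_b)
    return decode(z_affect_a, z_subject_b)


# Two batches standing in for gait features from two different subjects.
x_a = rng.standard_normal((4, FEATURES))
x_b = rng.standard_normal((4, FEATURES))

z_affect, z_subject = encode(x_a)
swapped = cross_subject_reconstruct(x_a, x_b)
```

In a trained model of this kind, only the affect code would be passed to a downstream emotion classifier, so the subject code, and with it the identifying information, could be withheld. Whether the codes are actually disentangled depends entirely on the training objective, which this sketch does not include.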
