Matej Ulicny

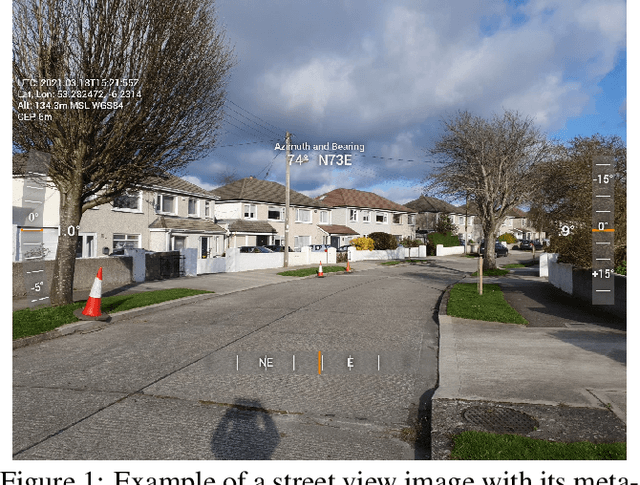

Combining geolocation and height estimation of objects from street level imagery

May 14, 2023

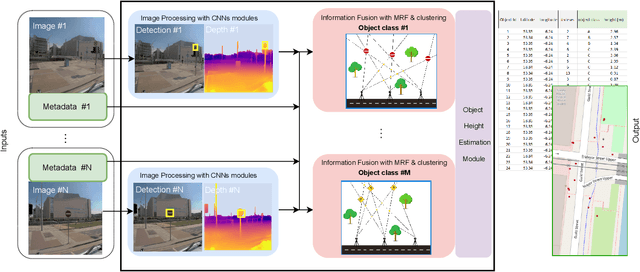

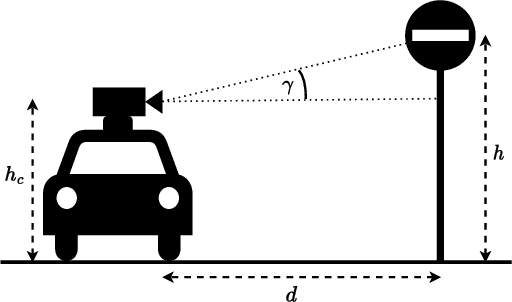

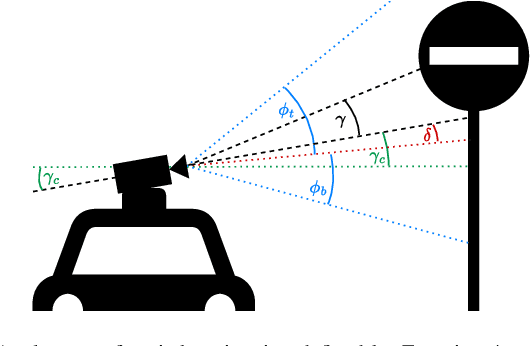

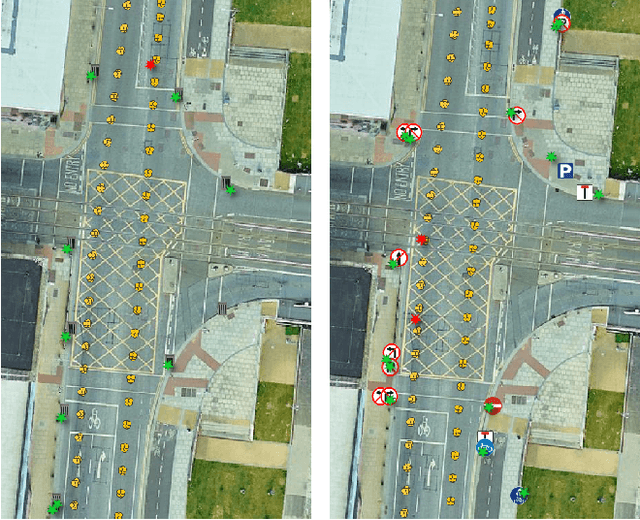

Abstract: We propose a pipeline for combined multi-class object geolocation and height estimation from street-level RGB imagery, which is treated as the only available input modality. Our solution is formulated as a Markov Random Field optimization with deterministic output. The proposed technique uses image metadata along with the image-plane coordinates of objects detected by a custom-trained Convolutional Neural Network. Computing object height in addition to object geolocation has a negligible effect on the overall computational cost. Accuracy is demonstrated experimentally for water drains and road signs, for which we achieve an average elevation estimation error below 20 cm.
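The geometry behind joint geolocation and height estimation can be illustrated with a minimal sketch. This is not the Markov Random Field formulation from the abstract: it swaps in plain two-view triangulation in a local metric frame, and every name, parameter, and coordinate convention below is a hypothetical chosen for illustration. It assumes the camera positions, viewing bearings, and pitch angle to the object are already known from image metadata and detections.

```python
import math


def intersect_bearings(cam_a, bearing_a, cam_b, bearing_b):
    """Intersect two horizontal viewing rays.

    Positions are (east, north) in metres; bearings are degrees clockwise
    from north. Returns the (east, north) intersection, or None when the
    rays are parallel or meet behind a camera.
    """
    dax, day = math.sin(math.radians(bearing_a)), math.cos(math.radians(bearing_a))
    dbx, dby = math.sin(math.radians(bearing_b)), math.cos(math.radians(bearing_b))
    denom = dax * dby - day * dbx  # 2D cross product of the two directions
    if abs(denom) < 1e-9:
        return None
    ex, ey = cam_b[0] - cam_a[0], cam_b[1] - cam_a[1]
    t = (ex * dby - ey * dbx) / denom  # distance along ray A
    s = (ex * day - ey * dax) / denom  # distance along ray B
    if t < 0 or s < 0:
        return None
    return (cam_a[0] + t * dax, cam_a[1] + t * day)


def object_elevation(cam_pos, cam_height, obj_pos, pitch_deg):
    """Elevation of the object relative to the ground under the camera.

    pitch_deg is the (assumed known) vertical angle from the camera's
    horizontal plane to the object; negative values look downwards.
    """
    dist = math.hypot(obj_pos[0] - cam_pos[0], obj_pos[1] - cam_pos[1])
    return cam_height + dist * math.tan(math.radians(pitch_deg))
```

Once the horizontal position is known, the elevation costs one extra tangent evaluation per object, which is consistent in spirit with the abstract's remark that height estimation adds little computational cost.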

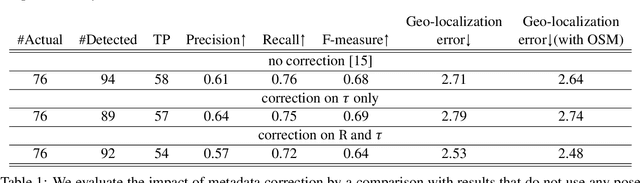

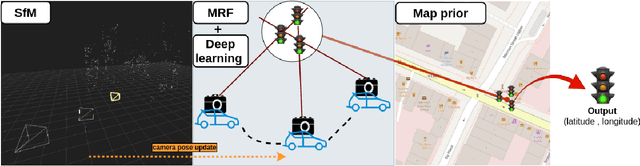

Context Aware Object Geotagging

Aug 13, 2021

Abstract: Localization of street objects from images has gained a lot of attention in recent years. We propose an approach that improves asset geolocation from street view imagery by using Structure from Motion to enhance the quality of the metadata associated with the images. The predicted object geolocation is further refined by imposing contextual geographic information extracted from OpenStreetMap. Our pipeline is validated experimentally against state-of-the-art approaches for geotagging traffic lights.

* 8 pages

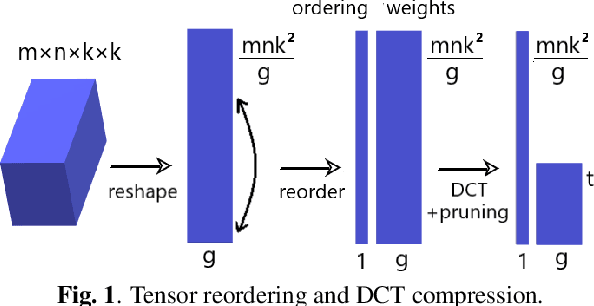

Tensor Reordering for CNN Compression

Oct 22, 2020

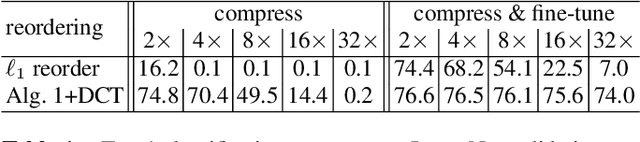

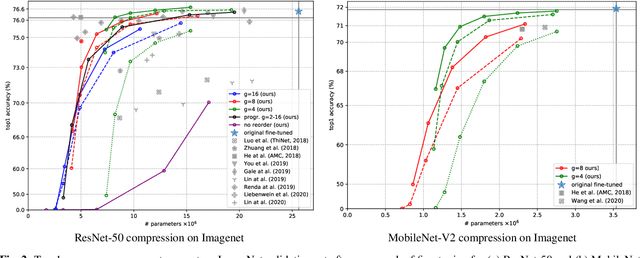

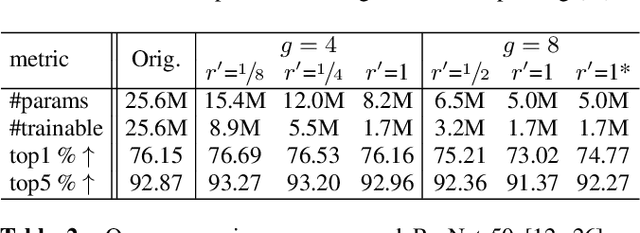

Abstract: We show how parameter redundancy in Convolutional Neural Network (CNN) filters can be effectively reduced by pruning in the spectral domain. Specifically, the representation extracted via the Discrete Cosine Transform (DCT) is more conducive to pruning than the original space. By relying on a combination of weight tensor reshaping and reordering, we achieve high levels of layer compression with only minor accuracy loss. Our approach is applied to compress pretrained CNNs, and we show that minor additional fine-tuning allows our method to recover the original model performance after a significant parameter reduction. We validate our approach on the ResNet-50 and MobileNet-V2 architectures for the ImageNet classification task.
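As a rough illustration of pruning in the DCT domain, the sketch below reshapes a convolutional weight tensor into a matrix, applies an orthonormal DCT-II along the flattened axis, keeps only the largest-magnitude coefficients, and transforms back. The flattening scheme, the global magnitude threshold, and the function names are assumptions made for this example; the abstract does not specify the paper's actual reshaping and reordering.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix of size (n, n); row u holds frequency u."""
    idx = np.arange(n)
    mat = np.cos(np.pi * (idx[None, :] + 0.5) * idx[:, None] / n)
    mat[0] *= np.sqrt(1.0 / n)
    mat[1:] *= np.sqrt(2.0 / n)
    return mat


def prune_in_dct_domain(weights: np.ndarray, keep_ratio: float):
    """Prune a (C_out, C_in, k, k) tensor by thresholding DCT coefficients.

    Returns the reconstructed weights and the number of coefficients kept.
    """
    c_out = weights.shape[0]
    flat = weights.reshape(c_out, -1)      # one row per output filter
    d = dct_matrix(flat.shape[1])
    coeffs = flat @ d.T                    # forward DCT along each row
    n_keep = max(1, int(round(keep_ratio * coeffs.size)))
    # Global magnitude threshold: the n_keep-th largest absolute value.
    threshold = np.sort(np.abs(coeffs), axis=None)[-n_keep]
    mask = np.abs(coeffs) >= threshold
    pruned = (coeffs * mask) @ d           # inverse of an orthonormal DCT
    return pruned.reshape(weights.shape), int(mask.sum())
```

Because the transform is orthonormal, the squared reconstruction error equals the energy of the discarded coefficients, so the error can be monitored directly in the spectral domain.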

Harmonic Convolutional Networks based on Discrete Cosine Transform

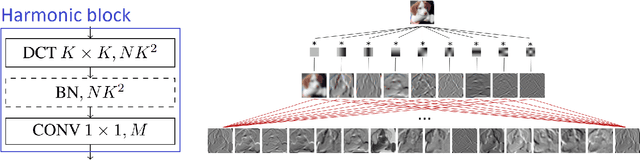

Jan 18, 2020

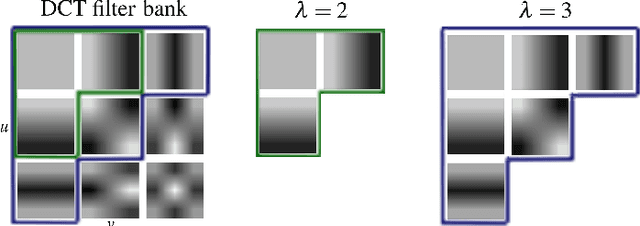

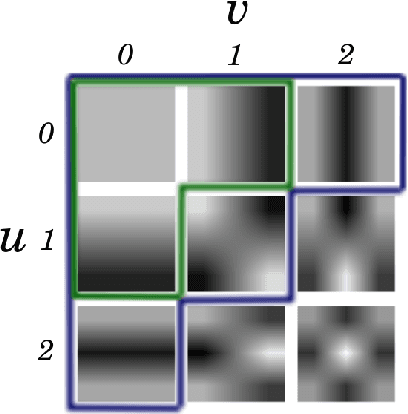

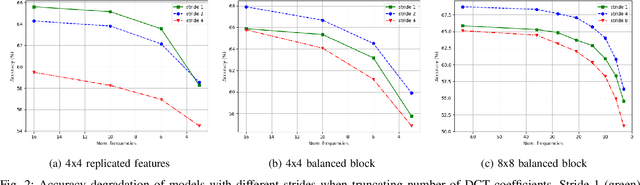

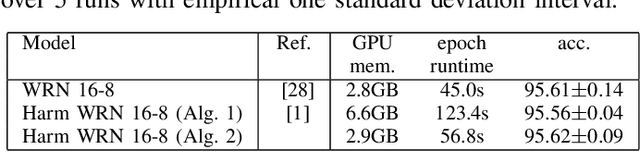

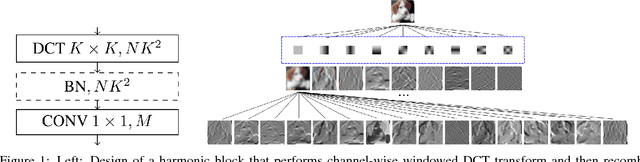

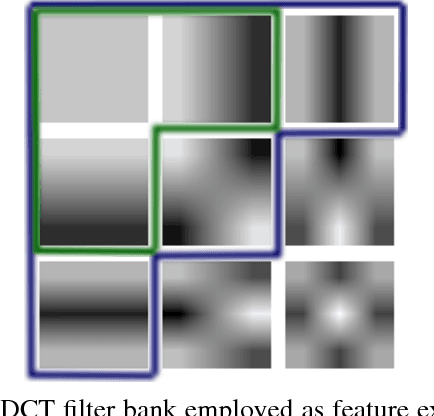

Abstract: Convolutional neural networks (CNNs) learn filters in order to capture local correlation patterns in feature space. In this paper we propose to revert to learning combinations of preset spectral filters by switching to CNNs with harmonic blocks. We rely on Discrete Cosine Transform (DCT) filters, which have excellent energy compaction properties and are widely used for image compression. The proposed harmonic blocks rely on DCT modeling and replace conventional convolutional layers to produce partially or fully harmonic versions of new or existing CNN architectures. We demonstrate how harmonic networks can be compressed efficiently and in a straightforward manner by truncating high-frequency information in harmonic blocks, which is possible due to the redundancies in the spectral domain. We report extensive experimental validation demonstrating the benefits of introducing harmonic blocks into state-of-the-art CNN models in image classification, segmentation and edge detection applications.
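The idea of truncating high-frequency information can be sketched on a single k x k filter: express it in the 2D DCT basis, zero the coefficients above a frequency cut-off, and transform back. The triangular selection rule `u + v <= max_freq` and the function names are illustrative assumptions, not necessarily the rule used in the paper.

```python
import numpy as np


def dct_basis_1d(k: int) -> np.ndarray:
    """Orthonormal 1D DCT-II basis, shape (k, k); row u holds frequency u."""
    n = np.arange(k)
    basis = np.cos(np.pi * (n[None, :] + 0.5) * n[:, None] / k)
    basis[0] *= np.sqrt(1.0 / k)
    basis[1:] *= np.sqrt(2.0 / k)
    return basis


def low_frequency_mask(k: int, max_freq: int) -> np.ndarray:
    """Boolean (k, k) mask selecting coefficients with u + v <= max_freq."""
    u, v = np.meshgrid(np.arange(k), np.arange(k), indexing="ij")
    return (u + v) <= max_freq


def truncate_filter(filt: np.ndarray, max_freq: int) -> np.ndarray:
    """Project a square filter onto its low-frequency DCT components."""
    k = filt.shape[0]
    b = dct_basis_1d(k)
    coeffs = b @ filt @ b.T                       # forward 2D DCT
    coeffs = np.where(low_frequency_mask(k, max_freq), coeffs, 0.0)
    return b.T @ coeffs @ b                       # inverse 2D DCT
```

For a 3x3 filter, `max_freq=2` retains 6 of the 9 coefficients, so a block built on the truncated basis would need correspondingly fewer combination weights per input channel.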

Harmonic Networks with Limited Training Samples

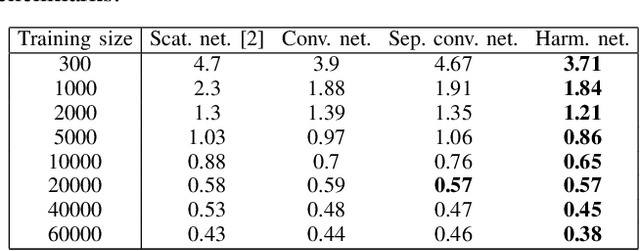

Apr 30, 2019

Abstract: Convolutional neural networks (CNNs) are very popular nowadays for image processing. CNNs allow one to learn optimal filters in a (mostly) supervised machine learning context. However, this typically requires abundant labelled training data to estimate the filter parameters. Alternative strategies have been deployed to reduce the number of parameters and/or filters to be learned and thus decrease overfitting. In the context of reverting to preset filters, we propose a computationally efficient harmonic block that uses Discrete Cosine Transform (DCT) filters in CNNs. In this work we examine the performance of harmonic networks in limited training data scenarios. We validate experimentally that their performance compares well against scattering networks, which use wavelets as preset filters.

Harmonic Networks: Integrating Spectral Information into CNNs

Dec 07, 2018

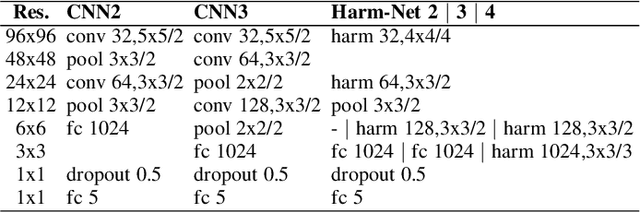

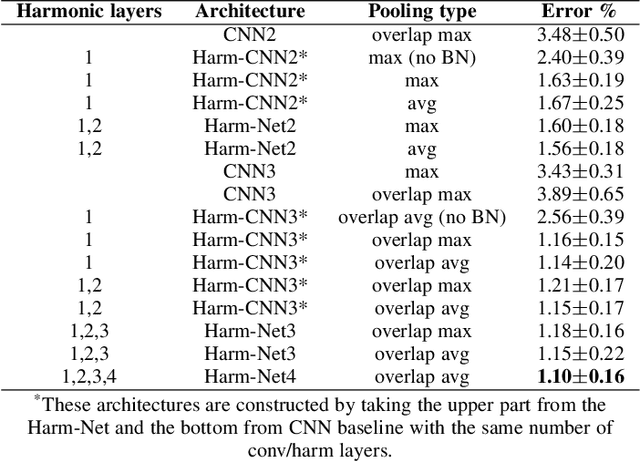

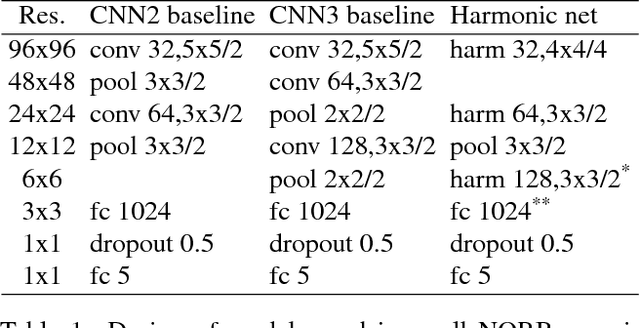

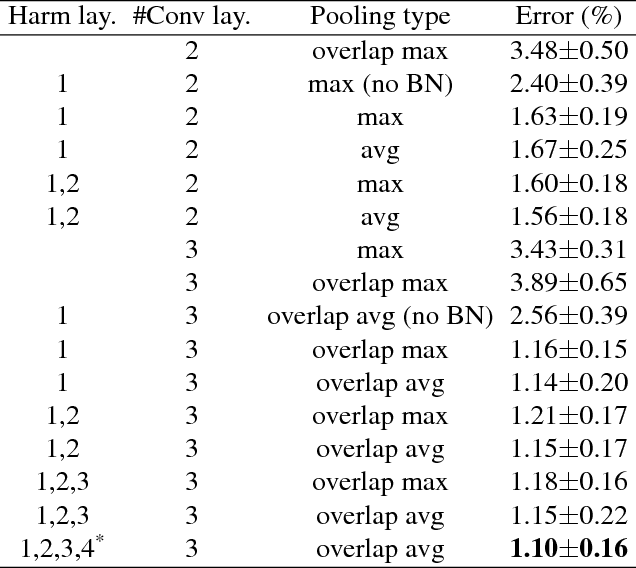

Abstract: Convolutional neural networks (CNNs) learn filters in order to capture local correlation patterns in feature space. In contrast, in this paper we propose harmonic blocks that produce features by learning optimal combinations of spectral filters defined by the Discrete Cosine Transform. The harmonic blocks replace conventional convolutional layers to construct partially or fully harmonic CNNs. We extensively validate our approach and show that introducing harmonic blocks into state-of-the-art CNN baseline architectures results in comparable or better performance in classification tasks on the small NORB, CIFAR10 and CIFAR100 datasets.
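A harmonic block as described above can be sketched in NumPy as two steps: filter each input channel with a fixed bank of 2D DCT filters, then form output channels as learned linear combinations of the responses. The function names, tensor layouts, and the use of "valid" correlation without stride, padding, or normalisation are simplifying assumptions for this example, not details taken from the paper.

```python
import numpy as np


def dct_filter_bank(k: int = 3) -> np.ndarray:
    """Return the k*k orthonormal 2D DCT-II basis filters, shape (k*k, k, k)."""
    n = np.arange(k)
    basis = np.cos(np.pi * (n[None, :] + 0.5) * n[:, None] / k)
    basis[0] *= np.sqrt(1.0 / k)
    basis[1:] *= np.sqrt(2.0 / k)
    # Each 2D filter is the outer product of two 1D basis vectors.
    return np.einsum("ui,vj->uvij", basis, basis).reshape(k * k, k, k)


def harmonic_block(image: np.ndarray, weights: np.ndarray, k: int = 3) -> np.ndarray:
    """Apply a harmonic block.

    image:   (C_in, H, W) input feature map.
    weights: (C_out, C_in, k*k) learned combination coefficients.
    Returns a (C_out, H-k+1, W-k+1) output feature map.
    """
    bank = dct_filter_bank(k)
    # windows has shape (C_in, H-k+1, W-k+1, k, k).
    windows = np.lib.stride_tricks.sliding_window_view(image, (k, k), axis=(1, 2))
    # Fixed spectral decomposition: one response map per channel and DCT filter.
    responses = np.einsum("cxyij,fij->cfxy", windows, bank)
    # Learned part: a 1x1 combination across channels and frequencies.
    return np.einsum("ocf,cfxy->oxy", weights, responses)
```

Since both steps are linear, the block is equivalent to a conventional convolution whose filters are `weights`-weighted sums of the DCT basis, so with the full basis it can represent any k x k filter.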
