Martino Lovisetto

Alteca

Deep Matrix Factorization with Adaptive Weights for Multi-View Clustering

Dec 03, 2024

Abstract: Recently, deep matrix factorization has been established as a powerful model for unsupervised tasks, achieving promising results, especially for multi-view clustering. However, existing methods often lack effective feature selection mechanisms and rely on empirical hyperparameter selection. To address these issues, we introduce a novel Deep Matrix Factorization with Adaptive Weights for Multi-View Clustering (DMFAW). Our method simultaneously incorporates feature selection and generates local partitions, enhancing clustering results. Notably, the feature weights are controlled and adjusted by a parameter that is dynamically updated using a control-theory-inspired mechanism, which not only improves the model's stability and adaptability to diverse datasets but also accelerates convergence. A late fusion approach is then proposed to align the weighted local partitions with the consensus partition. Finally, the optimization problem is solved via an alternating optimization algorithm with theoretically guaranteed convergence. Extensive experiments on benchmark datasets show that DMFAW outperforms state-of-the-art methods in terms of clustering performance.
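For orientation, the generic multi-view deep matrix factorization objective from the general literature can be written as below. This is a sketch of the unweighted baseline formulation only: the abstract does not give DMFAW's actual objective, so the feature weights, the adaptively updated control parameter, and the late fusion term are not reproduced here, and all symbols ($X^{(v)}$ for the data matrix of view $v$, $Z_i^{(v)}$ for the layer-wise basis matrices, $H_m^{(v)}$ for the final-layer representation, $V$ views, $m$ layers) are assumed notation.

$$
\min_{\{Z_i^{(v)}\},\, \{H_m^{(v)}\}} \; \sum_{v=1}^{V} \left\| X^{(v)} - Z_1^{(v)} Z_2^{(v)} \cdots Z_m^{(v)} H_m^{(v)} \right\|_F^2
\quad \text{s.t.} \quad H_m^{(v)} \ge 0 .
$$

In this baseline form, each view is factorized through $m$ layers and the final-layer representation $H_m^{(v)}$ serves as that view's low-dimensional embedding, from which a local partition can be derived.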

CADMR: Cross-Attention and Disentangled Learning for Multimodal Recommender Systems

Dec 03, 2024

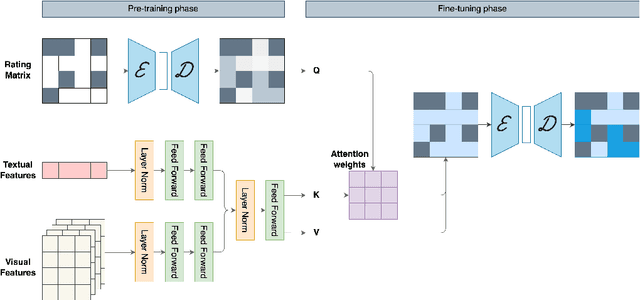

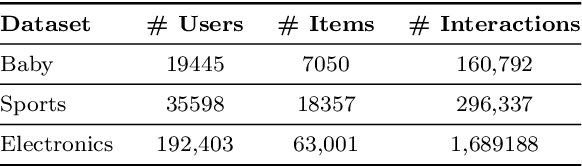

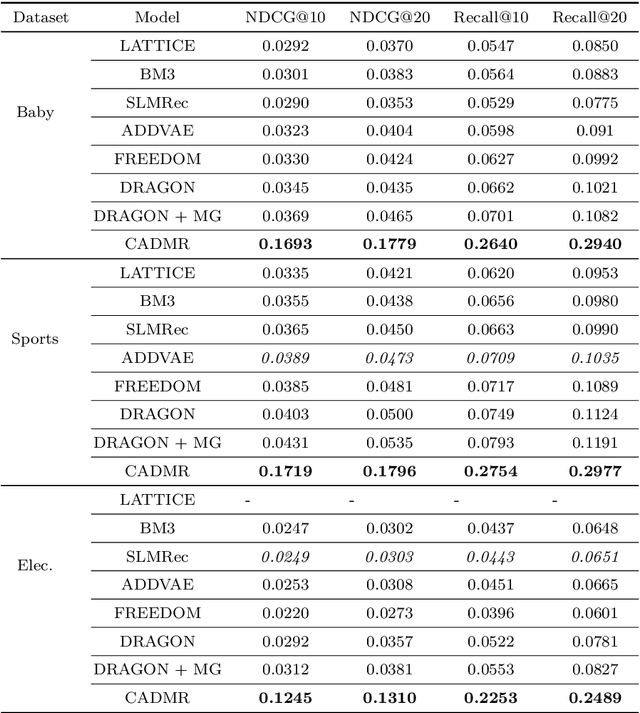

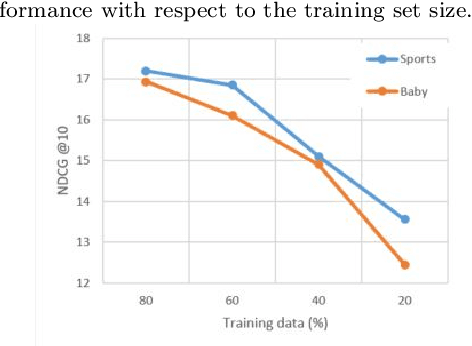

Abstract: The increasing availability and diversity of multimodal data in recommender systems offer new avenues for enhancing recommendation accuracy and user satisfaction. However, these systems must contend with high-dimensional, sparse user-item rating matrices, where reconstructing the matrix with only small subsets of preferred items for each user poses a significant challenge. To address this, we propose CADMR, a novel autoencoder-based multimodal recommender system framework. CADMR leverages multi-head cross-attention mechanisms and Disentangled Learning to effectively integrate and utilize heterogeneous multimodal data in reconstructing the rating matrix. Our approach first disentangles modality-specific features while preserving their interdependence, thereby learning a joint latent representation. The multi-head cross-attention mechanism is then applied to enhance user-item interaction representations with respect to the learned multimodal item latent representations. We evaluate CADMR on three benchmark datasets, demonstrating significant performance improvements over state-of-the-art methods.
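The cross-attention step mentioned in the abstract can be illustrated with a minimal NumPy sketch of standard multi-head scaled dot-product cross-attention. This is not CADMR's implementation: the function name, the shapes, the random projection matrices, and the choice of user-item interaction embeddings as queries with item latent representations as keys and values are all assumptions made for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_cross_attention(queries, context, w_q, w_k, w_v, w_o, num_heads):
    """Generic multi-head cross-attention (hypothetical helper).

    queries: (n_q, d_model) rows that attend, e.g. interaction embeddings.
    context: (n_c, d_model) rows attended to, e.g. item latent vectors.
    Returns the attended output (n_q, d_model) and the attention
    weights (num_heads, n_q, n_c).
    """
    n_q, d_model = queries.shape
    n_c = context.shape[0]
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    # Project, then split the feature dimension into heads: (heads, n, d_head).
    q = (queries @ w_q).reshape(n_q, num_heads, d_head).transpose(1, 0, 2)
    k = (context @ w_k).reshape(n_c, num_heads, d_head).transpose(1, 0, 2)
    v = (context @ w_v).reshape(n_c, num_heads, d_head).transpose(1, 0, 2)

    # Scaled dot-product scores between every query and every context row.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)

    # Weighted sum of values per head, then concatenate heads and project.
    heads = weights @ v
    merged = heads.transpose(1, 0, 2).reshape(n_q, d_model)
    return merged @ w_o, weights


# Toy example with assumed sizes: 4 query rows, 6 context rows, 2 heads.
rng = np.random.default_rng(0)
d_model, num_heads = 8, 2
queries = rng.normal(size=(4, d_model))
context = rng.normal(size=(6, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out, attn = multi_head_cross_attention(
    queries, context, w_q, w_k, w_v, w_o, num_heads
)
print(out.shape, attn.shape)
```

Each query row ends up as a mixture of the context rows, with one set of mixing weights per head. In a trained model the projection matrices would be learned rather than sampled at random.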
