Martin Pal

Multiarmed Bandit Problems with Delayed Feedback

Jun 18, 2013

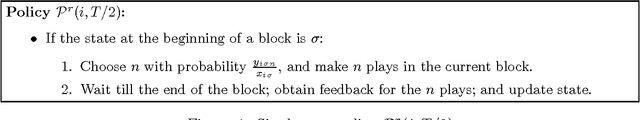

Abstract: In this paper we initiate the study of optimization of bandit-type problems in scenarios where the feedback of a play is not immediately known. This arises naturally in allocation problems, which have been studied extensively in the literature, albeit in the absence of delays in the feedback. We study this problem in the Bayesian setting. In the presence of delays, no solution with provable guarantees is known to exist with sub-exponential running time. We show that bandit problems with delayed feedback that arise in allocation settings can be forced to have significant structure, with a slight loss in optimality. This structure gives us the ability to reason about the relationship of single-arm policies to the entangled optimum policy, and eventually leads to an O(1) approximation for a significantly general class of priors. The structural insights we develop are of key interest and carry over to the setting where the feedback of an action is available instantaneously, and we improve all previous results in this setting as well.
