Martin Lange

On the Expressiveness of State Space Models via Temporal Logics

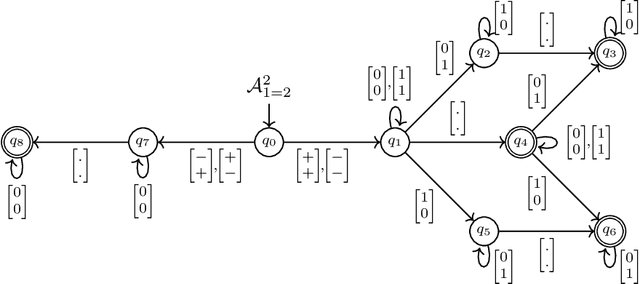

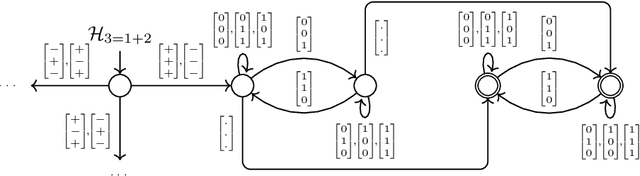

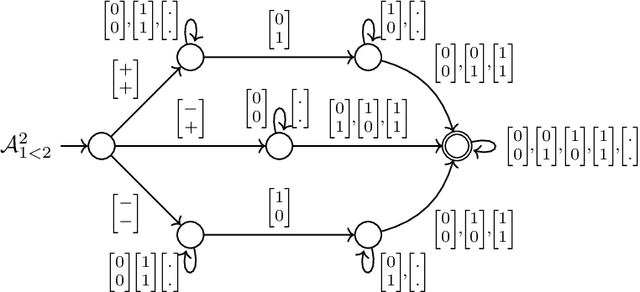

Jan 27, 2026

Abstract: We investigate the expressive power of state space models (SSM), which have recently emerged as a potential alternative to transformer architectures in large language models. Building on recent work, we analyse SSM expressiveness through fragments and extensions of linear temporal logic over finite traces. Our results show that the expressive capabilities of SSM vary substantially depending on the underlying gating mechanism. We further distinguish between SSM operating over fixed-width arithmetic (quantised models), whose expressive power remains within regular languages, and SSM with unbounded precision, which can capture counting properties and non-regular languages. In addition, we provide a systematic comparison between these different SSM variants and known results on transformers, thereby clarifying how the two architectures relate in terms of expressive power.

The Computational Complexity of Satisfiability in State Space Models

Aug 25, 2025

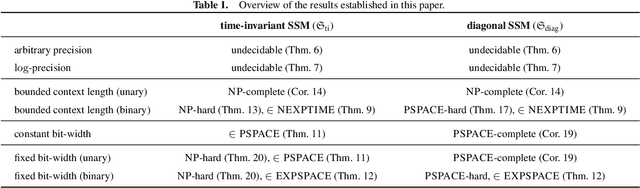

Abstract: We analyse the complexity of the satisfiability problem ssmSAT for State Space Models (SSM), which asks whether an input sequence can lead the model to an accepting configuration. We find that ssmSAT is undecidable in general, reflecting the computational power of SSM. Motivated by practical settings, we identify two natural restrictions under which ssmSAT becomes decidable and establish corresponding complexity bounds. First, for SSM with bounded context length, ssmSAT is NP-complete when the input length is given in unary and in NEXPTIME (and PSPACE-hard) when the input length is given in binary. Second, for quantised SSM operating over fixed-width arithmetic, ssmSAT is PSPACE-complete or in EXPSPACE, depending on the bit-width encoding. While these results hold for diagonal gated SSM, we also establish complexity bounds for time-invariant SSM. Our results establish a first complexity landscape for formal reasoning in SSM and highlight fundamental limits and opportunities for the verification of SSM-based language models.
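As an illustration of the bounded-context case, the following is a minimal sketch of a brute-force satisfiability search. Everything in it is an assumption made for illustration and is not taken from the paper: the scalar recurrence h_t = GATE[x_t] * h_{t-1} + INPUT[x_t], the two-letter alphabet, the parameter tables, and the threshold-based notion of an accepting configuration. It shows only that checking one candidate input sequence takes time linear in its length, while the number of candidates grows exponentially with the length bound.

```python
from itertools import product

# Hypothetical toy model: a scalar gated SSM over the alphabet {"a", "b"}.
# State update: h_t = GATE[x_t] * h_{t-1} + INPUT[x_t], starting from h_0 = 0.
GATE = {"a": 1.0, "b": 0.5}
INPUT = {"a": 1.0, "b": -1.0}
THRESHOLD = 2.5  # assumed acceptance condition: final state >= THRESHOLD


def run(word):
    """Return the final state after reading `word` symbol by symbol."""
    h = 0.0
    for symbol in word:
        h = GATE[symbol] * h + INPUT[symbol]
    return h


def accepts(word):
    """Check a single candidate input sequence (linear in its length)."""
    return run(word) >= THRESHOLD


def ssm_sat(max_len):
    """Search all words of length <= max_len; return an accepted one or None."""
    for n in range(max_len + 1):
        for word in product(GATE, repeat=n):
            if accepts(word):
                return "".join(word)
    return None


if __name__ == "__main__":
    print(ssm_sat(2))
    print(ssm_sat(3))
```

In this toy instance no word of length at most 2 reaches the threshold, while a word of length 3 does, so the answer depends on the length bound.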

The Logical Expressiveness of Temporal GNNs via Two-Dimensional Product Logics

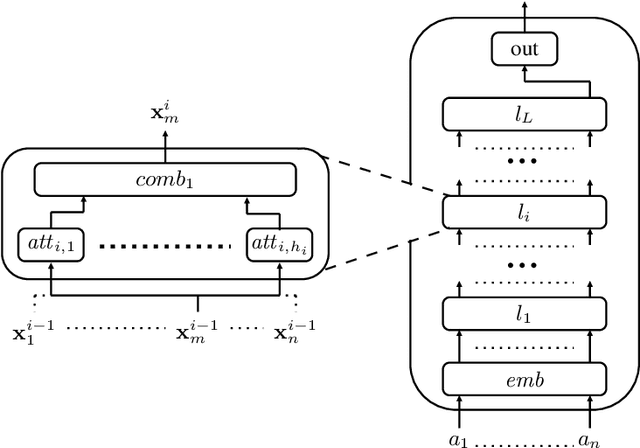

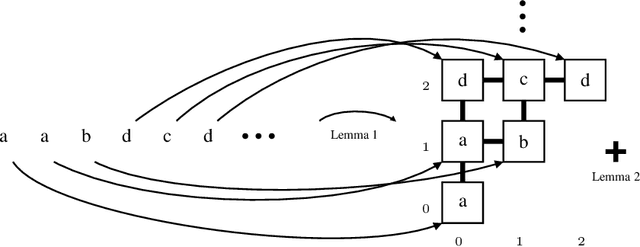

May 17, 2025

Abstract: In recent years, the expressive power of various neural architectures -- including graph neural networks (GNNs), transformers, and recurrent neural networks -- has been characterised using tools from logic and formal language theory. As the capabilities of basic architectures are becoming well understood, increasing attention is turning to models that combine multiple architectural paradigms. Particularly important among them, and challenging to analyse, are temporal extensions of GNNs, which integrate both spatial (graph-structure) and temporal (evolution over time) dimensions. In this paper, we initiate the study of logical characterisation of temporal GNNs by connecting them to two-dimensional product logics. We show that the expressive power of temporal GNNs depends on how graph and temporal components are combined. In particular, temporal GNNs that apply static GNNs recursively over time can capture all properties definable in the product logic of (past) propositional temporal logic PTL and the modal logic K. In contrast, architectures such as graph-and-time TGNNs and global TGNNs can only express restricted fragments of this logic, where the interaction between temporal and spatial operators is syntactically constrained. These results yield the first logical characterisations of temporal GNNs and establish new relative expressiveness results for temporal GNNs.

The Computational Complexity of Formal Reasoning for Encoder-Only Transformers

May 28, 2024

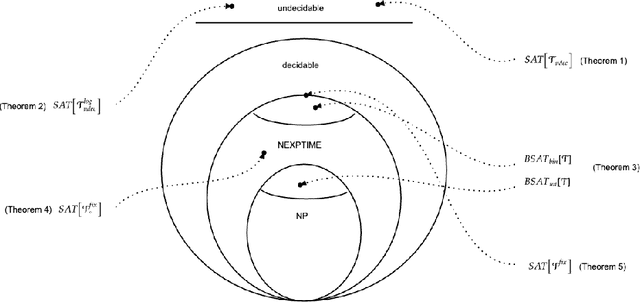

Abstract: We investigate challenges and possibilities of formal reasoning for encoder-only transformers (EOT), meaning sound and complete methods for verifying or interpreting behaviour. In detail, we condense related formal reasoning tasks in the form of a naturally occurring satisfiability problem (SAT). We find that SAT is undecidable for EOT as commonly considered in the expressiveness community. Furthermore, we identify practical scenarios where SAT is decidable and establish corresponding complexity bounds. Besides trivial cases, we find that quantized EOT, namely those restricted by some fixed-width arithmetic, lead to the decidability of SAT due to their limited attention capabilities. However, the problem remains difficult, as we establish those scenarios where SAT is NEXPTIME-hard and those where we can show that it is solvable in NEXPTIME for quantized EOT. To complement our theoretical results, we put our findings and their implications in the overall perspective of formal reasoning.

Verifying And Interpreting Neural Networks using Finite Automata

Nov 02, 2022

Abstract: Verifying properties and interpreting the behaviour of deep neural networks (DNN) is an important task given their ubiquitous use in applications, including safety-critical ones, and their blackbox nature. We propose an automata-theoretic approach to tackling problems arising in DNN analysis. We show that the input-output behaviour of a DNN can be captured precisely by a (special) weak Büchi automaton of exponential size. We show how these can be used to address common verification and interpretation tasks like adversarial robustness, minimum sufficient reasons, etc. We report on a proof-of-concept implementation translating DNN to automata on finite words for better efficiency at the cost of losing precision in analysis.

We Cannot Guarantee Safety: The Undecidability of Graph Neural Network Verification

Jun 10, 2022

Abstract: Graph Neural Networks (GNN) are commonly used for two tasks: (whole) graph classification and node classification. We formally introduce generically formulated decision problems for both tasks, corresponding to the following pattern: given a GNN, some specification of valid inputs, and some specification of valid outputs, decide whether there is a valid input satisfying the output specification. We then prove that graph classifier verification is undecidable in general, implying that there cannot be an algorithm surely guaranteeing the absence of misclassification of any kind. Additionally, we show that verification in the node classification case becomes decidable as soon as we restrict the degree of the considered graphs. Furthermore, we discuss possible changes to these results depending on the considered GNN model and specifications.

Reachability In Simple Neural Networks

Mar 15, 2022

Abstract: We investigate the complexity of the reachability problem for (deep) neural networks: does it compute valid output given some valid input? It was recently claimed that the problem is NP-complete for general neural networks and specifications over the input/output dimension given by conjunctions of linear inequalities. We recapitulate the proof and repair some flaws in the original upper and lower bound proofs. Motivated by the general result, we show that NP-hardness already holds for restricted classes of simple specifications and neural networks. Allowing for a single hidden layer and an output dimension of one as well as neural networks with just one negative, zero and one positive weight or bias is sufficient to ensure NP-hardness. Additionally, we give a thorough discussion and outlook of possible extensions for this direction of research on neural network verification.
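The reachability question can be made concrete with a small sketch that checks whether a candidate input is a witness, that is, whether it satisfies the input specification and the network's output satisfies the output specification. All specifics are assumptions for illustration and are not taken from the paper: the ReLU activation, the weights W1, B1, W2, B2 (chosen so the network computes |x1 - x2|), and the encoding of a specification as a list of (coeffs, bound) pairs meaning coeffs . v <= bound.

```python
# Hypothetical network with one hidden layer and output dimension one.
# With these weights it computes |x1 - x2|; all values are illustrative.
W1 = [[1.0, -1.0], [-1.0, 1.0]]
B1 = [0.0, 0.0]
W2 = [[1.0, 1.0]]
B2 = [0.0]

# Specifications as conjunctions of linear inequalities coeffs . v <= bound.
IN_SPEC = [([1.0, 0.0], 1.0), ([-1.0, 0.0], 0.0),   # 0 <= x1 <= 1
           ([0.0, 1.0], 1.0), ([0.0, -1.0], 0.0)]   # 0 <= x2 <= 1
OUT_SPEC = [([-1.0], -1.0)]                          # y >= 1


def affine(weights, bias, x):
    """Compute weights * x + bias for a list-of-rows weight matrix."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(v):
    return [max(0.0, x) for x in v]


def network(x):
    """Evaluate the toy network: affine layer, ReLU, affine layer."""
    return affine(W2, B2, relu(affine(W1, B1, x)))


def satisfies(spec, v):
    """Check that v satisfies every inequality in the conjunction."""
    return all(sum(c * vi for c, vi in zip(coeffs, v)) <= bound
               for coeffs, bound in spec)


def is_witness(x, in_spec, out_spec):
    """A witness is a valid input whose output is also valid."""
    return satisfies(in_spec, x) and satisfies(out_spec, network(x))


if __name__ == "__main__":
    print(is_witness([1.0, 0.0], IN_SPEC, OUT_SPEC))
    print(is_witness([0.5, 0.5], IN_SPEC, OUT_SPEC))
```

Checking a given candidate is cheap; the difficulty of the decision problem lies in finding one among all valid inputs.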

Reachability Is NP-Complete Even for the Simplest Neural Networks

Sep 01, 2021

Abstract: We investigate the complexity of the reachability problem for (deep) neural networks: does it compute valid output given some valid input? It was recently claimed that the problem is NP-complete for general neural networks and conjunctive input/output specifications. We repair some flaws in the original upper and lower bound proofs. We then show that NP-hardness already holds for restricted classes of simple specifications and neural networks with just one layer, as well as neural networks with minimal requirements on the occurring parameters.
