Markus Bohlin

Machine Learning Testing in an ADAS Case Study Using Simulation-Integrated Bio-Inspired Search-Based Testing

Mar 22, 2022

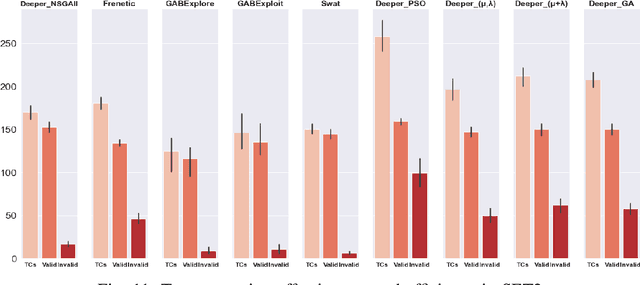

Abstract: This paper presents an extended version of Deeper, a search-based simulation-integrated test solution that generates failure-revealing test scenarios for testing a deep neural network-based lane-keeping system. In the newly proposed version, we utilize a new set of bio-inspired search algorithms, namely a genetic algorithm (GA), $({\mu}+{\lambda})$ and $({\mu},{\lambda})$ evolution strategies (ES), and particle swarm optimization (PSO), that leverage a quality population seed and domain-specific crossover and mutation operations tailored for the presentation model used for modeling the test scenarios. To demonstrate the capabilities of the new test generators within Deeper, we carry out an empirical evaluation and compare against the results of five participating tools in the cyber-physical systems testing competition at SBST 2021. Our evaluation shows that the newly proposed test generators in Deeper not only represent a considerable improvement on the previous version but also prove to be effective and efficient in provoking a considerable number of diverse failure-revealing test scenarios for testing an ML-driven lane-keeping system. They can trigger several failures while promoting test scenario diversity, under a limited test time budget, high target failure severity, and strict speed limit constraints.
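The difference between the $({\mu}+{\lambda})$ and $({\mu},{\lambda})$ evolution strategies named in the abstract can be illustrated with a minimal sketch. This is not Deeper's implementation: the function names `evolve` and `toy_fitness`, the Gaussian mutation, and the numeric vector encoding are all assumptions made for illustration, whereas Deeper uses domain-specific operators and a simulation-based fitness.

```python
import random


def toy_fitness(candidate):
    # Hypothetical stand-in for a simulation-based fitness: higher is better,
    # with the maximum at the origin.
    return -sum(x * x for x in candidate)


def evolve(fitness, init_pop, mu, lam, generations, plus=True, sigma=0.1, seed=0):
    """Sketch of a (mu+lambda) or (mu,lambda) evolution strategy.

    plus=True  -> survivors are chosen from parents and offspring together.
    plus=False -> survivors are chosen from offspring only (requires lam >= mu).
    """
    rng = random.Random(seed)
    # Start from the mu best individuals of the seed population.
    parents = sorted(init_pop, key=fitness, reverse=True)[:mu]
    for _ in range(generations):
        offspring = []
        for _ in range(lam):
            parent = rng.choice(parents)
            # Assumed mutation: add Gaussian noise to every gene.
            offspring.append([x + rng.gauss(0.0, sigma) for x in parent])
        pool = parents + offspring if plus else offspring
        parents = sorted(pool, key=fitness, reverse=True)[:mu]
    return parents


seed_population = [[1.0, -1.0], [0.5, 0.5], [2.0, 2.0], [-1.5, 0.3]]
plus_result = evolve(toy_fitness, seed_population, mu=2, lam=6, generations=20, plus=True)
comma_result = evolve(toy_fitness, seed_population, mu=2, lam=6, generations=20, plus=False)
```

Because the plus variant keeps parents in the selection pool, its best fitness can never get worse from one generation to the next; the comma variant discards parents each generation, which trades that guarantee for more exploration.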

An Autonomous Performance Testing Framework using Self-Adaptive Fuzzy Reinforcement Learning

Aug 19, 2019

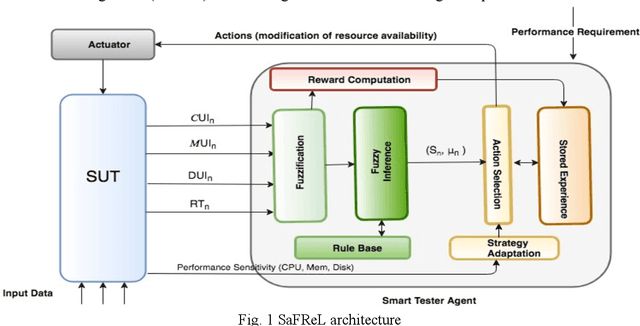

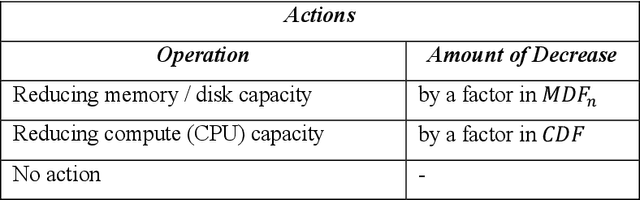

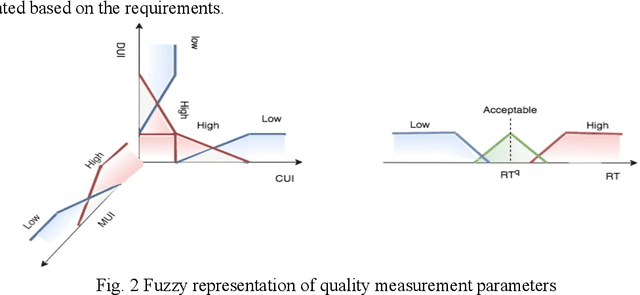

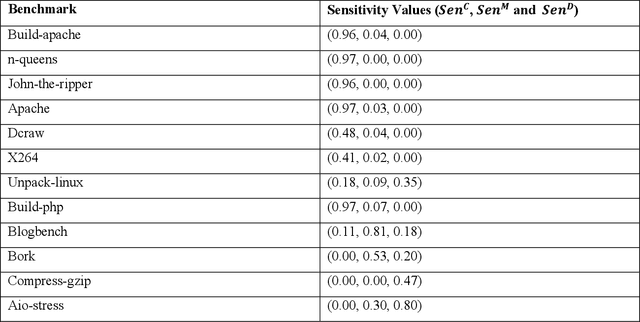

Abstract: Test automation can reduce cost and human effort. If the optimal policy, i.e., the course of actions taken, for the intended objective in a testing process could be learnt by the testing system (e.g., a smart tester agent), then it could be reused in similar situations, thus leading to higher efficiency, i.e., less computational time. Automating stress testing to find performance breaking points remains a challenge for complex software systems. Common approaches are mainly based on source code or system model analysis, or on use-case based techniques. However, source code or system models might not be available at testing time. In this paper, we propose a self-adaptive fuzzy reinforcement learning-based performance (stress) testing framework (SaFReL) that enables the tester agent to learn the optimal policy for generating stress test cases leading to the performance breaking point without access to a performance model of the system under test. SaFReL learns the optimal policy through an initial learning phase, then reuses it during a transfer learning phase, while keeping the learning running in the long term. Through multiple experiments in a simulated environment, we demonstrate that our approach generates the stress test cases for different programs efficiently and adaptively without access to performance models.
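The idea of a tester agent learning a policy that drives a system toward its breaking point, without a performance model, can be sketched with plain tabular Q-learning. This is a simplification and not SaFReL itself: the fuzzy state representation, the self-adaptive action selection, and the transfer learning phase are omitted, and the toy environment (`toy_step`, six stress levels, the reward values) is entirely hypothetical.

```python
import random

N_STATES = 6    # assumed stress levels 0..5; level 5 is the "breaking point"
N_ACTIONS = 2   # assumed actions: 0 = relax resources, 1 = tighten resources


def toy_step(state, action):
    # Hypothetical environment: the agent only observes the next state and a
    # reward, never an internal performance model.
    nxt = min(state + 1, N_STATES - 1) if action == 1 else max(state - 1, 0)
    reward = 10.0 if nxt == N_STATES - 1 else -1.0
    return nxt, reward


def q_learning(step, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2,
               max_steps=50, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            if state == N_STATES - 1:
                break  # breaking point reached, episode ends
            # Epsilon-greedy action selection.
            if rng.random() < epsilon:
                action = rng.randrange(N_ACTIONS)
            else:
                action = max(range(N_ACTIONS), key=lambda a: q[state][a])
            nxt, reward = step(state, action)
            # Terminal states contribute no future value.
            future = 0.0 if nxt == N_STATES - 1 else gamma * max(q[nxt])
            q[state][action] += alpha * (reward + future - q[state][action])
            state = nxt
    return q


q_table = q_learning(toy_step)
policy = [max(range(N_ACTIONS), key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
```

Once learned, a table like `q_table` could be reused as the starting point for a similar system rather than relearned from zeros, which is the intuition behind the reuse phase described in the abstract.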
