Marjolein Oostrom

Bayesian SegNet for Semantic Segmentation with Improved Interpretation of Microstructural Evolution During Irradiation of Materials

Feb 20, 2025

Abstract:Understanding the relationship between the evolution of microstructures of irradiated LiAlO2 pellets and tritium diffusion, retention and release could improve predictions of tritium-producing burnable absorber rod performance. Given expert-labeled segmented images of irradiated and unirradiated pellets, we trained Deep Convolutional Neural Networks to segment images into defect, grain, and boundary classes. Qualitative microstructural information was calculated from these segmented images to facilitate the comparison of unirradiated and irradiated pellets. We tested modifications to improve the sensitivity of the model, including incorporating meta-data into the model and utilizing uncertainty quantification. The predicted segmentation was similar to the expert-labeled segmentation for most methods of microstructural qualification, including pixel proportion, defect area, and defect density. Overall, the high performance metrics for the best models for both irradiated and unirradiated images show that utilizing neural network models is a viable alternative to expert-labeled images.
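
The abstract names pixel proportion, defect area, and defect density but does not define them. As a rough illustration only, the sketch below computes one plausible version of each from a small label mask. The label encoding (`DEFECT`, `GRAIN`, `BOUNDARY`), the 4-connectivity rule, and the per-pixel density definition are all assumptions made for this example and are not taken from the paper.

```python
from collections import deque

# Hypothetical label encoding; the paper does not specify one.
DEFECT, GRAIN, BOUNDARY = 0, 1, 2


def pixel_proportions(mask):
    """Return the fraction of pixels assigned to each label in a 2D mask."""
    counts = {}
    total = 0
    for row in mask:
        for label in row:
            counts[label] = counts.get(label, 0) + 1
            total += 1
    return {label: count / total for label, count in counts.items()}


def defect_areas(mask, defect_label=DEFECT):
    """Return the pixel area of each 4-connected defect region, in scan order."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    areas = []
    for r in range(height):
        for c in range(width):
            if mask[r][c] != defect_label or seen[r][c]:
                continue
            # Breadth-first flood fill over one connected defect region.
            seen[r][c] = True
            queue = deque([(r, c)])
            area = 0
            while queue:
                y, x = queue.popleft()
                area += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and not seen[ny][nx]
                            and mask[ny][nx] == defect_label):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            areas.append(area)
    return areas


def defect_density(mask, defect_label=DEFECT):
    """Return the number of defect regions per pixel of image area (assumed definition)."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    return len(defect_areas(mask, defect_label)) / (height * width)
```

Running the same three functions on a predicted mask and on the expert-labeled mask would give the kind of side-by-side comparison the abstract describes. Converting pixel counts to physical units would require the image scale, which is not given here.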

DBCal: Density Based Calibration of classifier predictions for uncertainty quantification

Apr 01, 2022

Abstract:Measurement of uncertainty of predictions from machine learning methods is important across scientific domains and applications. We present, to our knowledge, the first such technique that quantifies the uncertainty of predictions from a classifier and accounts for both the classifier's belief and performance. We prove that our method provides an accurate estimate of the probability that the outputs of two neural networks are correct by showing an expected calibration error of less than 0.2% on a binary classifier, and less than 3% on a semantic segmentation network with extreme class imbalance. We empirically show that the uncertainty returned by our method is an accurate measurement of the probability that the classifier's prediction is correct and therefore has broad utility in uncertainty propagation.
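
The expected calibration error (ECE) quoted above is commonly computed by binning predictions by confidence and averaging the gap between accuracy and mean confidence in each bin, weighted by bin size. The sketch below shows that standard binned estimate. The function name, the equal-width bins, and the default of 10 bins are assumptions for illustration. It is not the DBCal method itself, and the paper may use a different binning scheme.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned ECE: weighted mean of |accuracy - mean confidence| over bins.

    confidences: predicted probabilities in [0, 1] for the chosen class.
    correct: booleans, True where the prediction matched the label.
    n_bins: number of equal-width confidence bins (assumed, not from the paper).
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("at least one prediction is required")

    conf_sum = [0.0] * n_bins
    hit_count = [0] * n_bins
    bin_count = [0] * n_bins
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 is placed in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        conf_sum[idx] += conf
        hit_count[idx] += 1 if ok else 0
        bin_count[idx] += 1

    ece = 0.0
    for b in range(n_bins):
        if bin_count[b] == 0:
            continue  # empty bins contribute nothing
        mean_conf = conf_sum[b] / bin_count[b]
        accuracy = hit_count[b] / bin_count[b]
        ece += (bin_count[b] / n) * abs(accuracy - mean_conf)
    return ece
```

With this estimate, a classifier that reports 0.8 confidence on predictions that are right only half the time scores an ECE of about 0.3, while one whose 0.9-confidence predictions are right 90% of the time scores close to 0.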

An Automated Scanning Transmission Electron Microscope Guided by Sparse Data Analytics

Sep 30, 2021

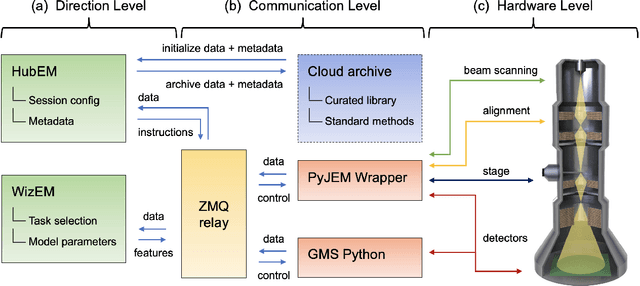

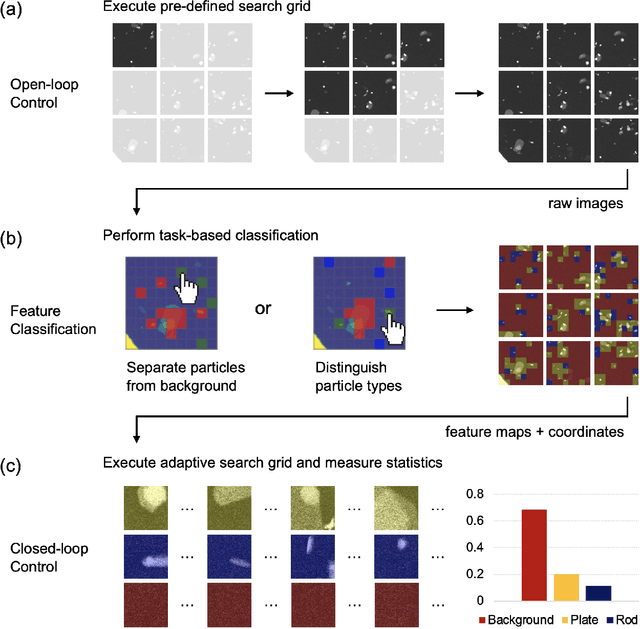

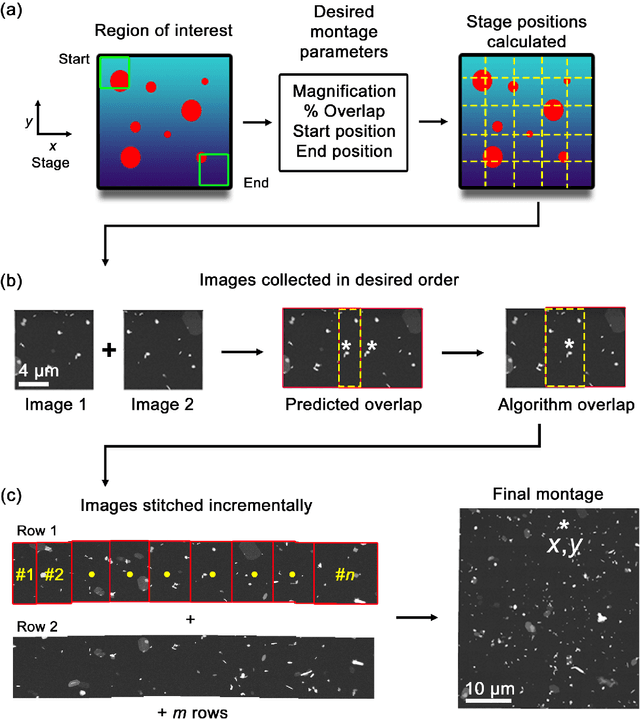

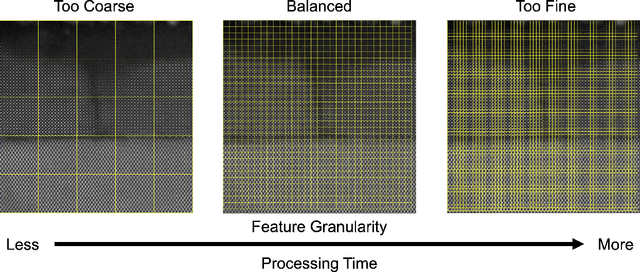

Abstract:Artificial intelligence (AI) promises to reshape scientific inquiry and enable breakthrough discoveries in areas such as energy storage, quantum computing, and biomedicine. Scanning transmission electron microscopy (STEM), a cornerstone of the study of chemical and materials systems, stands to benefit greatly from AI-driven automation. However, present barriers to low-level instrument control, as well as generalizable and interpretable feature detection, make truly automated microscopy impractical. Here, we discuss the design of a closed-loop instrument control platform guided by emerging sparse data analytics. We demonstrate how a centralized controller, informed by machine learning combining limited a priori knowledge and task-based discrimination, can drive on-the-fly experimental decision-making. This platform unlocks practical, automated analysis of a variety of material features, enabling new high-throughput and statistical studies.

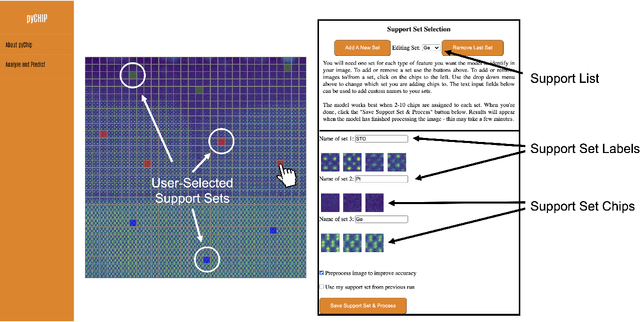

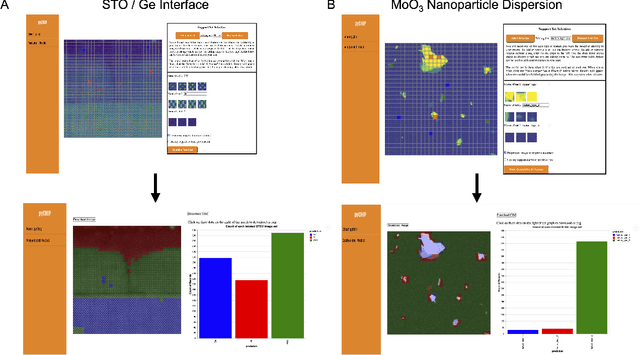

Design of a Graphical User Interface for Few-Shot Machine Learning Classification of Electron Microscopy Data

Jul 21, 2021

Abstract:The recent growth in data volumes produced by modern electron microscopes requires rapid, scalable, and flexible approaches to image segmentation and analysis. Few-shot machine learning, which can richly classify images from a handful of user-provided examples, is a promising route to high-throughput analysis. However, current command-line implementations of such approaches can be slow and unintuitive to use, lacking the real-time feedback necessary to perform effective classification. Here we report on the development of a Python-based graphical user interface that enables end users to easily conduct and visualize the output of few-shot learning models. This interface is lightweight and can be hosted locally or on the web, providing the opportunity to reproducibly conduct, share, and crowd-source few-shot analyses.
