Marine Collery

Neural-based classification rule learning for sequential data

Feb 22, 2023

Abstract: Discovering interpretable patterns for classification of sequential data is of key importance for a variety of fields, ranging from genomics to fraud detection and, more generally, interpretable decision-making. In this paper, we propose a novel differentiable, fully interpretable method to discover both local and global patterns (i.e., capturing a relative or absolute temporal dependency) for rule-based binary classification. It consists of a convolutional binary neural network with an interpretable neural filter and a training strategy based on dynamically enforced sparsity. We demonstrate the validity and usefulness of the approach on synthetic datasets and on an open-source peptides dataset. Key to this end-to-end differentiable method is that the expressive patterns used in the rules are learned alongside the rules themselves.
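To make the distinction between local and global patterns concrete, here is a minimal sketch of what such rules could look like once extracted. It is not the paper's differentiable network: the function names, the `?` wildcard, and the example motif are all assumptions made for illustration. A local pattern fires wherever a motif occurs (relative dependency), while a global pattern checks a fixed position (absolute dependency).

```python
def local_match(seq: str, motif: str) -> bool:
    """True if the motif occurs at any offset in seq (relative dependency).

    '?' in the motif is a hypothetical wildcard matching any symbol.
    """
    k = len(motif)
    return any(
        all(m == "?" or m == s for m, s in zip(motif, seq[i:i + k]))
        for i in range(len(seq) - k + 1)
    )


def global_match(seq: str, position: int, symbol: str) -> bool:
    """True if seq has the given symbol at a fixed index (absolute dependency)."""
    return position < len(seq) and seq[position] == symbol


def classify(seq: str) -> bool:
    """Hypothetical rule: positive if 'A?C' appears anywhere OR seq starts with 'G'."""
    return local_match(seq, "A?C") or global_match(seq, 0, "G")
```

A slid filter followed by an "any window matches" reduction loosely mirrors what a convolutional layer with a pooled output computes, which is why a convolutional architecture is a natural fit for the local case.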

Differentiable Rule Induction with Learned Relational Features

Jan 17, 2022

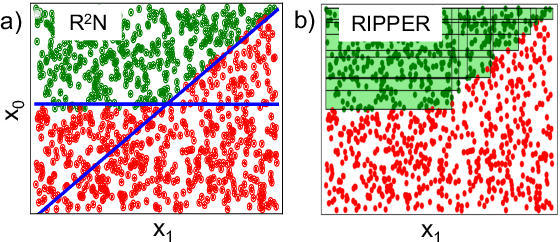

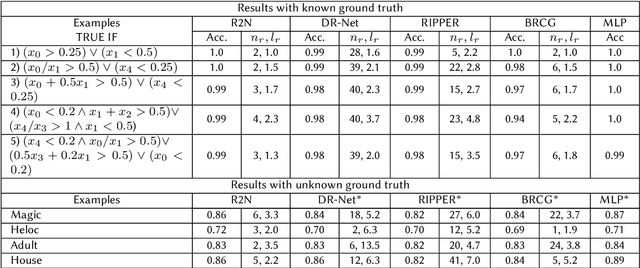

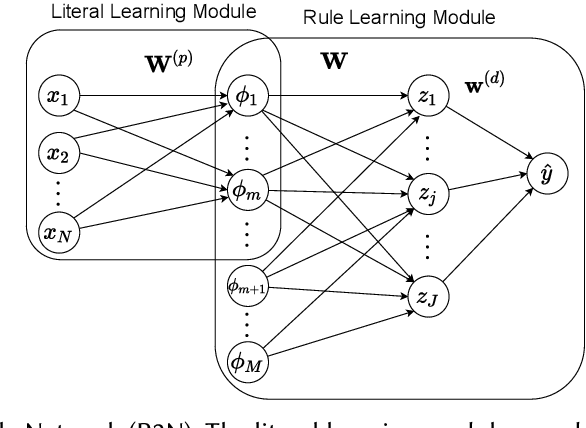

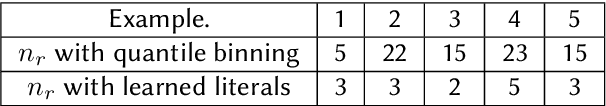

Abstract: Rule-based decision models are attractive due to their interpretability. However, existing rule induction methods often result in long and consequently less interpretable sets of rules. This problem can, in many cases, be attributed to the rule learner's lack of an appropriately expressive vocabulary, i.e., relevant predicates. Most existing rule induction algorithms presume the availability of the predicates used to represent the rules, naturally decoupling the predicate definition and rule learning phases. In contrast, we propose the Relational Rule Network (RRN), a neural architecture that learns relational predicates representing a linear relationship among attributes, along with the rules that use them. This approach opens the door to increasing the expressiveness of induced decision models by coupling predicate learning directly with rule learning in an end-to-end differentiable fashion. On benchmark tasks, we show that these relational predicates are simple enough to retain interpretability, yet improve prediction accuracy and provide sets of rules that are more concise than those of state-of-the-art rule induction algorithms.
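As a rough illustration of a relational predicate, the sketch below treats a "linear relationship among attributes" as a thresholded weighted sum and combines two such predicates into a single rule. The inequality form, the attribute names (`income`, `debt`, `age`), and the weights are assumptions chosen for the example; in RRN the predicates and rules are learned, not hand-written.

```python
def predicate(weights: dict, bias: float):
    """Build a predicate that holds when sum(w_i * x_i) + bias > 0.

    The strict-inequality form is an assumption for this sketch.
    """
    def holds(x: dict) -> bool:
        return sum(w * x[name] for name, w in weights.items()) + bias > 0
    return holds


# Hypothetical predicates over made-up attributes.
income_exceeds_twice_debt = predicate({"income": 1.0, "debt": -2.0}, 0.0)
is_over_18 = predicate({"age": 1.0}, -18.0)


def rule(x: dict) -> bool:
    """A single conjunctive rule using both predicates."""
    return income_exceeds_twice_debt(x) and is_over_18(x)
```

One relational predicate such as `income > 2 * debt` replaces what would otherwise take many single-attribute threshold rules to approximate, which is the sense in which a richer vocabulary can yield shorter rule sets.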
