Marina Dubova

One system for learning and remembering episodes and rules

Jul 08, 2024

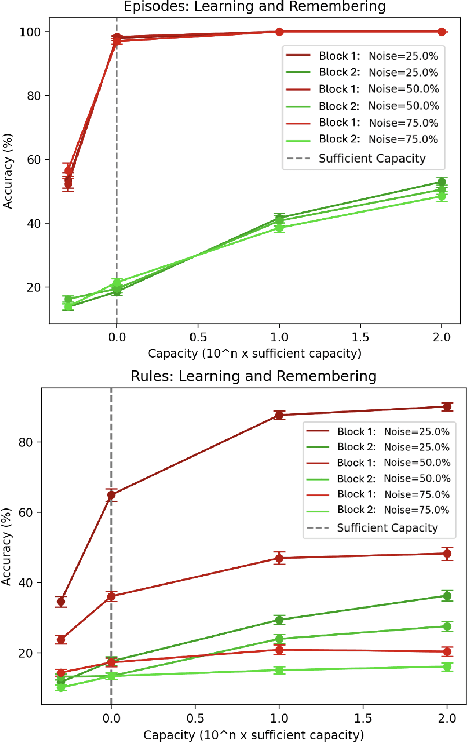

Abstract: Humans can learn individual episodes and generalizable rules and also successfully retain both kinds of acquired knowledge over time. In the cognitive science literature, (1) learning individual episodes and rules and (2) learning and remembering are often both conceptualized as competing processes that necessitate separate, complementary learning systems. Inspired by recent research in statistical learning, we challenge these trade-offs, hypothesizing that they arise from capacity limitations rather than from the inherent incompatibility of the underlying cognitive processes. Using an associative learning task, we show that one system with excess representational capacity can learn and remember both episodes and rules.

Building Human-like Communicative Intelligence: A Grounded Perspective

Jan 02, 2022

Abstract: Modern Artificial Intelligence (AI) systems excel at diverse tasks, from image classification to strategy games, even outperforming humans in many of these domains. After making astounding progress in language learning over the past decade, AI systems, however, seem to be approaching a ceiling that does not reflect important aspects of human communicative capacities. Unlike human learners, communicative AI systems often fail to systematically generalize to new data, suffer from sample inefficiency, fail to capture common-sense semantic knowledge, and do not translate to real-world communicative situations. Cognitive Science offers several insights on how AI could move forward from this point. This paper aims to: (1) suggest that the dominant cognitively-inspired AI directions, based on nativist and symbolic paradigms, lack the necessary substantiation and concreteness to guide progress in modern AI, and (2) articulate an alternative, "grounded", perspective on AI advancement, inspired by Embodied, Embedded, Extended, and Enactive Cognition (4E) research. I review results from 4E research lines in Cognitive Science to distinguish the main aspects of naturalistic learning conditions that play causal roles in human language development. I then use this analysis to propose a list of concrete, implementable components for building "grounded" linguistic intelligence. These components include embodying machines in a perception-action cycle, equipping agents with active exploration mechanisms so they can build their own curriculum, allowing agents to gradually develop motor abilities to promote piecemeal language development, and endowing the agents with adaptive feedback from their physical and social environment. I hope that these ideas can direct AI research towards building machines that develop human-like language abilities through their experiences with the world.

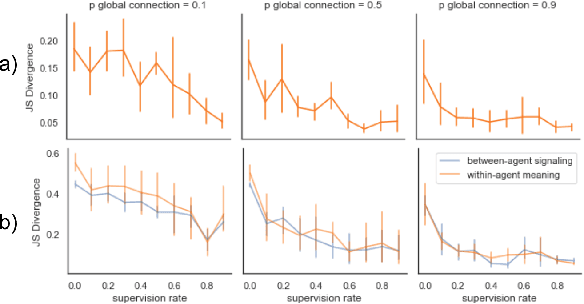

Reinforcement Communication Learning in Different Social Network Structures

Jul 19, 2020

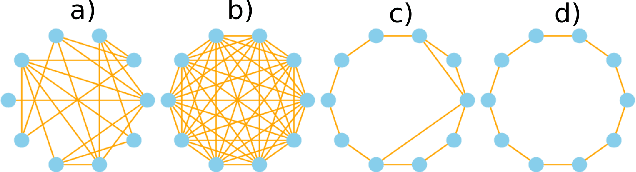

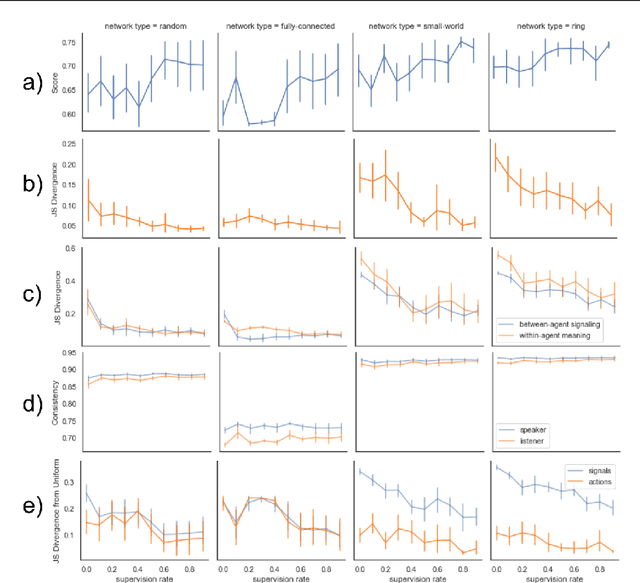

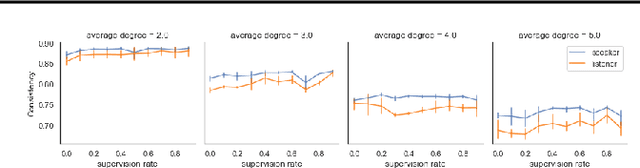

Abstract: Social network structure is one of the key determinants of human language evolution. Previous work has shown that the network of social interactions shapes decentralized learning in human groups, leading to the emergence of different kinds of communicative conventions. We examined the effects of social network organization on the properties of communication systems emerging in decentralized, multi-agent reinforcement learning communities. We found that the global connectivity of a social network drives the convergence of populations on shared and symmetric communication systems, preventing the agents from forming many local "dialects". Moreover, the agent's degree is inversely related to the consistency of its use of communicative conventions. These results show the importance of the basic properties of social network structure on reinforcement communication learning and suggest a new interpretation of findings on human convergence on word conventions.
