Marija Vella

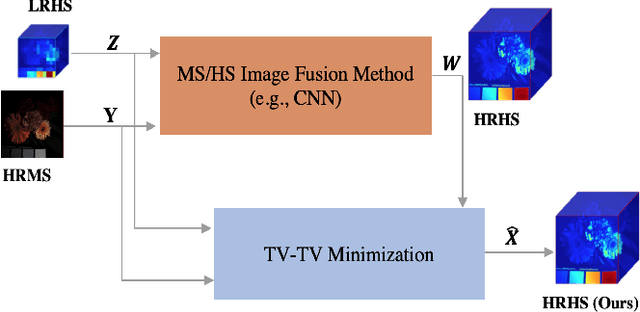

Enhanced Hyperspectral Image Super-Resolution via RGB Fusion and TV-TV Minimization

Jun 13, 2021

Abstract: Hyperspectral (HS) images contain detailed spectral information that has proven crucial in applications like remote sensing, surveillance, and astronomy. However, because of hardware limitations of HS cameras, the captured images have low spatial resolution. To improve them, the low-resolution hyperspectral images are fused with conventional high-resolution RGB images via a technique known as fusion-based HS image super-resolution. Currently, the best performance in this task is achieved by deep learning (DL) methods. Such methods, however, cannot guarantee that the input measurements are satisfied in the recovered image, since the parameters learned by the network are applied to every test image. Conversely, model-based algorithms can typically guarantee such measurement consistency. Inspired by these observations, we propose a framework that integrates learning-based and model-based methods. Experimental results show that our method produces images of superior spatial and spectral resolution compared to the current leading methods, whether model- or DL-based.

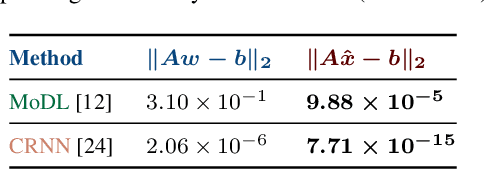

Overcoming Measurement Inconsistency in Deep Learning for Linear Inverse Problems: Applications in Medical Imaging

Nov 29, 2020

Abstract: The remarkable performance of deep neural networks (DNNs) currently makes them the method of choice for solving linear inverse problems. They have been applied to super-resolve and restore images, as well as to reconstruct MR and CT images. In these applications, DNNs invert a forward operator by finding, via training data, a map between the measurements and the input images. It is then expected that the map is still valid for the test data. This framework, however, introduces measurement inconsistency during testing. We show that such inconsistency, which can be critical in domains like medical imaging or defense, is intimately related to the generalization error. We then propose a framework that post-processes the output of DNNs with an optimization algorithm that enforces measurement consistency. Experiments on MR images show that enforcing measurement consistency via our method can lead to large gains in reconstruction performance.
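To make "enforcing measurement consistency" concrete for a linear inverse problem, the sketch below post-processes a stand-in network output `w` so that it satisfies `A x = b` exactly. It is not the optimization algorithm proposed in the paper: it substitutes a plain least-squares projection onto the set of measurement-consistent signals. The operator `A`, the sizes, and the noise level are all assumptions chosen for illustration.

```python
import numpy as np


def project_onto_measurements(w, A, b):
    """Return the point closest to w (in Euclidean norm) that satisfies A x = b.

    Assumes the system A x = b has at least one solution.
    """
    # Correct w by the minimum-norm update that removes the residual b - A w.
    return w + np.linalg.pinv(A) @ (b - A @ w)


rng = np.random.default_rng(0)

# Hypothetical forward operator: 4 measurements of a 10-dimensional signal.
A = rng.standard_normal((4, 10))
x_true = rng.standard_normal(10)
b = A @ x_true

# Stand-in for a DNN reconstruction: the true signal plus a small error.
w = x_true + 0.1 * rng.standard_normal(10)

x_hat = project_onto_measurements(w, A, b)

residual_before = np.linalg.norm(A @ w - b)
residual_after = np.linalg.norm(A @ x_hat - b)
```

Because the true signal itself lies in the consistent set, this projection cannot move the estimate farther from it, which gives some intuition for why a consistency step can help reconstruction quality.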

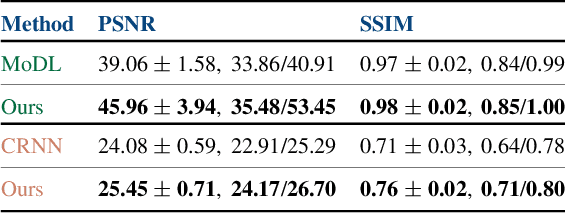

Robust Single-Image Super-Resolution via CNNs and TV-TV Minimization

Apr 02, 2020

Abstract: Single-image super-resolution is the process of increasing the resolution of an image, obtaining a high-resolution (HR) image from a low-resolution (LR) one. By leveraging large training datasets, convolutional neural networks (CNNs) currently achieve state-of-the-art performance in this task. Yet, during testing/deployment, they fail to enforce consistency between the HR and LR images: if we downsample the output HR image, it never matches its LR input. Based on this observation, we propose to post-process the CNN outputs with an optimization problem that we call TV-TV minimization, which enforces consistency. As our extensive experiments show, such post-processing not only improves the quality of the images, in terms of PSNR and SSIM, but also makes the super-resolution task robust to operator mismatch, i.e., when the true downsampling operator is different from the one used to create the training dataset.
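The consistency property described above can be checked numerically: downsample the HR estimate and compare it with the LR input. The following is a minimal sketch under stated assumptions. The downsampling operator is taken to be a simple block average (the paper's actual operator may differ), the "CNN output" is simulated by perturbing a random ground-truth image, and the function names are hypothetical.

```python
import numpy as np


def downsample(hr, factor):
    """Block-average downsampling (an assumed operator, chosen for simplicity)."""
    h, w = hr.shape
    if h % factor or w % factor:
        raise ValueError("image dimensions must be divisible by the factor")
    # Group pixels into factor x factor blocks and average each block.
    return hr.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))


def consistency_error(hr, lr, factor):
    """Euclidean norm of the mismatch between the downsampled HR image and the LR image."""
    return float(np.linalg.norm(downsample(hr, factor) - lr))


rng = np.random.default_rng(0)
truth = rng.random((8, 8))          # stand-in ground-truth HR image
lr = downsample(truth, 2)           # its LR observation

# Simulated CNN output: close to the truth, but not measurement-consistent.
cnn_output = truth + 0.05 * rng.standard_normal((8, 8))

err_truth = consistency_error(truth, lr, 2)
err_cnn = consistency_error(cnn_output, lr, 2)
```

Here `err_truth` is zero up to floating-point error, while `err_cnn` is clearly nonzero; a consistency-enforcing post-processing step aims to drive the latter to zero as well.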

Single Image Super-Resolution via CNN Architectures and TV-TV Minimization

Jul 11, 2019

Abstract: Super-resolution (SR) is a technique that allows increasing the resolution of a given image. Having applications in many areas, from medical imaging to consumer electronics, several SR methods have been proposed. Currently, the best-performing methods are based on convolutional neural networks (CNNs) and require extensive datasets for training. However, at test time, they fail to impose consistency between the super-resolved image and the given low-resolution image, a property that classic reconstruction-based algorithms naturally enforce in spite of having poorer performance. Motivated by this observation, we propose a new framework that joins both approaches and produces images of higher quality than any of the prior methods. Although our framework requires additional computation, our experiments on Set5, Set14, and BSD100 show that it systematically produces images with better peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) than the current state-of-the-art CNN architectures for SR.
