Maria Hartmann

Multi-objective methods in Federated Learning: A survey and taxonomy

Feb 05, 2025

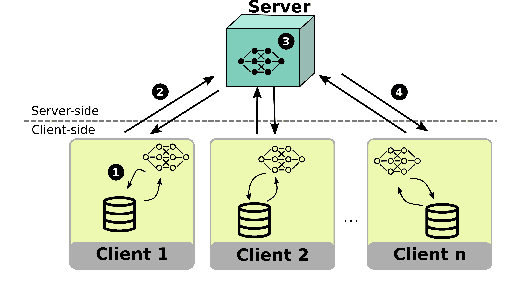

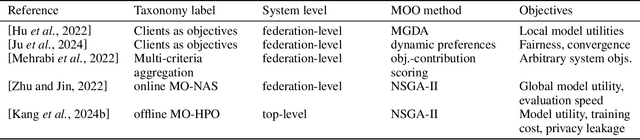

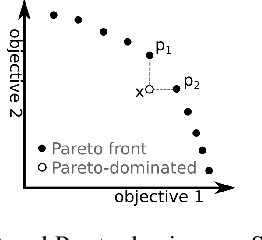

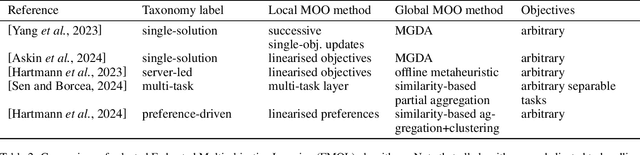

Abstract: The Federated Learning paradigm facilitates effective distributed machine learning in settings where training data is decentralized across multiple clients. As the popularity of the strategy grows, increasingly complex real-world problems emerge, many of which require balancing conflicting demands such as fairness, utility, and resource consumption. Recent works have begun to recognise the use of a multi-objective perspective in answer to this challenge. However, this novel approach of combining federated methods with multi-objective optimisation has never been discussed in the broader context of both fields. In this work, we offer a first clear and systematic overview of the different ways the two fields can be integrated. We propose a first taxonomy on the use of multi-objective methods in connection with Federated Learning, providing a targeted survey of the state-of-the-art and proposing unambiguous labels to categorise contributions. Given the developing nature of this field, our taxonomy is designed to provide a solid basis for further research, capturing existing works while anticipating future additions. Finally, we outline open challenges and possible directions for further research.

FedPref: Federated Learning Across Heterogeneous Multi-objective Preferences

Jan 23, 2025

Abstract: Federated Learning (FL) is a distributed machine learning strategy, developed for settings where training data is owned by distributed devices and cannot be shared. FL circumvents this constraint by distributing model training: each participant trains a local model on its own data. The parameters of these local models are shared intermittently among participants and aggregated to enhance model accuracy. This strategy has been rapidly adopted by industry in efforts to overcome privacy and resource constraints in model training. However, the application of FL to real-world settings brings additional challenges associated with heterogeneity between participants. Research into mitigating these difficulties in FL has largely focused on only two types of heterogeneity: the unbalanced distribution of training data, and differences in client resources. Yet more types of heterogeneity are becoming relevant as the capability of FL expands to cover more complex problems, from the tuning of LLMs to enabling machine learning on edge devices. In this work, we consider a novel type of heterogeneity that is likely to become increasingly relevant in future applications: preference heterogeneity, which emerges when clients learn under multiple objectives, with different importance assigned to each objective on different clients. We discuss the implications of this type of heterogeneity and propose FedPref, a first algorithm designed to facilitate personalised FL in this setting. We demonstrate the effectiveness of the algorithm across different problems, preference distributions and model architectures. In addition, we introduce a new analytical point of view, based on multi-objective metrics, for evaluating the performance of FL algorithms in this setting beyond the traditional client-focused metrics. We perform a second experimental analysis based on this view, and show that FedPref outperforms the compared algorithms.

Heterogeneity: An Open Challenge for Federated On-board Machine Learning

Aug 13, 2024

Abstract: The design of satellite missions is currently undergoing a paradigm shift from the historical approach of individualised monolithic satellites towards distributed mission configurations, consisting of multiple small satellites. With a rapidly growing number of such satellites now deployed in orbit, each collecting large amounts of data, interest in on-board orbital edge computing is rising. Federated Learning is a promising distributed computing approach in this context, allowing multiple satellites to collaborate efficiently in training on-board machine learning models. Though recent works on the use of Federated Learning in orbital edge computing have focused largely on homogeneous satellite constellations, Federated Learning could also be employed to allow heterogeneous satellites to form ad-hoc collaborations, e.g. in the case of communications satellites operated by different providers. Such an application presents additional challenges to the Federated Learning paradigm, arising largely from the heterogeneity of such a system. In this position paper, we offer a systematic review of these challenges in the context of the cross-provider use case, giving a brief overview of the state-of-the-art for each, and providing an entry point for deeper exploration of each issue.
