Mari Kobayashi

Beam-Space MIMO Radar for Joint Communication and Sensing with OTFS Modulation

Jul 12, 2022

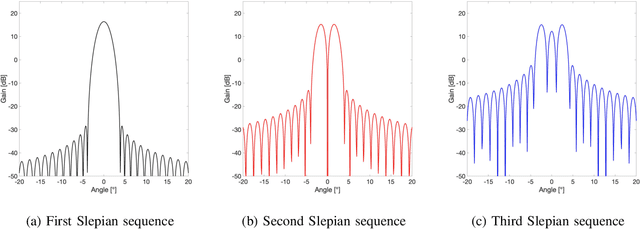

Abstract: Motivated by automotive applications, we consider joint radar sensing and data communication for a system operating at millimeter wave (mmWave) frequency bands, where a Base Station (BS) is equipped with a co-located radar receiver and sends data using the Orthogonal Time Frequency Space (OTFS) modulation format. We consider two distinct modes of operation. In Discovery mode, a single common data stream is broadcast over a wide angular sector, and the radar receiver must detect the presence of not-yet-acquired targets and perform coarse estimation of their parameters (angle of arrival, range, and velocity). In Tracking mode, the BS transmits multiple individual data streams to already acquired users via beamforming, while the radar receiver performs accurate estimation of the aforementioned parameters. Due to hardware complexity and power consumption constraints, we consider a hybrid digital-analog architecture in which the number of RF chains and A/D converters is significantly smaller than the number of antenna array elements. In this case, the conventional MIMO radar approach cannot be applied directly. Consequently, we advocate a beam-space approach in which the vector observation at the radar receiver is obtained through an RF-domain beamforming matrix that performs the dimensionality reduction from antennas to RF chains. Under this setup, we propose a likelihood-function-based scheme to perform joint target detection and parameter estimation in Discovery mode, and high-resolution parameter estimation in Tracking mode. Our numerical results demonstrate that the proposed approach is able to reliably detect multiple targets while closely approaching the Cramér-Rao Lower Bound (CRLB) of the corresponding parameter estimation problem.
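A minimal sketch of the beam-space observation idea, assuming a half-wavelength uniform linear array with 32 elements, 5 RF chains, and a hypothetical RF beamformer `U` built from orthogonal DFT beams around broadside (illustrative choices, not the paper's design). The 32-dimensional antenna-domain echo is reduced to 5 samples by `y = U^H x`, and a single target's angle is then recovered by a grid search over the single-target likelihood with an unknown complex gain. Range, velocity, OTFS processing, and multi-target detection are deliberately left out.

```python
import numpy as np

N_ANT = 32  # antenna elements (assumed)
N_RF = 5    # RF chains (assumed), N_RF << N_ANT

def steering(theta, n_ant=N_ANT):
    """Half-wavelength ULA array response for angle theta (radians)."""
    return np.exp(1j * np.pi * np.arange(n_ant) * np.sin(theta))

# Hypothetical RF beamformer: N_RF DFT beams near broadside.
# Beams with sin(phi_k) = 2k / N_ANT are mutually orthogonal, so U^H U = I.
k = np.arange(N_RF) - N_RF // 2
U = np.stack([steering(np.arcsin(2 * kk / N_ANT)) for kk in k], axis=1) / np.sqrt(N_ANT)

def beam_space_observation(theta, gain, noise_std, rng):
    """Antenna-domain echo plus white noise, reduced to N_RF samples by y = U^H x."""
    noise = noise_std * (rng.standard_normal(N_ANT) + 1j * rng.standard_normal(N_ANT)) / np.sqrt(2)
    x = gain * steering(theta) + noise
    return U.conj().T @ x

def ml_angle(y, grid):
    """Single-target ML angle with unknown complex gain:
    maximize |b^H y|^2 / ||b||^2, where b(theta) = U^H a(theta)."""
    B = U.conj().T @ np.stack([steering(t) for t in grid], axis=1)  # N_RF x len(grid)
    score = np.abs(B.conj().T @ y) ** 2 / np.sum(np.abs(B) ** 2, axis=0)
    return grid[np.argmax(score)]

rng = np.random.default_rng(0)
theta_true = np.deg2rad(3.0)
y = beam_space_observation(theta_true, gain=1.0, noise_std=0.1, rng=rng)
grid = np.deg2rad(np.linspace(-10.0, 10.0, 2001))
theta_hat = ml_angle(y, grid)
print(f"estimated angle: {np.rad2deg(theta_hat):.2f} deg")
```

Because the columns of `U` are orthonormal, the beam-space noise stays white, which is what makes the normalized correlation score the maximum-likelihood criterion in this sketch; with non-orthogonal beams the reduced noise would have to be whitened first.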

Simultaneous Communication and Tracking in Arbitrary Trajectories via Beam-Space Processing

Mar 29, 2022

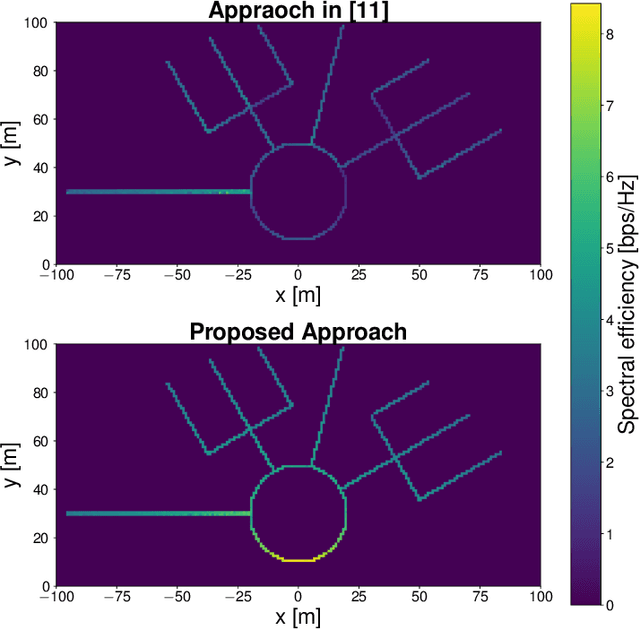

Abstract: In this paper, we develop a beam tracking scheme for an orthogonal frequency division multiplexing (OFDM) Integrated Sensing and Communication (ISAC) system with a hybrid digital-analog (HDA) architecture operating in the millimeter wave (mmWave) band. Our tracking method consists of an estimation step inspired by radar signal processing techniques and a prediction step based on simple kinematic equations. The hybrid architecture exploits the predicted state information to focus only on the directions of interest, trading off beamforming gain, hardware complexity, and multistream processing capabilities. Our extensive simulations over arbitrary trajectories show that the proposed method can outperform state-of-the-art beam tracking methods in terms of prediction accuracy and, consequently, achievable communication rate, and is fully capable of handling highly nonlinear dynamic motion patterns.
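The prediction step can be illustrated with a constant-velocity kinematic model. This is a minimal sketch assuming a 2-D geometry with the BS at the origin; `UserState` and the helper functions are hypothetical names, and the radar-based estimation step is reduced to converting an (angle, range) estimate into a position, with velocity obtained by differencing two consecutive positions (an assumption made for illustration, not the paper's estimator).

```python
import math
from dataclasses import dataclass

@dataclass
class UserState:
    x: float   # position [m], BS at the origin (assumed 2-D geometry)
    y: float
    vx: float  # velocity [m/s]
    vy: float

def position_from_estimate(angle: float, dist: float) -> tuple[float, float]:
    """Convert a radar (angle [rad], range [m]) estimate into Cartesian coordinates."""
    return dist * math.cos(angle), dist * math.sin(angle)

def state_from_two_estimates(p_prev, p_curr, dt: float) -> UserState:
    """Hypothetical velocity estimate by finite differences of two consecutive positions."""
    vx = (p_curr[0] - p_prev[0]) / dt
    vy = (p_curr[1] - p_prev[1]) / dt
    return UserState(p_curr[0], p_curr[1], vx, vy)

def predict(state: UserState, dt: float) -> UserState:
    """Constant-velocity prediction: p(t + dt) = p(t) + v(t) * dt."""
    return UserState(state.x + state.vx * dt, state.y + state.vy * dt, state.vx, state.vy)

def beam_direction(state: UserState) -> tuple[float, float]:
    """Angle [rad] and range [m] toward which the BS would point its next beam."""
    return math.atan2(state.y, state.x), math.hypot(state.x, state.y)

dt = 0.1  # frame interval [s] (assumed)
p0 = position_from_estimate(0.0, 10.0)                                      # user at (10, 0)
p1 = position_from_estimate(math.atan2(0.5, 10.0), math.hypot(10.0, 0.5))  # user at (10, 0.5)
state = state_from_two_estimates(p0, p1, dt)                                # velocity about (0, 5) m/s
theta_next, range_next = beam_direction(predict(state, dt))                 # predicted (10, 1.0)
print(f"next beam: {math.degrees(theta_next):.2f} deg, {range_next:.2f} m")
```

A constant-velocity model is the simplest kinematic choice; adding an acceleration term to `predict` is the natural extension when trajectories curve strongly between frames.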

Beam Refinement and User State Acquisition via Integrated Sensing and Communication with OFDM

Sep 21, 2021

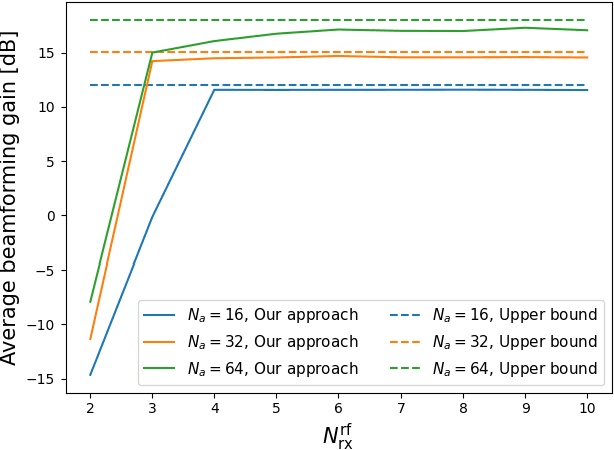

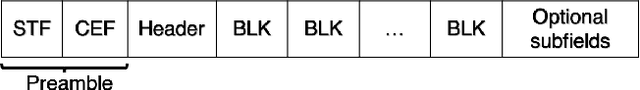

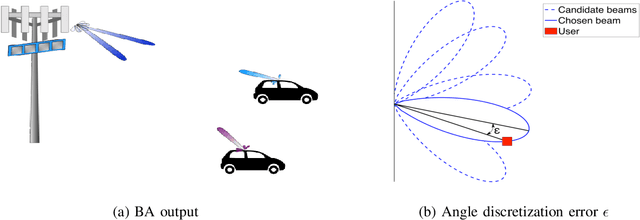

Abstract: The performance of millimeter wave (mmWave) communications strongly relies on accurate beamforming at both the base station and the user terminal, referred to as beam alignment (BA). Existing BA algorithms provide initial yet coarse angle estimates, as they typically use a codebook with a finite number of discretized beams (angles). Motivated by emerging applications that require timely and precise tracking of the dynamically changing states of users, we consider a system where a base station with a co-located radar receiver estimates relevant state parameters of users and simultaneously sends OFDM-modulated data symbols. In particular, based on a hybrid digital-analog data transmitter/radar receiver architecture, we propose a simple beam refinement and initial state acquisition scheme that can be used for beam and user location tracking in a dynamic environment. Numerical results inspired by IEEE 802.11ad parameters demonstrate that the proposed method significantly improves the communication rate and further achieves accurate state estimation.
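To make the codebook limitation concrete, a small sketch (assuming a 32-element half-wavelength ULA and a hypothetical DFT codebook of 32 beams uniformly spaced in sin θ) computes the normalized beamforming gain obtained with the best codebook beam instead of the exact user direction. The "refined" value simply evaluates the beam at the true angle, i.e. the upper bound a perfect refinement would reach; it is not the paper's refinement scheme.

```python
import numpy as np

N_ANT = 32  # assumed array size

def steering(theta):
    """Half-wavelength ULA response for angle theta (radians)."""
    return np.exp(1j * np.pi * np.arange(N_ANT) * np.sin(theta))

def gain(theta_true, theta_beam):
    """Normalized gain |a(beam)^H a(true)|^2 / N^2; 1.0 means perfect alignment."""
    return np.abs(np.vdot(steering(theta_beam), steering(theta_true))) ** 2 / N_ANT**2

# Hypothetical DFT codebook: N_ANT beams uniformly spaced in sin(theta) over [-1, 1)
codebook = np.arcsin(-1 + 2 * np.arange(N_ANT) / N_ANT)

theta_true = np.deg2rad(23.0)
gains = np.array([gain(theta_true, c) for c in codebook])
coarse_beam = codebook[np.argmax(gains)]
coarse_gain = gains.max()
print(f"coarse beam {np.rad2deg(coarse_beam):.2f} deg, gain {10 * np.log10(coarse_gain):.2f} dB")
print(f"refined beam gain {10 * np.log10(gain(theta_true, theta_true)):.2f} dB")
```

For this codebook the worst case sits halfway between two beams, where the normalized gain drops to roughly (2/π)^2, about -3.9 dB; that residual loss is what angle refinement beyond the codebook resolution tries to recover.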

Scalable Vector Gaussian Information Bottleneck

Feb 15, 2021

Abstract: In the context of statistical learning, the Information Bottleneck method seeks the right balance between accuracy and generalization capability through a suitable tradeoff between compression complexity, measured by minimum description length, and distortion, evaluated under the logarithmic loss measure. In this paper, we study a variation of the problem, called scalable information bottleneck, in which the encoder outputs multiple descriptions of the observation with increasingly richer features. The model, which is of successive-refinement type with degraded side information streams at the decoders, is motivated by application scenarios that require varying levels of accuracy depending on the allowed (or targeted) level of complexity. We establish an analytic characterization of the optimal relevance-complexity region for vector Gaussian sources. Then, we derive a variational-inference-type algorithm for general sources with unknown distribution and show how to parametrize it using neural networks. Finally, we provide experimental results on the MNIST dataset which illustrate that the proposed method generalizes better to data not seen during the training phase.
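As a much simpler reference point than the vector Gaussian scalable region, the classical single-description tradeoff for scalar jointly Gaussian (X, Y) with unit variances and correlation ρ has a closed form. With a Gaussian encoder T = X + Z, Z ~ N(0, σ²), one gets I(T;X) = ½ log(1 + 1/σ²) and I(T;Y) = -½ log(1 - ρ²/(1 + σ²)); eliminating σ² gives Δ(R) = -½ log(1 - ρ²(1 - e^(-2R))), with complexity R = I(T;X) and relevance Δ = I(T;Y) in nats. The sketch below evaluates both forms; the function names are hypothetical.

```python
import math

def relevance_from_complexity(r: float, rho: float) -> float:
    """Relevance I(T;Y) [nats] at complexity I(T;X) = r for scalar jointly Gaussian (X, Y)."""
    return -0.5 * math.log(1.0 - rho**2 * (1.0 - math.exp(-2.0 * r)))

def point_from_noise(sigma2: float, rho: float) -> tuple[float, float]:
    """(I(T;X), I(T;Y)) for T = X + Z with X, Y ~ N(0, 1), corr(X, Y) = rho, Z ~ N(0, sigma2)."""
    i_tx = 0.5 * math.log(1.0 + 1.0 / sigma2)
    i_ty = -0.5 * math.log(1.0 - rho**2 / (1.0 + sigma2))
    return i_tx, i_ty

rho = 0.9
for sigma2 in (10.0, 1.0, 0.1, 0.01):
    r, delta = point_from_noise(sigma2, rho)
    print(f"sigma2={sigma2:5.2f}  complexity={r:.3f}  relevance={delta:.3f}")
```

As the complexity grows, the relevance saturates at I(X;Y) = -½ log(1 - ρ²), so each additional nat of description length buys less and less relevance.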

On the Relevance-Complexity Region of Scalable Information Bottleneck

Nov 02, 2020

Abstract: The Information Bottleneck method is a learning technique that seeks the right balance between accuracy and generalization capability through a suitable tradeoff between compression complexity, measured by minimum description length, and distortion, evaluated under the logarithmic loss measure. In this paper, we study a variation of the problem, called scalable information bottleneck, in which the encoder outputs multiple descriptions of the observation with increasingly richer features. The problem at hand is motivated by application scenarios that require varying levels of accuracy depending on the allowed level of generalization. First, we establish explicit (analytic) characterizations of the relevance-complexity region for memoryless Gaussian sources and memoryless binary sources. Then, we derive a Blahut-Arimoto-type algorithm that allows us to compute (an approximation of) the region for general discrete sources. Finally, an application example to the pattern classification problem is provided along with numerical results.
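For discrete sources, the classical single-description IB is usually computed with the self-consistent iterative updates of Tishby, Pereira, and Bialek, which share the alternating structure of Blahut-Arimoto. A minimal sketch of that standard iteration follows, assuming a known joint pmf `p_xy`, a fixed number of clusters `n_t`, and a tradeoff parameter `beta`. It is not the paper's scalable multi-description algorithm, and all names are hypothetical.

```python
import numpy as np

def ib_iterate(p_xy, n_t, beta, n_iter=200, seed=0):
    """Standard single-description IB iteration (Blahut-Arimoto type).
    p_xy: joint pmf of shape (|X|, |Y|) with p(x) > 0. Returns encoder p(t|x), shape (|X|, n_t)."""
    rng = np.random.default_rng(seed)
    eps = 1e-12
    p_x = p_xy.sum(axis=1)
    p_y_given_x = p_xy / p_x[:, None]
    enc = rng.random((p_xy.shape[0], n_t))
    enc /= enc.sum(axis=1, keepdims=True)
    for _ in range(n_iter):
        p_t = p_x @ enc                                          # p(t)
        p_ty = (enc * p_x[:, None]).T @ p_y_given_x              # p(t, y)
        p_y_given_t = p_ty / (p_t[:, None] + eps)                # p(y|t)
        # KL(p(y|x) || p(y|t)) for every pair (x, t)
        kl = np.sum(p_y_given_x[:, None, :]
                    * (np.log(p_y_given_x[:, None, :] + eps) - np.log(p_y_given_t[None, :, :] + eps)),
                    axis=2)
        # p(t|x) proportional to p(t) exp(-beta * KL), computed stably in the log domain
        logits = np.log(p_t + eps)[None, :] - beta * kl
        logits -= logits.max(axis=1, keepdims=True)
        enc = np.exp(logits)
        enc /= enc.sum(axis=1, keepdims=True)
    return enc

def mutual_info(p_ab):
    """Mutual information [nats] of a joint pmf given as a 2-D array."""
    p_a = p_ab.sum(axis=1, keepdims=True)
    p_b = p_ab.sum(axis=0, keepdims=True)
    mask = p_ab > 0
    return float(np.sum(p_ab[mask] * np.log(p_ab[mask] / (p_a @ p_b)[mask])))

# Toy source: X uniform on 4 symbols, binary label Y
p_xy = 0.25 * np.array([[0.1, 0.9], [0.2, 0.8], [0.8, 0.2], [0.9, 0.1]])
enc = ib_iterate(p_xy, n_t=2, beta=5.0)
p_xt = enc * p_xy.sum(axis=1)[:, None]
p_ty = p_xt.T @ (p_xy / p_xy.sum(axis=1, keepdims=True))
i_tx, i_ty, i_xy = mutual_info(p_xt), mutual_info(p_ty), mutual_info(p_xy)
print(f"I(T;X)={i_tx:.3f}  I(T;Y)={i_ty:.3f}  I(X;Y)={i_xy:.3f}")
```

Sweeping `beta` from small to large traces an approximation of the relevance-complexity curve; for any encoder, the data processing inequality guarantees I(T;Y) ≤ I(T;X) and I(T;Y) ≤ I(X;Y).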
