Marcus Comiter

StitchNet: Composing Neural Networks from Pre-Trained Fragments

Jan 05, 2023

Abstract: We propose StitchNet, a novel neural network creation paradigm that stitches together fragments (one or more consecutive network layers) from multiple pre-trained neural networks. StitchNet allows the creation of high-performing neural networks without the large compute and data requirements needed under traditional model creation processes via backpropagation training. We leverage Centered Kernel Alignment (CKA) as a compatibility measure to efficiently guide the selection of these fragments in composing a network for a given task tailored to specific accuracy needs and computing resource constraints. We then show that these fragments can be stitched together to create neural networks with comparable accuracy to traditionally trained networks at a fraction of the computing resource and data requirements. Finally, we explore a novel on-the-fly personalized model creation and inference application enabled by this new paradigm.
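The abstract names CKA as the compatibility measure but does not spell out how it is computed. As a rough illustration, the sketch below implements the widely used linear form of CKA between two activation matrices recorded on the same inputs. It is not taken from the StitchNet paper: the function names (`center_columns`, `cross_frobenius_sq`, `linear_cka`) are hypothetical, and whether StitchNet uses the linear or a kernel variant is an assumption here.

```python
import math

def center_columns(m):
    """Subtract each column's mean so every feature has zero mean."""
    n = len(m)
    means = [sum(col) / n for col in zip(*m)]
    return [[v - mu for v, mu in zip(row, means)] for row in m]

def cross_frobenius_sq(a, b):
    """Squared Frobenius norm of a^T b for row-major matrices a and b."""
    total = 0.0
    for col_a in zip(*a):
        for col_b in zip(*b):
            dot = sum(p * q for p, q in zip(col_a, col_b))
            total += dot * dot
    return total

def linear_cka(x, y):
    """Linear CKA between activations x (n x p) and y (n x q).

    Rows are the same n inputs passed through two different layers.
    Returns a similarity score, or 0.0 if either side has no variance.
    """
    xc, yc = center_columns(x), center_columns(y)
    numerator = cross_frobenius_sq(yc, xc)
    denominator = math.sqrt(
        cross_frobenius_sq(xc, xc) * cross_frobenius_sq(yc, yc)
    )
    return numerator / denominator if denominator > 0 else 0.0

# Hypothetical activations: three inputs, two features each.
acts_a = [[1.0, 2.0], [3.0, 4.0], [5.0, 7.0]]
# Same activations rotated by 90 degrees and scaled by 2.
acts_b = [[-2.0 * b, 2.0 * a] for a, b in acts_a]
score = linear_cka(acts_a, acts_b)
```

Linear CKA is invariant to orthogonal transformations and isotropic scaling of the features, so the rotated and rescaled copy above scores as fully similar to the original. Under the assumption made here, a higher score between the output of one fragment and the expected input of another would suggest the pair is a better stitching candidate.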

CheckNet: Secure Inference on Untrusted Devices

Jun 17, 2019

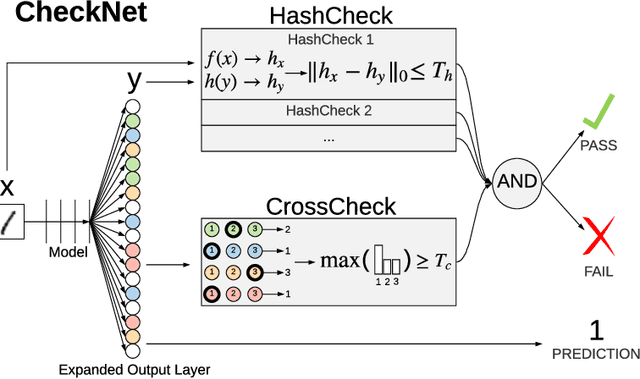

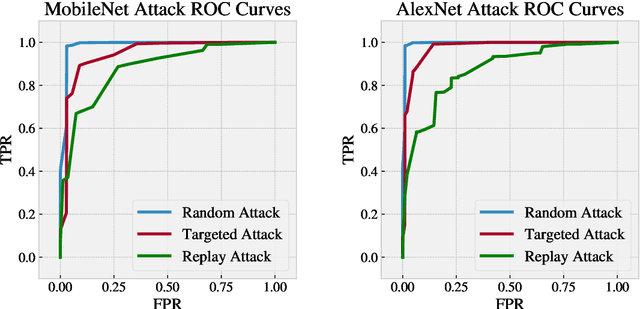

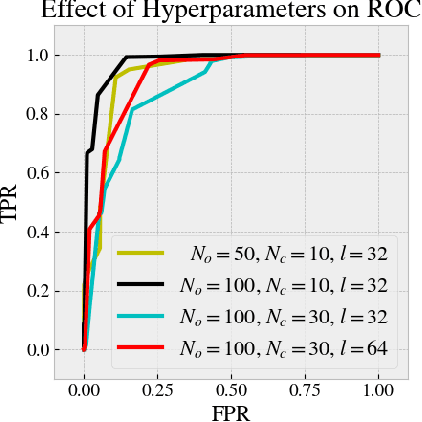

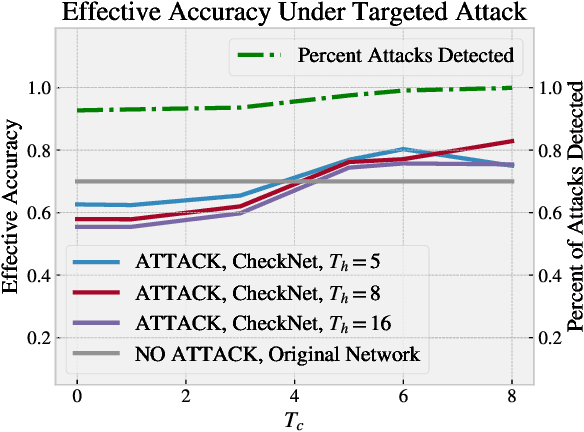

Abstract: We introduce CheckNet, a method for secure inference with deep neural networks on untrusted devices. CheckNet is like a checksum for neural network inference: it verifies the integrity of the inference computation performed by untrusted devices to 1) ensure the inference has actually been performed, and 2) ensure the inference has not been manipulated by an attacker. CheckNet is completely transparent to the third party running the computation, applicable to all types of neural networks, does not require specialized hardware, adds little overhead, and has negligible impact on model performance. CheckNet can be configured to provide different levels of security depending on application needs and compute/communication budgets. We present both empirical and theoretical validation of CheckNet on multiple popular deep neural network models, showing excellent attack detection (0.88-0.99 AUC) and attack success bounds.
