Marco Zuniga

Passive Screen-to-Camera Communication

Mar 24, 2024

Abstract: A recent technology known as transparent screens is transforming windows into displays. These smart windows are present in buses, airports and offices. They can remain transparent, as a normal window, or display relevant information that overlays their panoramic views. In this paper, we propose transforming these windows not only into screens but also into wireless transmitters. To achieve this goal, we build upon the research area of screen-to-camera communication. In this area, videos are modified in a way that smartphone cameras can decode data out of them, while this data remains invisible to the viewers. A person sees a normal video, but the camera sees the video plus additional information. In this communication method, one of the biggest disadvantages is the traditional screens' power consumption, more than 80% of which is used to generate light. To solve this, we employ novel transparent screens relying on ambient light to display pictures, hence eliminating the power source. However, this comes at the cost of a lower image quality, since they use variable and out-of-control environment light, instead of generating a constant and strong light by LED panels. Our work, dubbed PassiveCam, overcomes the challenge of creating the first screen-to-camera communication link using passive displays. This paper presents two main contributions. First, we analyze and modify existing screens and encoding methods to embed information reliably in ambient light. Second, we develop an Android App that optimizes the decoding process, obtaining a real-time performance. Our evaluation, which considers a musical application, shows a Packet Success Rate (PSR) of close to 90%. In addition, our real-time application achieves response times of 530 ms and 1071 ms when the camera is static and when it is hand-held, respectively.

CardioID: Mitigating the Effects of Irregular Cardiac Signals for Biometric Identification

Mar 30, 2022

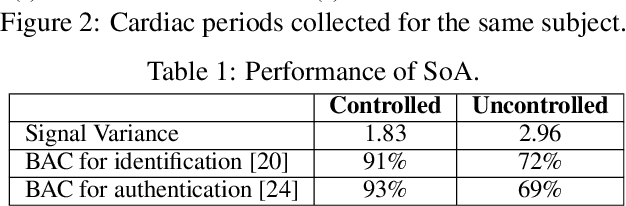

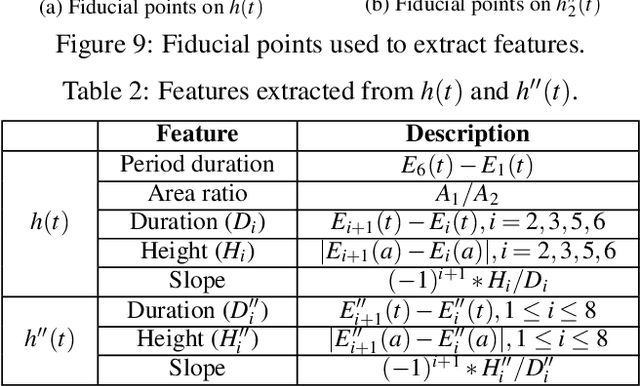

Abstract: Cardiac patterns are being used to obtain hard-to-forge biometric signatures and have led to high accuracy in state-of-the-art (SoA) identification applications. However, this performance is obtained under controlled scenarios where cardiac signals maintain a relatively uniform pattern, facilitating the identification process. In this work, we analyze cardiac signals collected in more realistic (uncontrolled) scenarios and show that their high signal variability (i.e., irregularity) makes it harder to obtain stable and distinct user features. Furthermore, SoA usually fails to identify specific groups of users, rendering existing identification methods futile in uncontrolled scenarios. To solve these problems, we propose a framework with three novel properties. First, we design an adaptive method that achieves stable and distinct features by tailoring the filtering spectrum to each user. Second, we show that users can have multiple cardiac morphologies, offering us a much bigger pool of cardiac signals and users compared to SoA. Third, we overcome other distortion effects present in authentication applications with a multi-cluster approach and the Mahalanobis distance. Our evaluation shows that the average balanced accuracy (BAC) of SoA drops from above 90% in controlled scenarios to 75% in uncontrolled ones, while our method maintains an average BAC above 90% in uncontrolled scenarios.
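The abstract reports balanced accuracy (BAC) without defining it. As a rough illustration only, the sketch below assumes the common binary definition (the mean of the true-positive rate and the true-negative rate); the paper may compute it differently, for example averaged per user. The function name and the sample labels are hypothetical and are not taken from the paper.

```python
def balanced_accuracy(y_true, y_pred):
    """Mean of the true-positive rate and the true-negative rate (binary labels)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    tn = sum(1 for t, p in zip(y_true, y_pred) if not t and not p)
    pos = sum(1 for t in y_true if t)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative sample")
    return 0.5 * (tp / pos + tn / neg)


# Hypothetical example: 2 genuine attempts (label 1) and 8 impostor attempts (label 0).
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

plain_accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(plain_accuracy)                     # 0.9
print(balanced_accuracy(y_true, y_pred))  # 0.75
```

With imbalanced classes, plain accuracy can look high even when half of the genuine attempts are rejected, which is one reason a balanced metric is often preferred in authentication settings.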
