Marcin Korecki

LLM Voting: Human Choices and AI Collective Decision Making

Jan 31, 2024

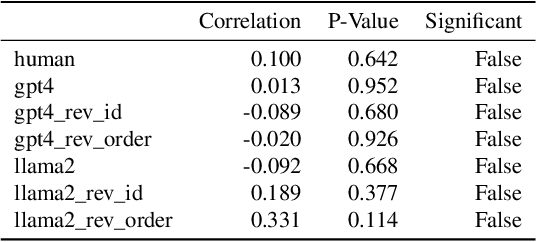

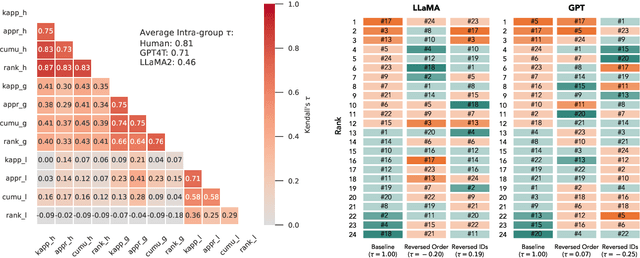

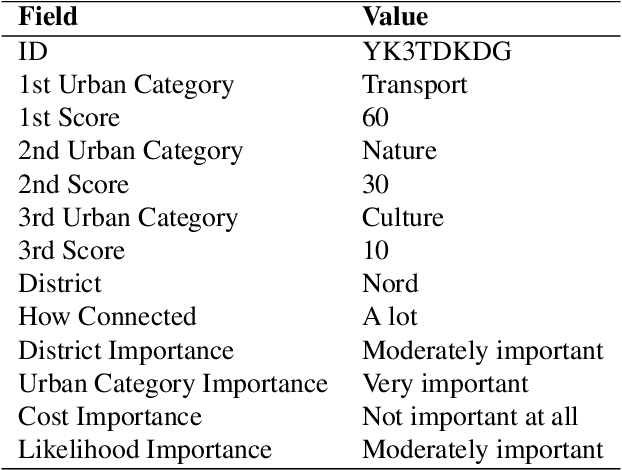

Abstract: This paper investigates the voting behaviors of Large Language Models (LLMs), particularly OpenAI's GPT4 and LLaMA2, and their alignment with human voting patterns. Our approach included a human voting experiment to establish a baseline for human preferences and a parallel experiment with LLM agents. The study focused on both collective outcomes and individual preferences, revealing differences in decision-making and inherent biases between humans and LLMs. We observed a trade-off between preference diversity and alignment in LLMs, with a tendency towards more uniform choices as compared to the diverse preferences of human voters. This finding indicates that LLMs could lead to more homogenized collective outcomes when used in voting assistance, underscoring the need for cautious integration of LLMs into democratic processes.

Biospheric AI

Jan 31, 2024

Abstract: The dominant paradigm in AI ethics and value alignment is highly anthropocentric. The focus of these disciplines is strictly on human values which limits the depth and breadth of their insights. Recently, attempts to expand to a sentientist perspective have been initiated. We argue that neither of these outlooks is sufficient to capture the actual complexity of the biosphere and ensure that AI does not damage it. Thus, we propose a new paradigm -- Biospheric AI that assumes an ecocentric perspective. We discuss hypothetical ways in which such an AI might be designed. Moreover, we give directions for research and application of the modern AI models that would be consistent with the biospheric interests. All in all, this work attempts to take first steps towards a comprehensive program of research that focuses on the interactions between AI and the biosphere.

Dynamic value alignment through preference aggregation of multiple objectives

Oct 09, 2023

Abstract: The development of ethical AI systems is currently geared toward setting objective functions that align with human objectives. However, finding such functions remains a research challenge, while in RL, setting rewards by hand is a fairly standard approach. We present a methodology for dynamic value alignment, where the values that are to be aligned with are dynamically changing, using a multiple-objective approach. We apply this approach to extend Deep $Q$-Learning to accommodate multiple objectives and evaluate this method on a simplified two-leg intersection controlled by a switching agent. Our approach dynamically accommodates the preferences of drivers on the system and achieves better overall performance across three metrics (speeds, stops, and waits) while integrating objectives that have competing or conflicting actions.
