Marcell Wolnitza

3D object reconstruction and 6D-pose estimation from 2D shape for robotic grasping of objects

Mar 02, 2022

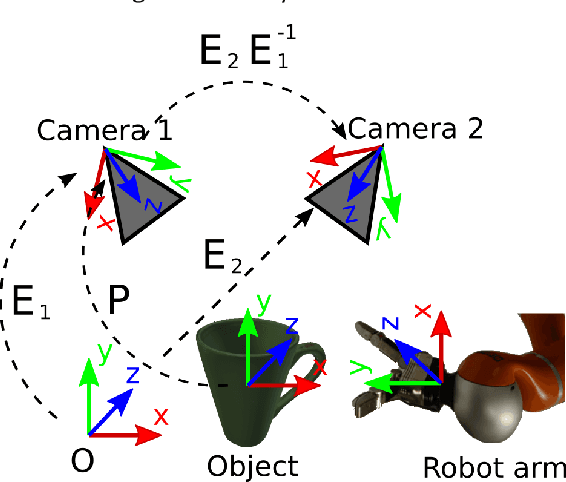

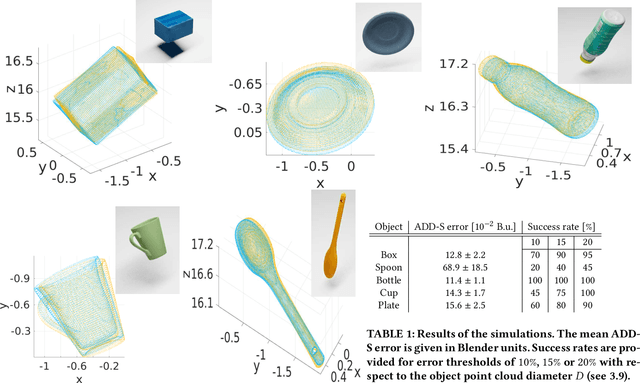

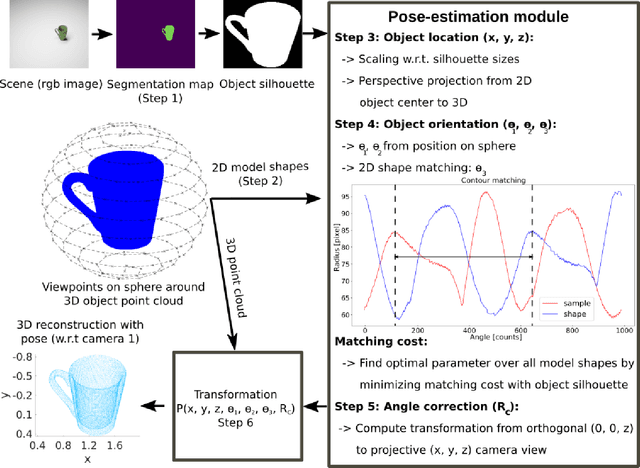

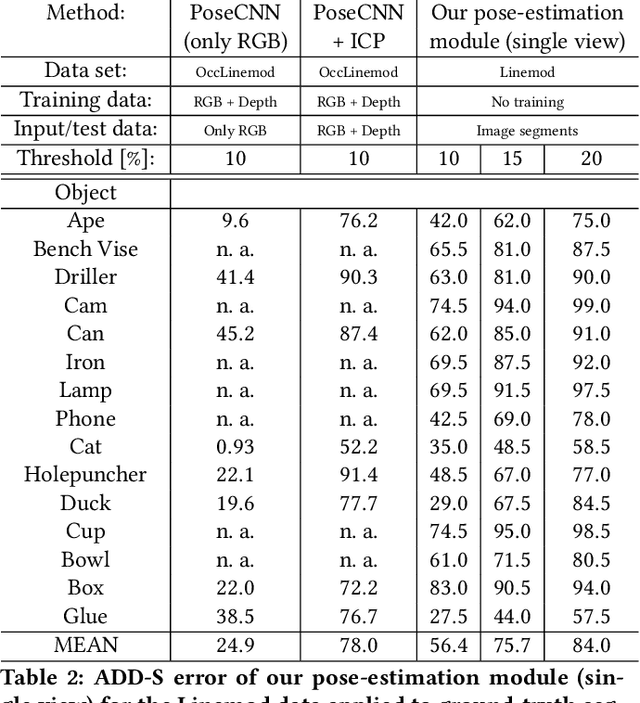

Abstract: We propose a method for 3D object reconstruction and 6D-pose estimation from 2D images that uses knowledge about object shape as the primary key. In the proposed pipeline, recognition and labeling of objects in 2D images deliver 2D segment silhouettes that are compared with the 2D silhouettes of projections obtained from various views of a 3D model representing the recognized object class. By computing transformation parameters directly from the 2D images, the number of free parameters required during the registration process is reduced, making the approach feasible. Furthermore, 3D transformations and projective geometry are employed to arrive at a full 3D reconstruction of the object in camera space using a calibrated setup. Inclusion of a second camera allows resolving remaining ambiguities. The method is quantitatively evaluated using synthetic data and tested with real data, and additional results for the well-known Linemod data set are shown. In robot experiments, successful grasping of objects demonstrates its usability in real-world environments, and, where possible, a comparison with other methods is provided. The method is applicable to scenarios where 3D object models, e.g., CAD models or point clouds, are available and precise pixel-wise segmentation maps of 2D images can be obtained. Unlike other methods, the method does not use 3D depth for training, widening the domain of application.
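The silhouette comparison described in the abstract can be illustrated with a small sketch. It is not the authors' implementation: the abstract does not name a similarity measure or say which 2D parameters are extracted, so intersection-over-union, centroid translation, and area-based scale are assumptions chosen for illustration, and all function names (`rect_mask`, `silhouette_params`, `iou`, `relative_2d_transform`) are hypothetical.

```python
from math import sqrt


def rect_mask(height, width, top, left, h, w):
    """Toy binary silhouette: an h x w rectangle inside a height x width image."""
    return [[1 if top <= r < top + h and left <= c < left + w else 0
             for c in range(width)] for r in range(height)]


def silhouette_params(mask):
    """Centroid (row, col) and pixel area of a binary mask given as a list of rows."""
    pixels = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    area = len(pixels)
    if area == 0:
        raise ValueError("empty silhouette")
    centroid_row = sum(r for r, _ in pixels) / area
    centroid_col = sum(c for _, c in pixels) / area
    return centroid_row, centroid_col, area


def iou(mask_a, mask_b):
    """Intersection-over-union of two equally sized binary masks (assumed metric)."""
    inter = union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            inter += 1 if (a and b) else 0
            union += 1 if (a or b) else 0
    return inter / union if union else 0.0


def relative_2d_transform(observed, rendered):
    """Translation (rows, cols) and isotropic scale that map the rendered
    silhouette onto the observed one, read directly from the 2D masks."""
    obs_r, obs_c, obs_area = silhouette_params(observed)
    ren_r, ren_c, ren_area = silhouette_params(rendered)
    return (obs_r - ren_r, obs_c - ren_c), sqrt(obs_area / ren_area)


observed = rect_mask(8, 8, top=2, left=3, h=4, w=4)   # segmented object
rendered = rect_mask(8, 8, top=0, left=0, h=2, w=2)   # projection of the 3D model
translation, scale = relative_2d_transform(observed, rendered)
```

In this toy case the translation and scale are recovered from the masks alone, which leaves fewer parameters to search over when comparing the observed silhouette with projections from different views of the model.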

Feature selection of neural networks is skewed towards the less abstract cue

Aug 08, 2019

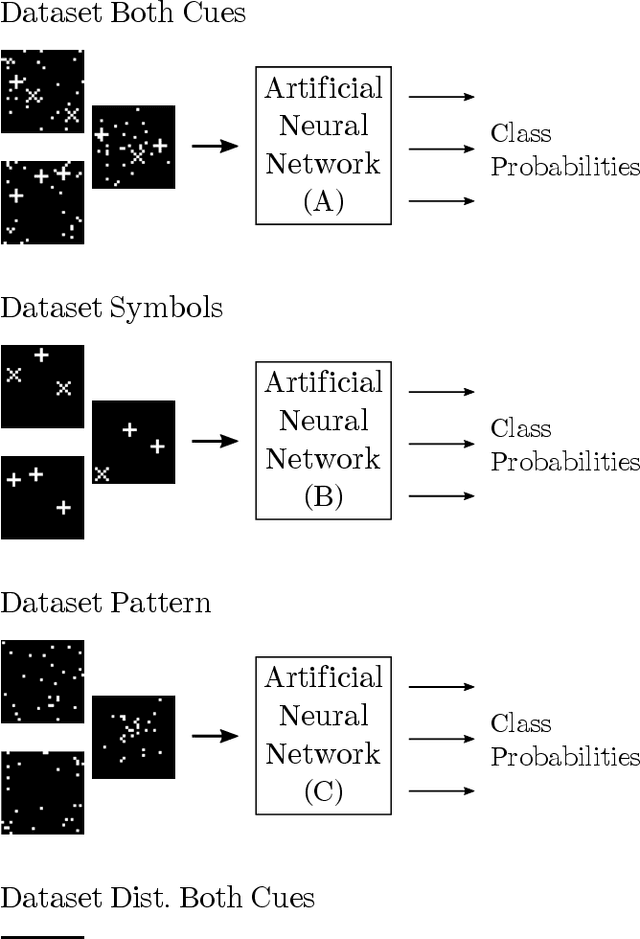

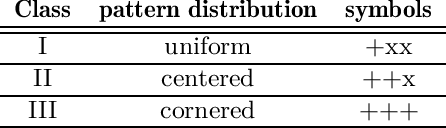

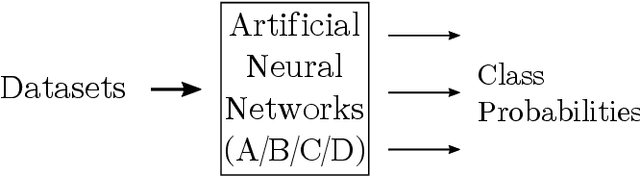

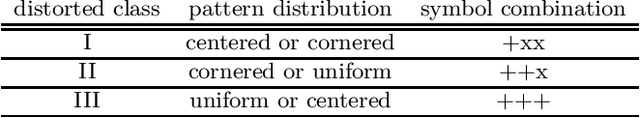

Abstract: Artificial neural networks (ANNs) have become an important tool for image classification with many applications in research and industry. However, it remains largely unknown how relevant image features are selected and how data properties affect this process. In particular, we are interested in whether the abstraction level of image cues correlating with class membership influences feature selection. We perform experiments with binary images that contain a combination of cues representing two different levels of abstraction: one is a pattern drawn from a random distribution where class membership correlates with the statistics of the pattern, the other a combination of symbol-like entities, where the symbolic code correlates with class membership. When the network is trained with data in which both cues are equally significant, we observe that the cue at the lower abstraction level, i.e., the pattern, is learned, while the symbolic information is largely ignored, even in networks with many layers. Symbol-like entities are only learned if the importance of low-level cues is reduced compared to the high-level ones. These findings raise important questions about the relevance of features that are learned by deep ANNs and how learning could be shifted towards symbolic features.
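To make the two-cue setup concrete, the following is a rough sketch of how a binary image with a low-level statistical cue and a symbol-like cue might be generated. The abstract does not specify the image size, the pattern distribution, or the symbols, so everything here is an assumption: the pattern cue is modeled as pixel density, the symbolic cue is simplified to a single 3x3 glyph stamped in the top-left corner, and `SYMBOLS` and `make_image` are hypothetical names.

```python
import random

# Hypothetical 3x3 glyphs standing in for the paper's symbol-like entities.
SYMBOLS = {
    0: [(0, 1), (1, 0), (1, 1), (1, 2), (2, 1)],  # plus sign
    1: [(0, 0), (0, 2), (1, 1), (2, 0), (2, 2)],  # diagonal cross
}


def make_image(pattern_class, symbol_class, size=16, densities=(0.2, 0.4), rng=None):
    """Binary image carrying two independent class cues.

    Low-level cue: the density of random 'on' pixels depends on pattern_class.
    High-level cue: which glyph is stamped depends on symbol_class.
    """
    rng = rng or random.Random()
    p = densities[pattern_class]
    img = [[1 if rng.random() < p else 0 for _ in range(size)] for _ in range(size)]
    # Clear the corner patch so the glyph is not corrupted by the random pattern.
    for r in range(3):
        for c in range(3):
            img[r][c] = 0
    for r, c in SYMBOLS[symbol_class]:
        img[r][c] = 1
    return img


# Both cues agree with the label here; setting them independently would let one
# probe which cue a trained classifier actually relies on.
img_plus = make_image(pattern_class=1, symbol_class=0, rng=random.Random(0))
img_cross = make_image(pattern_class=1, symbol_class=1, rng=random.Random(0))
```

Passing a seeded `random.Random` keeps the background pattern reproducible, so two images can differ in the symbolic cue only.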
