Marc Pierrot-Deseilligny

Multi-view dense image matching with similarity learning and geometry priors

May 16, 2025

Abstract: We introduce MV-DeepSimNets, a comprehensive suite of deep neural networks designed for multi-view similarity learning, leveraging epipolar geometry for training. Our approach incorporates an online geometry prior to characterize pixel relationships, either along the epipolar line or through homography rectification. This enables the generation of geometry-aware features from native images, which are then projected across candidate depth hypotheses using plane sweeping. Our method's geometric preconditioning effectively adapts epipolar-based features for enhanced multi-view reconstruction, without requiring the laborious creation of multi-view training datasets. By aggregating learned similarities, we construct and regularize the cost volume, leading to improved multi-view surface reconstruction over traditional dense matching approaches. MV-DeepSimNets demonstrates superior performance against leading similarity learning networks and end-to-end regression models, especially in terms of generalization capabilities across both aerial and satellite imagery with varied ground sampling distances. Our pipeline is integrated into the MicMac software and can be readily adopted in standard multi-resolution image matching pipelines.

An evaluation of Deep Learning based stereo dense matching dataset shift from aerial images and a large scale stereo dataset

Feb 19, 2024

Abstract: Dense matching is crucial for 3D scene reconstruction since it enables the recovery of scene 3D geometry from image acquisition. Deep Learning (DL)-based methods have shown effectiveness in the special case of epipolar stereo disparity estimation in the computer vision community. DL-based methods depend heavily on the quality and quantity of training datasets. However, generating ground-truth disparity maps for real scenes remains a challenging task in the photogrammetry community. To address this challenge, we propose a method for generating ground-truth disparity maps directly from Light Detection and Ranging (LiDAR) and images to produce a large and diverse dataset for six aerial datasets across four different areas and two areas with different-resolution images. We also introduce a LiDAR-to-image co-registration refinement to the framework that takes special precautions regarding occlusions and refrains from disparity interpolation to avoid precision loss. We evaluate 11 dense matching methods across datasets with diverse scene types, image resolutions, and geometric configurations, and investigate them in depth with respect to dataset shift. GANet performs best when the training and testing data are identical, while PSMNet shows robustness across different datasets. We also propose the best strategy for training with a limited dataset. We will also provide the dataset and trained models; more information can be found at https://github.com/whuwuteng/Aerial_Stereo_Dataset.
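
As a rough illustration of how a single LiDAR point can yield a ground-truth disparity in an epipolar pair, the sketch below projects one 3D point into two idealized pinhole cameras that share the same orientation and differ only by a horizontal baseline. The function names, the camera model, and the numeric values are assumptions made for illustration; they are not taken from the paper, whose framework additionally handles occlusions and co-registration refinement.

```python
def project_column(point, focal_px, cx=0.0, cam_x=0.0):
    """Column coordinate of a 3D point in an idealized pinhole camera.

    The camera looks along +Z and its center is shifted by cam_x along X.
    This simplified model is an assumption, not the paper's sensor model.
    """
    x, _, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    return focal_px * (x - cam_x) / z + cx


def disparity_from_point(point, focal_px, baseline):
    """Disparity (left column minus right column) of one 3D point.

    In a rectified pair both projections share the same image row,
    so only the column difference matters.
    """
    u_left = project_column(point, focal_px, cam_x=0.0)
    u_right = project_column(point, focal_px, cam_x=baseline)
    return u_left - u_right


# Hypothetical values: 1000 px focal length, 2 m baseline, point 100 m away.
d = disparity_from_point((10.0, 5.0, 100.0), focal_px=1000.0, baseline=2.0)
print(d)
```

Under this simplified model the result equals focal length times baseline divided by depth, so closer points produce larger disparities.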

DeepSim-Nets: Deep Similarity Networks for Stereo Image Matching

Apr 17, 2023

Abstract: We present three multi-scale similarity learning architectures, or DeepSim networks. These models learn pixel-level matching with a contrastive loss and are agnostic to the geometry of the considered scene. We establish a middle ground between hybrid and end-to-end approaches by learning to densely allocate all corresponding pixels of an epipolar pair at once. Our features are learnt on large image tiles to be expressive and capture the scene's wider context. We also demonstrate that curated sample mining can enhance the overall robustness of the predicted similarities and improve the performance on radiometrically homogeneous areas. We run experiments on aerial and satellite datasets. Our DeepSim-Nets outperform the baseline hybrid approaches and generalize better to unseen scene geometries than end-to-end methods. Our flexible architecture can be readily adopted in standard multi-resolution image matching pipelines.
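
To make the idea of learning pixel-level similarity with a contrastive objective more concrete, here is a minimal sketch of a generic margin-based (triplet-style) loss on cosine similarities between feature vectors. It is not the loss used in the paper, whose exact formulation is not given in the abstract; the function names, the margin value, and the toy feature vectors are all assumptions.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


def margin_contrastive_loss(anchor, positive, negative, margin=0.3):
    """Generic margin loss: zero once the matching pixel's similarity
    exceeds the non-matching pixel's similarity by at least `margin`."""
    s_pos = cosine_similarity(anchor, positive)
    s_neg = cosine_similarity(anchor, negative)
    return max(0.0, margin - (s_pos - s_neg))


# Toy 2D features (hypothetical): the positive matches the anchor exactly.
well_separated = margin_contrastive_loss([1.0, 0.0], [1.0, 0.0], [0.0, 1.0])
confused = margin_contrastive_loss([1.0, 0.0], [0.0, 1.0], [1.0, 0.0])
print(well_separated, confused)
```

The loss vanishes when the true match is already clearly more similar than the wrong candidate, and it grows when the network ranks the wrong candidate higher.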

Pointless Global Bundle Adjustment With Relative Motions Hessians

Apr 11, 2023

Abstract: Bundle adjustment (BA) is the standard way to optimise camera poses and to produce sparse representations of a scene. However, as the number of camera poses and features grows, refinement through bundle adjustment becomes inefficient. Inspired by global motion averaging methods, we propose a new bundle adjustment objective which does not rely on image features' reprojection errors yet maintains precision on par with classical BA. Our method averages over relative motions while implicitly incorporating the contribution of the structure in the adjustment. To that end, we weight the objective function by local Hessian matrices, a by-product of local bundle adjustments performed on relative motions (e.g., pairs or triplets) during the pose initialisation step. Such Hessians are extremely rich as they encapsulate both the features' random errors and the geometric configuration between the cameras. These pieces of information, propagated to the global frame, help to guide the final optimisation in a more rigorous way. We argue that this approach is an upgraded version of the motion averaging approach and demonstrate its effectiveness on both photogrammetric datasets and computer vision benchmarks.

Feature matching for multi-epoch historical aerial images

Dec 08, 2021

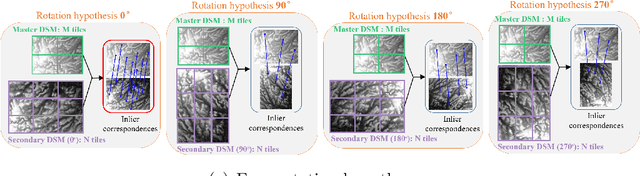

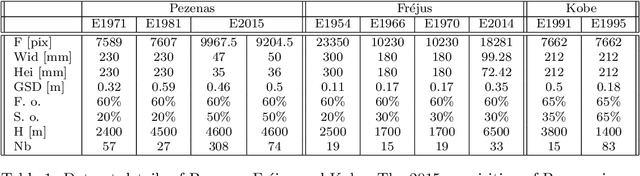

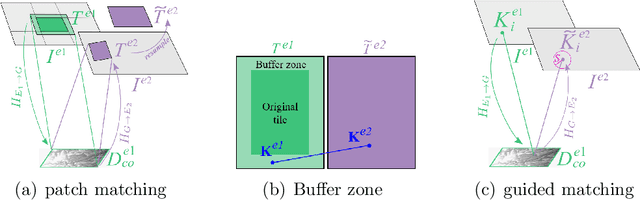

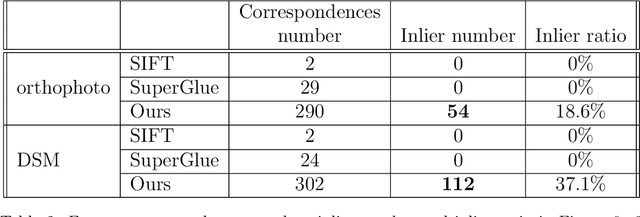

Abstract: Historical imagery is characterized by high spatial resolution and stereoscopic acquisitions, providing a valuable resource for recovering 3D land-cover information. Accurate geo-referencing of diachronic historical images by means of self-calibration remains a bottleneck because of the difficulty of finding a sufficient number of feature correspondences under evolving landscapes. In this research, we present a fully automatic approach to detecting feature correspondences between historical images taken at different times (i.e., inter-epoch), without requiring auxiliary data. Based on relative orientations computed within the same epoch (i.e., intra-epoch), we obtain DSMs (Digital Surface Models) and incorporate them in a rough-to-precise matching. The method consists of: (1) an inter-epoch DSM matching to roughly co-register the orientations and DSMs (i.e., the 3D Helmert transformation), followed by (2) a precise inter-epoch feature matching using the original RGB images. The innate ambiguity of the latter is largely alleviated by narrowing down the search space using the co-registered data. With the inter-epoch features, we refine the image orientations and quantitatively evaluate the results (1) with DoD (Difference of DSMs), (2) with ground check points, and (3) by quantifying ground displacement due to an earthquake. We demonstrate that our method: (1) can automatically georeference diachronic historical images; (2) can effectively mitigate systematic errors induced by poorly estimated camera parameters; (3) is robust to drastic scene changes. Compared to the state-of-the-art, our method improves the image georeferencing accuracy by a factor of 2. The proposed methods are implemented in MicMac, a free, open-source photogrammetric software.

* 34 pages
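
The 3D Helmert transformation mentioned in the abstract above is a seven-parameter similarity transform (one scale, three rotations, three translations). The sketch below applies such a transform to a single point. The rotation convention (R = Rz·Ry·Rx), the function names, and the parameter values are assumptions chosen for illustration; they do not reflect how MicMac parameterizes or estimates the transform.

```python
import math


def rotation_matrix(omega, phi, kappa):
    """Rotation R = Rz(kappa) @ Ry(phi) @ Rx(omega), angles in radians.

    This angle convention is an assumption made for the example.
    """
    sx, cx = math.sin(omega), math.cos(omega)
    sy, cy = math.sin(phi), math.cos(phi)
    sz, cz = math.sin(kappa), math.cos(kappa)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]


def helmert_3d(point, scale, angles, translation):
    """Apply X' = t + s * R * X to one 3D point."""
    r = rotation_matrix(*angles)
    rotated = [sum(r[i][j] * point[j] for j in range(3)) for i in range(3)]
    return tuple(translation[i] + scale * rotated[i] for i in range(3))


# Hypothetical parameters: scale 2, 90 degree rotation about Z, shift of 1 along X.
p = helmert_3d(
    (1.0, 0.0, 0.0),
    scale=2.0,
    angles=(0.0, 0.0, math.pi / 2),
    translation=(1.0, 0.0, 0.0),
)
print(p)
```

In a co-registration setting, the seven parameters would be estimated from corresponding 3D points in the two epochs; the sketch only covers applying an already known transform.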
