María Luis Valero

Inference-Adaptive Neural Steering for Real-Time Area-Based Sound Source Separation

Aug 23, 2024

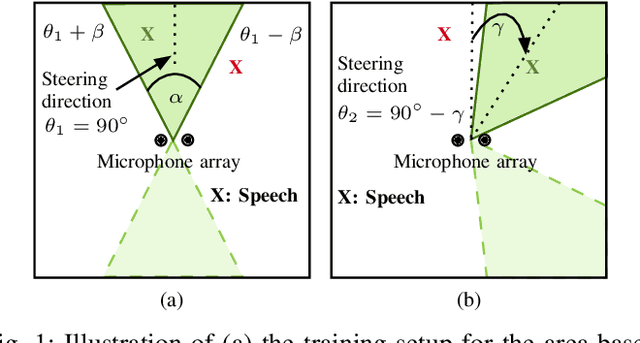

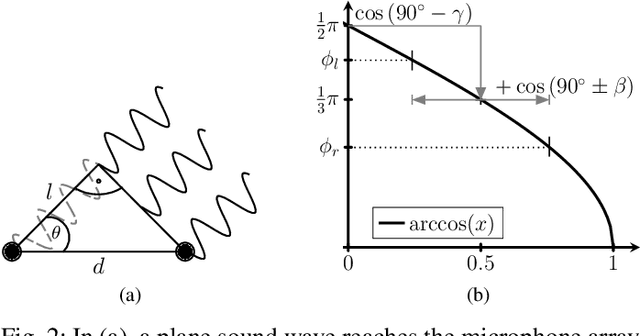

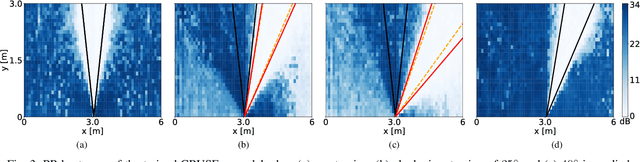

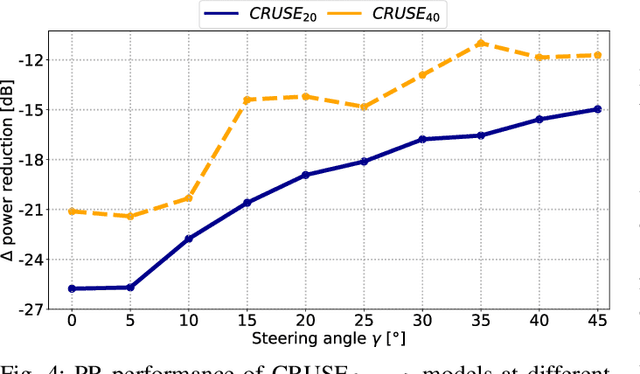

Abstract:We propose a novel Neural Steering technique that adapts the target area of a spatial-aware multi-microphone sound source separation algorithm during inference without the necessity of retraining the deep neural network (DNN). To achieve this, we first train a DNN aiming to retain speech within a target region, defined by an angular span, while suppressing sound sources stemming from other directions. Afterward, a phase shift is applied to the microphone signals, allowing us to shift the center of the target area during inference at negligible additional cost in computational complexity. Further, we show that the proposed approach performs well in a wide variety of acoustic scenarios, including several speakers inside and outside the target area and additional noise. More precisely, the proposed approach performs on par with DNNs trained explicitly for the steered target area in terms of DNSMOS and SI-SDR.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge