Manuel de Sousa Ribeiro

On Modifying a Neural Network's Perception

Mar 05, 2023

Abstract: Artificial neural networks have proven to be extremely useful models that have allowed for multiple recent breakthroughs in the field of Artificial Intelligence and many others. However, they are typically regarded as black boxes, given how difficult it is for humans to interpret how these models reach their results. In this work, we propose a method which allows one to modify what an artificial neural network is perceiving regarding specific human-defined concepts, enabling the generation of hypothetical scenarios that could help understand and even debug the neural network model. Through empirical evaluation, in a synthetic dataset and in the ImageNet dataset, we test the proposed method on different models, assessing whether the performed manipulations are well interpreted by the models, and analyzing how they react to them.

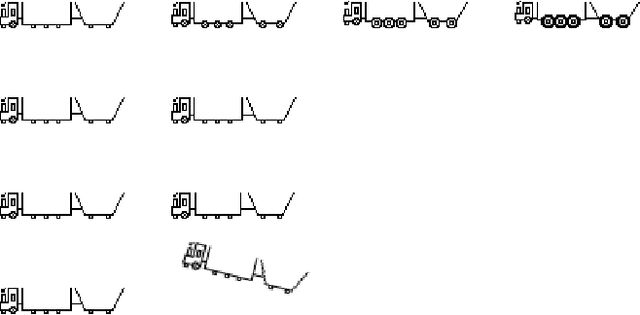

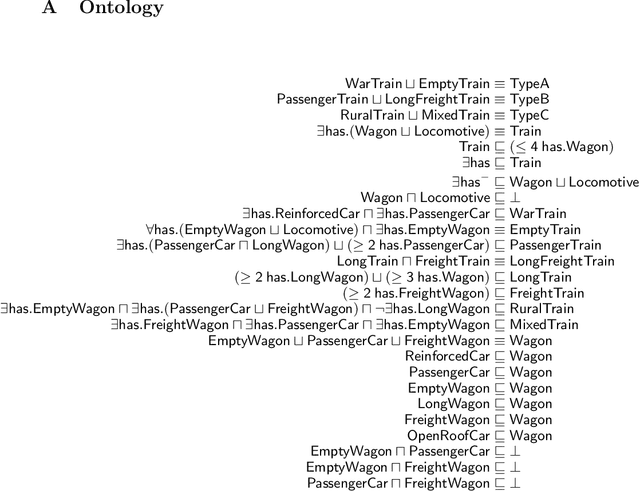

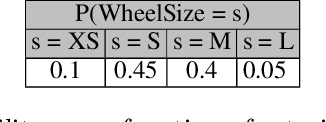

Explainable Abstract Trains Dataset

Dec 15, 2020

Abstract: The Explainable Abstract Trains Dataset is an image dataset containing simplified representations of trains. It aims to provide a platform for the application and research of algorithms for justification and explanation extraction. The dataset is accompanied by an ontology that conceptualizes and classifies the depicted trains based on their visual characteristics, allowing for a precise understanding of how each train was labeled. Each image in the dataset is annotated with multiple attributes describing the trains' features and with bounding boxes for the train elements.
