Malik Ishfaq

Harnessing The Power of Attention For Patch-Based Biomedical Image Classification

Apr 01, 2024

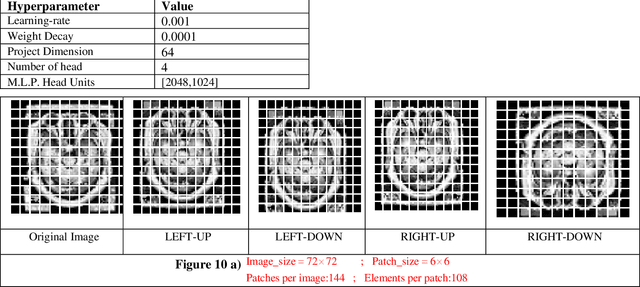

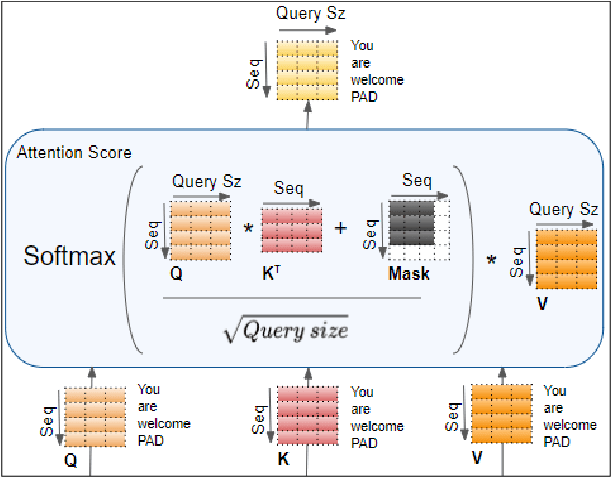

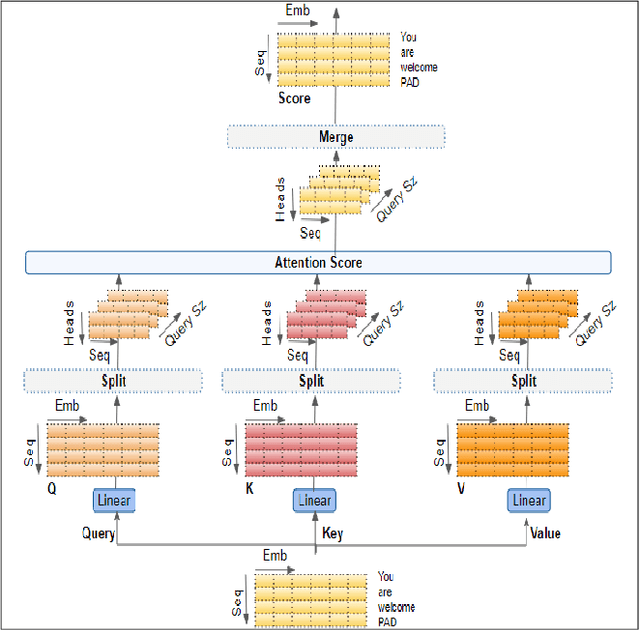

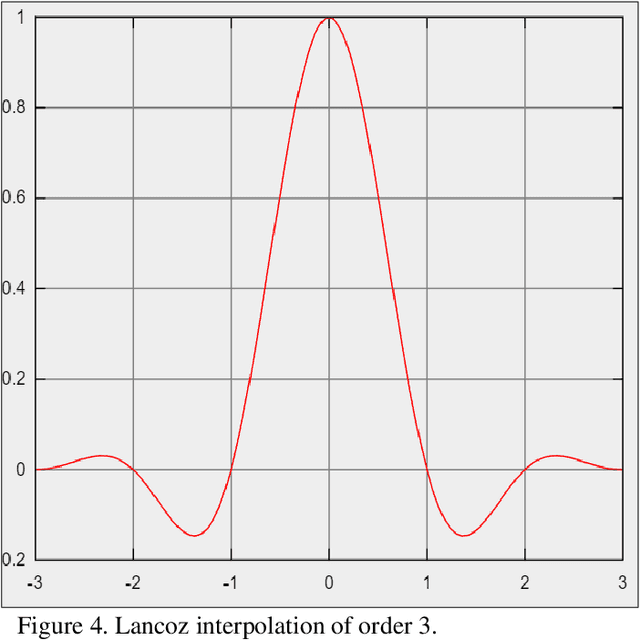

Abstract: Biomedical image analysis can be facilitated by an innovative architecture rooted in self-attention mechanisms. The traditional convolutional neural network (CNN), characterized by fixed-size windows, struggles to capture intricate spatial and temporal relations at the pixel level. Because CNN filter weights are fixed after training, the network also cannot adapt to variation in its inputs. Recognizing these limitations, we propose a new paradigm of attention-based models in place of convolutions. As an alternative to traditional CNNs, these models demonstrate robust modelling capabilities and the ability to capture comprehensive long-range contextual information efficiently. To address critical challenges faced by attention-based vision models, such as inductive bias, weight sharing, receptive field limitations, and high-resolution data handling, our work combines non-overlapping (vanilla) patching with novel overlapping Shifted Patching Techniques (S.P.T.s) to induce local context that enhances model generalization. Moreover, we examine the novel Lanczos5 interpolation technique, which rescales images of varying size to higher resolutions. Experimental evidence validates our model's generalization effectiveness, comparing favourably with existing approaches. Attention-based methods are particularly effective with ample data, especially when advanced data augmentation methodologies are integrated to strengthen their robustness.
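The two patching schemes named in the abstract can be illustrated with a minimal NumPy sketch. The abstract does not specify how the shifted patches are built, so the code below assumes one common form of shifted patch tokenization: the image is concatenated with four copies of itself, each translated diagonally by half a patch, before being cut into tiles. The function names, the half-patch shift, and the zero padding are all illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def vanilla_patches(image, patch):
    """Split an (H, W, C) image into non-overlapping patch x patch tiles.

    Returns an array of shape (num_patches, patch * patch * C).
    """
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    tiles = tiles.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, C)
    return tiles.reshape(-1, patch * patch * c)


def shift(image, dy, dx):
    """Translate an (H, W, C) image by (dy, dx) pixels, filling with zeros."""
    h, w, _ = image.shape
    out = np.zeros_like(image)
    src = image[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    y0, x0 = max(0, dy), max(0, dx)
    out[y0:y0 + src.shape[0], x0:x0 + src.shape[1]] = src
    return out


def shifted_patches(image, patch):
    """Assumed shifted-patching variant (hypothetical, not from the paper).

    Stacks the image with four diagonal half-patch shifts along the channel
    axis, then tiles the result so each token also sees neighbouring pixels.
    """
    s = patch // 2
    offsets = [(-s, -s), (-s, s), (s, -s), (s, s)]
    stacked = np.concatenate(
        [image] + [shift(image, dy, dx) for dy, dx in offsets], axis=-1
    )
    return vanilla_patches(stacked, patch)


if __name__ == "__main__":
    img = np.arange(8 * 8 * 3, dtype=np.float32).reshape(8, 8, 3)
    print(vanilla_patches(img, 4).shape)
    print(shifted_patches(img, 4).shape)
```

Under these assumptions, each shifted token carries five times as many values as a vanilla token, because every tile also contains pixels from its diagonal neighbours. This overlap is what lets the tokens encode local context.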
