Maitrayee Keskar

Robust Detection, Association, and Localization of Vehicle Lights: A Context-Based Cascaded CNN Approach and Evaluations

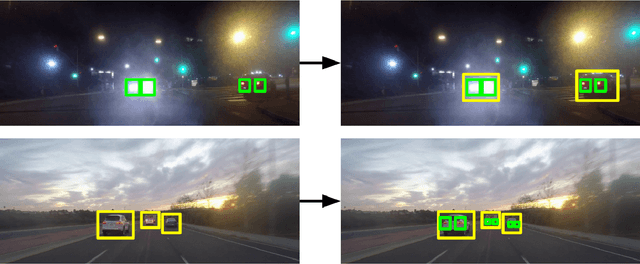

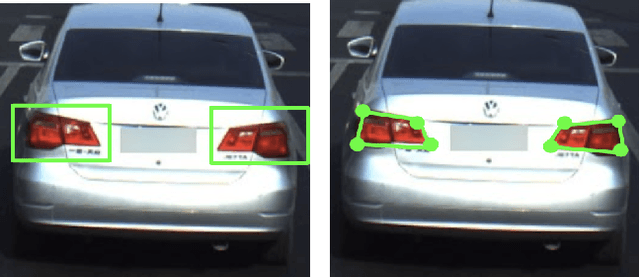

Jul 27, 2023

Abstract: Vehicle light detection is required for important downstream safe autonomous driving tasks, such as predicting a vehicle's light state to determine if the vehicle is making a lane change or turning. Currently, many vehicle light detectors use single-stage detectors which predict bounding boxes to identify a vehicle light, in a manner decoupled from vehicle instances. In this paper, we present a method for detecting a vehicle light given an upstream vehicle detection and approximation of a visible light's center. Our method predicts four approximate corners associated with each vehicle light. We experiment with CNN architectures, data augmentation, and contextual preprocessing methods designed to reduce surrounding-vehicle confusion. We achieve an average distance error from the ground truth corner of 5.09 pixels, about 17.24% of the size of the vehicle light on average. We train and evaluate our model on the LISA Lights dataset, allowing us to thoroughly evaluate our vehicle light corner detection model on a large variety of vehicle light shapes and lighting conditions. We propose that this model can be integrated into a pipeline with vehicle detection and vehicle light center detection to make a fully-formed vehicle light detection network, valuable to identifying trajectory-informative signals in driving scenes.
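The corner-error figures quoted in the abstract above can be illustrated with a minimal sketch. It assumes each light is described by four (x, y) corners in pixels and that "size of the vehicle light" means the diagonal of the axis-aligned box around the ground-truth corners. That size definition and the function names `mean_corner_error` and `relative_corner_error` are assumptions made for illustration; the abstract does not state how the authors normalize the error.

```python
import numpy as np


def mean_corner_error(pred, gt):
    """Average Euclidean distance (pixels) between predicted and true corners.

    pred, gt: array-likes of shape (N, 4, 2), i.e. N lights, 4 corners, (x, y).
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    # Per-corner distances, shape (N, 4), then averaged over everything.
    dists = np.linalg.norm(pred - gt, axis=-1)
    return float(dists.mean())


def relative_corner_error(pred, gt):
    """Mean corner error per light, divided by that light's size.

    ASSUMPTION: light size is the diagonal of the axis-aligned box that
    encloses the ground-truth corners. The paper may define it differently.
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    per_light = np.linalg.norm(pred - gt, axis=-1).mean(axis=1)  # shape (N,)
    extent = gt.max(axis=1) - gt.min(axis=1)                     # shape (N, 2)
    size = np.linalg.norm(extent, axis=-1)                       # shape (N,)
    return float((per_light / size).mean())
```

Under this assumed definition, a light whose corners are all predicted 5 pixels away from a 30 by 40 pixel ground-truth box would have a relative error of 0.1, since the box diagonal is 50 pixels.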

Patterns of Vehicle Lights: Addressing Complexities in Curation and Annotation of Camera-Based Vehicle Light Datasets and Metrics

Jul 26, 2023

Abstract: This paper explores the representation of vehicle lights in computer vision and its implications for various tasks in the field of autonomous driving. Different specifications for representing vehicle lights, including bounding boxes, center points, corner points, and segmentation masks, are discussed in terms of their strengths and weaknesses. Three important tasks in autonomous driving that can benefit from vehicle light detection are identified: nighttime vehicle detection, 3D vehicle orientation estimation, and dynamic trajectory cues. Each task may require a different representation of the light. The challenges of collecting and annotating large datasets for training data-driven models are also addressed, leading to the introduction of the LISA Vehicle Lights Dataset and associated Light Visibility Model, which provides light annotations specifically designed for downstream applications in vehicle detection, intent and trajectory prediction, and safe path planning. A comparison of existing vehicle light datasets is provided, highlighting the unique features and limitations of each dataset. Overall, this paper provides insights into the representation of vehicle lights and the importance of accurate annotations for training effective detection models in autonomous driving applications. Our dataset and model are made available at https://cvrr.ucsd.edu/vehicle-lights-dataset
