Maik Wischow

Real-time Noise Source Estimation of a Camera System from an Image and Metadata

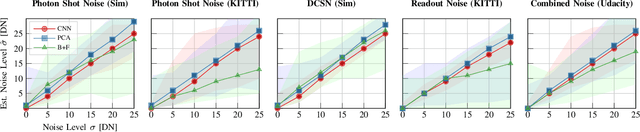

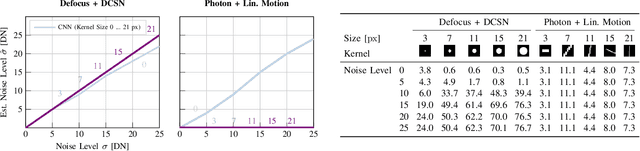

Apr 04, 2024

Abstract: Autonomous machines must self-maintain proper functionality to ensure the safety of humans and themselves. This pertains particularly to their cameras, the predominant sensors for perceiving the environment and supporting actions. A fundamental camera problem addressed in this study is noise. Solutions often focus on denoising images a posteriori, that is, fighting symptoms rather than root causes. However, tackling root causes requires identifying the noise sources while respecting the limitations of mobile platforms. This work investigates a real-time, memory-efficient and reliable noise source estimator that combines data- and physically-based models. To this end, a DNN that examines an image together with camera metadata for major camera noise sources is built and trained. In addition, it quantifies unexpected factors that impact image noise or metadata. This study investigates seven different estimators on six datasets that include synthetic noise, real-world noise from two camera systems, and real field campaigns. For these, only the model with the most metadata is capable of accurately and robustly quantifying all individual noise contributions. This method outperforms total image noise estimators and can be deployed plug-and-play. It also serves as a basis to include more advanced noise sources, or as part of an automatic countermeasure feedback-loop to approach fully reliable machines.

* 16 pages, 16 figures, 12 tables, Project page: https://github.com/MaikWischow/Noise-Source-Estimation

Camera Condition Monitoring and Readjustment by means of Noise and Blur

Dec 10, 2021

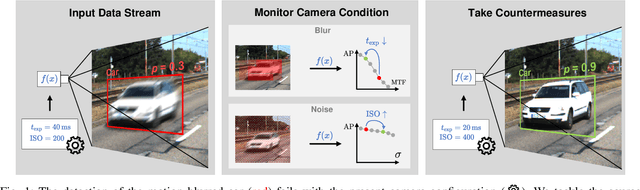

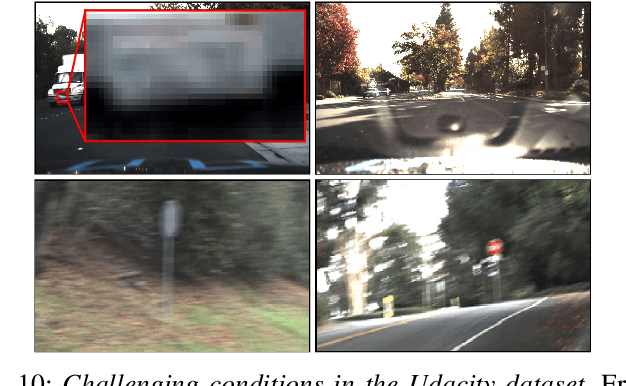

Abstract: Autonomous vehicles and robots require ever greater robustness and reliability to meet the demands of modern tasks. These requirements apply especially to cameras because they are the predominant sensors to acquire information about the environment and support actions. A camera must maintain proper functionality and take automatic countermeasures if necessary. However, there is little work that examines the practical use of a general condition monitoring approach for cameras and designs countermeasures in the context of an envisaged high-level application. We propose a generic and interpretable self-health-maintenance framework for cameras based on data- and physically-grounded models. To this end, we determine two reliable, real-time capable estimators for typical image effects of a camera in poor condition (defocus blur, motion blur, different noise phenomena and the most common combinations) by comparing traditional and retrained machine learning-based approaches in extensive experiments. Furthermore, we demonstrate how one can adjust the camera parameters (e.g., exposure time and ISO gain) to achieve optimal whole-system capability based on experimental (non-linear and non-monotonic) input-output performance curves, using object detection, motion blur and sensor noise as examples. Our framework not only provides a practical ready-to-use solution to evaluate and maintain the health of cameras, but can also serve as a basis for extensions to tackle more sophisticated problems that combine additional data sources (e.g., sensor or environment parameters) empirically in order to attain fully reliable and robust machines.
