Mahdi Salarian

Iris Recognition for Personal Identification using LAMSTAR neural network

Jul 28, 2019

Abstract: Iris recognition is one of the most important biometric recognition methods, because the iris texture provides many features, such as freckles, coronas, stripes, furrows, and crypts, that are distinctive and differ from person to person. These unique features in the anatomical structure of the iris make it possible to differentiate among individuals, and in recent years many researchers have worked to improve the performance of iris recognition systems. In this article, the common steps of an iris recognition system are first explained. Then a special type of neural network, LAMSTAR, is used for the recognition stage. Experimental results show that high accuracy can be obtained, especially when the preceding steps are performed well.

Efficient refinement of GPS-based localization in urban areas using visual information and sensor parameter

Oct 28, 2015

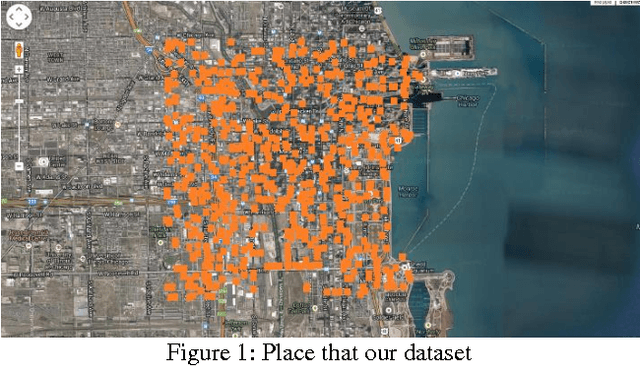

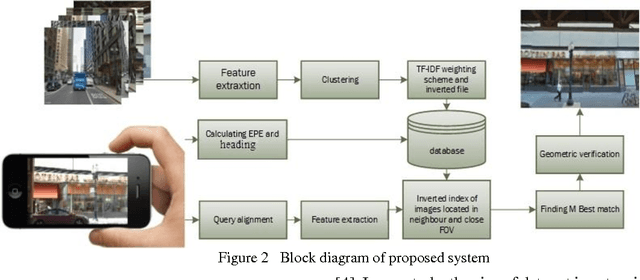

Abstract: An efficient method is proposed for refining GPS-acquired location coordinates in urban areas using camera images, Google Street View (GSV) and sensor parameters. The main goal is to compensate for GPS imprecision in dense areas of cities caused by proximity to walls and buildings. Available methods for better localization often use visual information: query images are acquired with camera-equipped mobile devices, and image retrieval techniques are applied to find the closest match in a GPS-referenced image data set. These methods require search areas of about 1-2 km² for a reliable search, and their localization error is typically 25-100 meters. Here we describe a method based on image retrieval in which a reliable search can be confined to an area of 0.01 km², and the localization error in our experiments is less than 10 meters. To test our procedure, we created a database by acquiring all Google Street View images close to what a pedestrian would see in a large region of downtown Chicago, and saved all coordinate and orientation data for use in confining our search region. Prior knowledge of the approximate position of the query image is leveraged to address the complexity and accuracy issues of searching a large-scale geo-tagged data set. One key aspect that differentiates our work is that it uses the GPS SOS sensor information and the camera orientation to improve localization. Finally, we demonstrate that retrieval-based techniques are less accurate than pure GPS measurement in sparse open areas. The effectiveness of our approach is discussed in detail, and experimental results show improved performance compared with conventional approaches.
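Confining the search region with prior position and orientation knowledge can be sketched as a cheap filter applied to a geo-tagged image database before any image matching is done. This is a minimal sketch under stated assumptions, not the paper's implementation: the `GeoImage` record, the `candidate_images` helper, the 30° heading tolerance, and the 56 m radius (roughly the radius of a disc with an area of 0.01 km²) are hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class GeoImage:
    """Hypothetical record for one geo-tagged street-level image."""
    image_id: str
    lat: float          # degrees
    lon: float          # degrees
    heading_deg: float  # camera heading, degrees clockwise from north


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def heading_diff_deg(a: float, b: float) -> float:
    """Smallest absolute angle between two headings, in [0, 180]."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def candidate_images(db, gps_lat, gps_lon, compass_deg,
                     radius_m=56.0, max_heading_diff_deg=30.0):
    """Keep only images near the GPS fix that face roughly the same direction.

    Assumed parameters: radius_m ~ sqrt(10_000 m^2 / pi), i.e. a 0.01 km^2 disc;
    max_heading_diff_deg is an illustrative tolerance, not a value from the paper.
    """
    return [
        img for img in db
        if haversine_m(gps_lat, gps_lon, img.lat, img.lon) <= radius_m
        and heading_diff_deg(compass_deg, img.heading_deg) <= max_heading_diff_deg
    ]
```

Only the images that pass both tests would then be handed to the more expensive image-retrieval step; shrinking the radius or the heading tolerance trades recall for speed.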

Accurate automatic segmentation of retina layers with emphasis on first layer

Feb 15, 2015

Abstract: Quantification of intra-retinal boundaries in optical coherence tomography (OCT) is a crucial task for studying and diagnosing neurological and ocular diseases. Since manual segmentation of the layers is time-consuming and depends on the user, many attempts have been made to perform it automatically, without user intervention. Although the same procedure is usually applied to extract all layers, finding the first layer is usually more difficult because it vanishes in some regions, especially close to the fovea. To obtain general-purpose software, besides common methods such as applying a shortest-path algorithm to the global gradient of the image, some extra steps are used here to confine the search area of Dijkstra's algorithm, especially for the second layer. Results demonstrate high accuracy in segmenting all present layers, especially the first one, which is important for diagnosis.
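The shortest-path idea can be illustrated on a single B-scan: treat each pixel as a graph node, make edges cheap where the normalized vertical gradient is strong, and let Dijkstra's algorithm find the cheapest left-to-right path, which should then follow a layer boundary. This is a minimal sketch, not the paper's procedure; the `boundary_path` helper, the assumed edge weight 2 - (g_a + g_b) + w_min, the allowed moves, and the boolean `allowed` mask used to confine the search area are all illustrative assumptions.

```python
import heapq

import numpy as np

W_MIN = 1e-5  # small positive constant so every edge has a strictly positive cost


def boundary_path(image, allowed=None):
    """Return the cheapest left-to-right path [(row, col), ...] through `image`.

    Edges favour pixels with a strong dark-to-bright (top-to-bottom) gradient.
    `allowed` is an optional boolean mask that confines the search area.
    """
    img = np.asarray(image, dtype=float)
    rows, cols = img.shape
    if allowed is None:
        allowed = np.ones((rows, cols), dtype=bool)

    # Vertical gradient, normalized to [0, 1] so that edge costs stay non-negative.
    grad = np.gradient(img, axis=0)
    span = grad.max() - grad.min()
    g = (grad - grad.min()) / span if span > 0 else np.zeros_like(grad)

    dist = np.full((rows, cols), np.inf)
    prev = {}
    heap = []
    # Every allowed pixel in the first column is a zero-cost start node.
    for r in range(rows):
        if allowed[r, 0]:
            dist[r, 0] = 0.0
            heap.append((0.0, r, 0))
    heapq.heapify(heap)

    # Moves: up-right, right, down-right, and up/down within the same column.
    moves = [(-1, 1), (0, 1), (1, 1), (-1, 0), (1, 0)]
    while heap:
        d, r, c = heapq.heappop(heap)
        if d > dist[r, c]:
            continue  # stale heap entry
        if c == cols - 1:
            # Dijkstra pops nodes in cost order, so this is the cheapest end node.
            path = [(r, c)]
            while (r, c) in prev:
                r, c = prev[(r, c)]
                path.append((r, c))
            return path[::-1]
        for dr, dc in moves:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and nc < cols and allowed[nr, nc]:
                nd = d + 2.0 - g[r, c] - g[nr, nc] + W_MIN
                if nd < dist[nr, nc]:
                    dist[nr, nc] = nd
                    prev[(nr, nc)] = (r, c)
                    heapq.heappush(heap, (nd, nr, nc))
    return []  # no allowed path reaches the last column
```

On an image with two boundaries, the unconstrained search locks onto the stronger edge; passing a mask that covers only the region around the weaker edge forces the path onto it, which is the role search-area confinement plays when one layer's gradient is faint.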

Accurate Localization in Dense Urban Area Using Google Street View Image

Dec 29, 2014

Abstract: Accurate information about the location and orientation of a camera in mobile devices is central to the use of location-based services (LBS). Most such mobile devices rely on GPS data, but these data are subject to inaccuracy due to imperfections in the quality of the signal provided by satellites. This shortcoming has spurred research into improving localization accuracy. Since mobile devices have cameras, a major thrust of this research has been to acquire the local scene and apply image retrieval techniques, querying a GPS-tagged image database to find the best match for the acquired scene. These techniques are, however, computationally demanding and unsuitable for real-time applications such as assistive navigation technology for the blind and visually impaired, which motivated our work. To overcome the high complexity of those techniques, we investigated the use of inertial sensors as an aid in the image-retrieval-based approach. Armed with information from sources other than images, such as data from the GPS module along with orientation sensors such as the accelerometer and gyroscope, we sought to limit the size of the image set to search for the best match. Specifically, data from the orientation sensors along with the dilution of precision (DOP) from GPS are used to find the angle of view and estimate the position. We present an analysis of the reduction in the size of the image set to be searched, as well as simulations demonstrating the effectiveness of a fast implementation with 98% Estimated Position Error.
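How DOP and orientation data can bound the search set is sketched below. It relies on two standard approximations rather than the paper's exact model: the horizontal position error is taken as roughly HDOP times the user-equivalent range error (UERE), and the camera's horizontal angle of view is computed from focal length and sensor width using the pinhole model. The `search_window` helper, the default UERE of 5 m, and the scale factor k = 2 are hypothetical values chosen for illustration.

```python
import math
from typing import NamedTuple


class SearchWindow(NamedTuple):
    radius_m: float         # search radius around the GPS fix
    heading_min_deg: float  # start of the heading interval, clockwise from north
    heading_max_deg: float  # end of the interval; wraps through north if < heading_min_deg


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float) -> float:
    """Horizontal angle of view of a pinhole camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def search_window(hdop: float, heading_deg: float, focal_length_mm: float,
                  sensor_width_mm: float, uere_m: float = 5.0, k: float = 2.0) -> SearchWindow:
    """Hypothetical search window from GPS HDOP and compass heading.

    radius ~ k * HDOP * UERE (k and UERE are assumed values, not from the paper)
    heading interval = heading +/- half the horizontal angle of view
    """
    radius = k * hdop * uere_m
    half_fov = horizontal_fov_deg(focal_length_mm, sensor_width_mm) / 2.0
    return SearchWindow(radius,
                        (heading_deg - half_fov) % 360.0,
                        (heading_deg + half_fov) % 360.0)
```

For example, with HDOP 1.5, a heading of 350°, an 18 mm lens and a 36 mm-wide sensor, the window is a 15 m radius and headings from 305° through north to 35°; only database images inside both bounds would be passed on to retrieval.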
