Efficient refinement of GPS-based localization in urban areas using visual information and sensor parameters


Oct 28, 2015

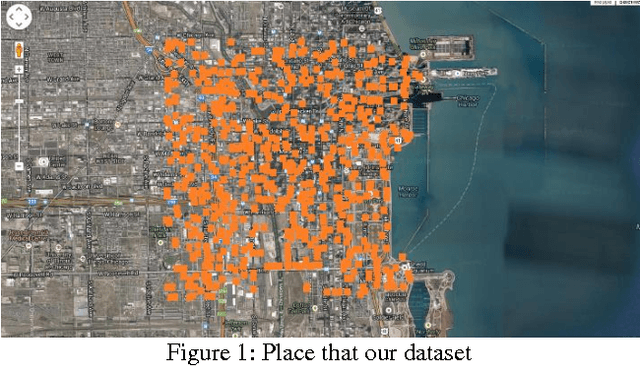

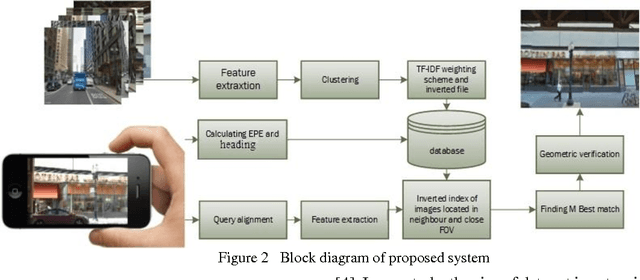

An efficient method is proposed for refining GPS-acquired location coordinates in urban areas using camera images, Google Street View (GSV) and sensor parameters. The main goal is to compensate for GPS location imprecision in dense urban areas, which is caused by proximity to walls and buildings. Available methods for better localization often use visual information: query images acquired with camera-equipped mobile devices are matched, through image retrieval techniques, against a GPS-referenced image data set to find the closest match. The search areas required for reliable search are about 1-2 sq. km and the accuracy is typically 25-100 meters. Here we describe a method based on image retrieval where a reliable search can be confined to areas of 0.01 sq. km and the localization error in our experiments is less than 10 meters. To test our procedure, we created a database by acquiring all Google Street View images close to what is seen by a pedestrian in a large region of downtown Chicago, and saved all coordinates and orientation data to be used for confining our search region. Prior knowledge of the approximate position of the query image is leveraged to address the complexity and accuracy issues of searching a large-scale geo-tagged data set. One key aspect that differentiates our work is that it utilizes the sensor information of GPS SOS and the camera orientation in improving localization. Finally, we demonstrate that retrieval-based techniques are less accurate than pure GPS measurement in sparse open areas. The effectiveness of our approach is discussed in detail, and experimental results show improved performance when compared with regular approaches.
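
The idea of confining the retrieval search with a GPS prior and the camera orientation can be illustrated with a minimal sketch. This is not the authors' implementation: the `GeoImage` record, the function names, the 56 m radius (a circle of roughly 0.01 sq. km) and the 45-degree heading tolerance are all assumptions chosen for illustration. The sketch only selects candidate database images; the image matching step itself is not shown.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class GeoImage:
    """Hypothetical record for one geo-tagged database image."""
    image_id: str
    lat: float          # latitude in degrees
    lon: float          # longitude in degrees
    heading_deg: float  # camera heading in degrees, clockwise from north


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def heading_diff_deg(a, b):
    """Smallest absolute angle in degrees between two headings."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def confine_search(db, gps_lat, gps_lon, cam_heading_deg,
                   radius_m=56.0, max_heading_diff_deg=45.0):
    """Keep only images near the GPS fix that face a similar direction.

    radius_m=56 gives a circular area of about 0.01 sq. km; both
    thresholds are assumed values, not taken from the paper.
    """
    return [
        img for img in db
        if haversine_m(gps_lat, gps_lon, img.lat, img.lon) <= radius_m
        and heading_diff_deg(cam_heading_deg, img.heading_deg) <= max_heading_diff_deg
    ]
```

Only the images returned by `confine_search` would then be passed to the image retrieval stage, so the cost of matching scales with the small candidate set rather than with the whole data set.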
