Mahdi Chehimi

Quantum-enhanced unsupervised image segmentation for medical images analysis

Nov 22, 2024

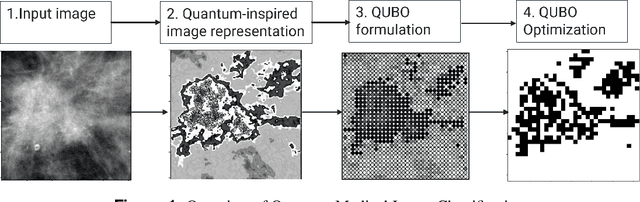

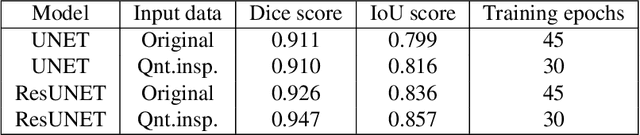

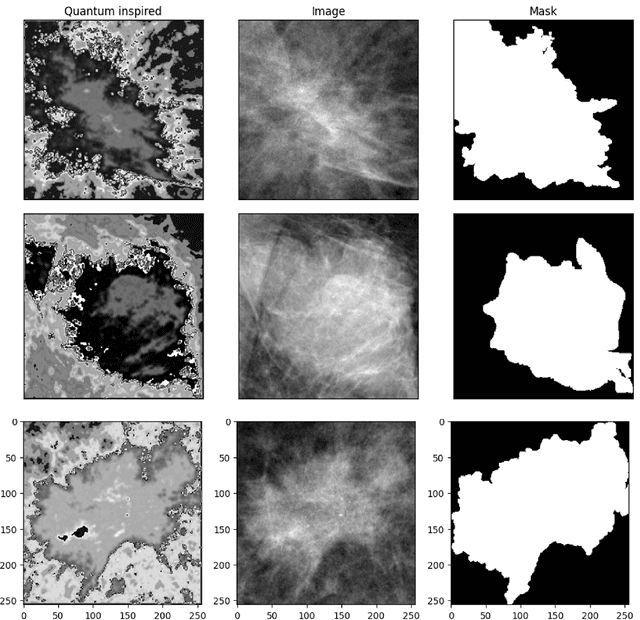

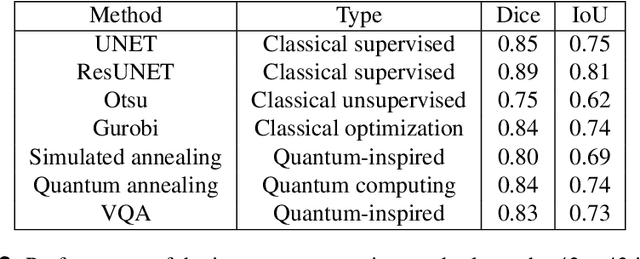

Abstract: Breast cancer remains the leading cause of cancer-related mortality among women worldwide, necessitating the meticulous examination of mammograms by radiologists to characterize abnormal lesions. This manual process demands high accuracy and is often time-consuming, costly, and error-prone. Automated image segmentation using artificial intelligence offers a promising alternative to streamline this workflow. However, most existing methods are supervised, requiring large, expertly annotated datasets that are not always available, and they suffer from significant generalization issues. Unsupervised learning models can instead be leveraged for image segmentation, but they come at the cost of reduced accuracy or require extensive computational resources. In this paper, we propose the first end-to-end quantum-enhanced framework for unsupervised segmentation of mammography images that balances segmentation accuracy against computational requirements. We first introduce a quantum-inspired image representation that serves as an initial approximation of the segmentation mask. The segmentation task is then formulated as a quadratic unconstrained binary optimization (QUBO) problem, aiming to maximize the contrast between the background and the tumor region while ensuring a cohesive segmentation mask with minimal connected components. We conduct an extensive evaluation of quantum and quantum-inspired methods for image segmentation, demonstrating that quantum annealing and variational quantum circuits achieve performance comparable to classical optimization techniques. Notably, quantum annealing is shown to be an order of magnitude faster than the classical optimization method in our experiments. Our findings demonstrate that this framework achieves performance comparable to state-of-the-art supervised methods, including UNet-based architectures, offering a viable unsupervised alternative for breast cancer image segmentation.
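To make the QUBO idea in this abstract concrete, here is a minimal sketch on a toy one-dimensional "image". It is not the paper's formulation: the contrast term (pixel intensity minus the mean), the neighbor smoothness penalty, the `smooth` weight, and the helper names `build_qubo`, `energy`, and `brute_force_minimum` are all assumptions made for illustration. A brute-force search stands in for the quantum annealer or variational circuit.

```python
from itertools import product


def build_qubo(pixels, smooth=0.2):
    """Build a toy QUBO as a dict {(i, j): weight} with i <= j.

    x_i = 1 marks pixel i as foreground. Assumed objective (illustrative only):
      - contrast: reward selecting pixels brighter than the mean intensity
      - cohesion: penalize label changes between neighbors, using
        (x_i - x_j)^2 = x_i + x_j - 2 * x_i * x_j for binary variables
    """
    n = len(pixels)
    mean = sum(pixels) / n
    Q = {(i, i): -(p - mean) for i, p in enumerate(pixels)}
    for i in range(n - 1):
        Q[(i, i)] += smooth
        Q[(i + 1, i + 1)] += smooth
        Q[(i, i + 1)] = -2.0 * smooth
    return Q


def energy(Q, x):
    """Evaluate the QUBO objective x^T Q x for a binary assignment x."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


def brute_force_minimum(Q, n):
    """Exhaustively search all 2^n assignments (only feasible for tiny n)."""
    return min(product((0, 1), repeat=n), key=lambda x: energy(Q, x))


pixels = [0.1, 0.2, 0.9, 0.8, 0.85, 0.15]  # hypothetical intensities
Q = build_qubo(pixels)
mask = brute_force_minimum(Q, len(pixels))
print(mask)
```

In this toy setting the smoothness weight trades contrast against cohesion: a larger `smooth` discourages isolated foreground pixels, which mirrors the abstract's goal of a mask with few connected components.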

Federated Quantum Long Short-term Memory (FedQLSTM)

Dec 21, 2023

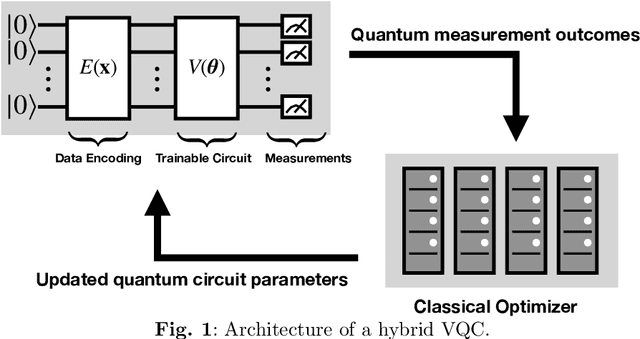

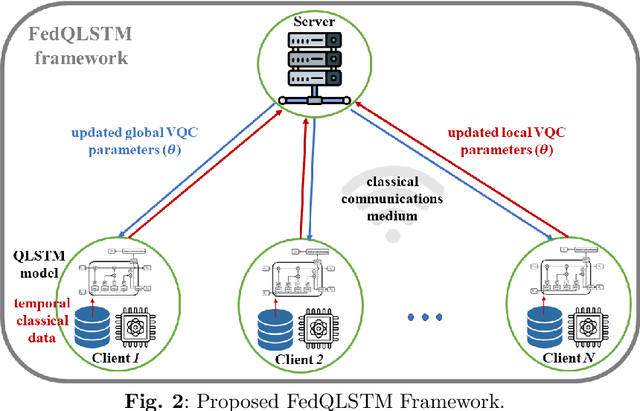

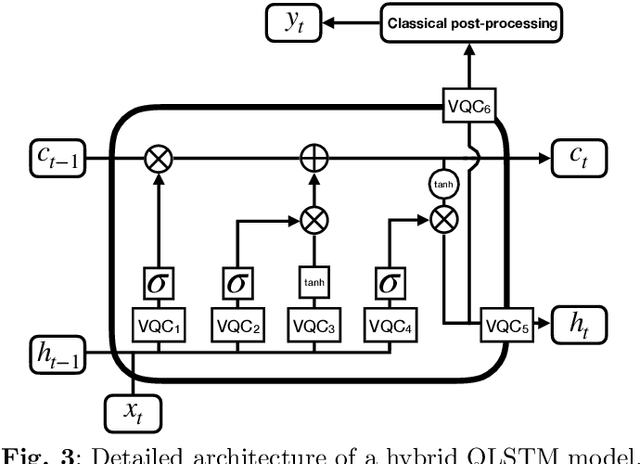

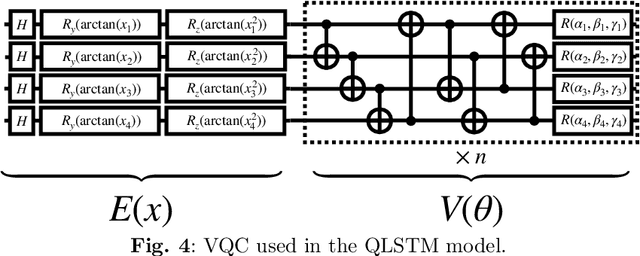

Abstract: Quantum federated learning (QFL) can facilitate collaborative learning across multiple clients using quantum machine learning (QML) models, while preserving data privacy. Although recent advances in QFL span different tasks, such as classification, and leverage several data types, no prior work has focused on developing a QFL framework that utilizes temporal data to approximate functions useful for analyzing the performance of distributed quantum sensing networks. In this paper, a novel QFL framework that is the first to integrate quantum long short-term memory (QLSTM) models with temporal data is proposed. The proposed federated QLSTM (FedQLSTM) framework is applied to the task of function approximation. In this regard, three key use cases are presented: Bessel function approximation, sinusoidal delayed quantum feedback control function approximation, and Struve function approximation. Simulation results confirm that, for all considered use cases, the proposed FedQLSTM framework achieves a faster convergence rate under one local training epoch, minimizing the overall computations, and saving 25-33% of the number of communication rounds needed until convergence compared to an FL framework with classical LSTM models.

Quantum Federated Learning with Quantum Data

May 30, 2021

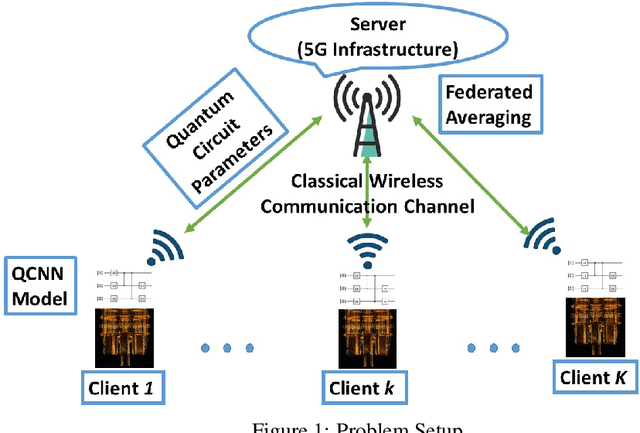

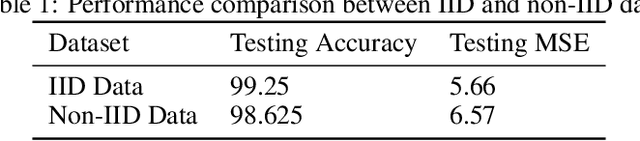

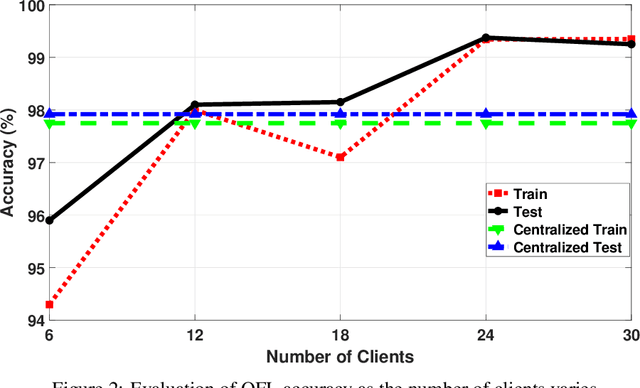

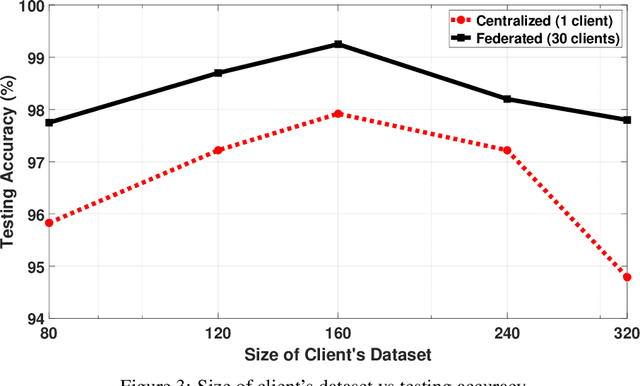

Abstract: Quantum machine learning (QML) has emerged as a promising field that leans on the developments in quantum computing to explore large, complex machine learning problems. Recently, some purely quantum machine learning models were proposed, such as quantum convolutional neural networks (QCNN), to perform classification on quantum data. However, all of the existing QML models rely on centralized solutions that cannot scale well for large-scale and distributed quantum networks. Hence, it is appropriate to consider more practical quantum federated learning (QFL) solutions tailored towards emerging quantum network architectures. Indeed, developing QFL frameworks for quantum networks is critical given the fragile nature of computing qubits and the difficulty of transferring them. Beyond its practical importance, QFL allows for distributed quantum learning by leveraging existing wireless communication infrastructure. This paper proposes the first fully quantum federated learning framework that can operate over quantum data and, thus, share the learning of quantum circuit parameters in a decentralized manner. First, given the lack of existing quantum federated datasets in the literature, the proposed framework begins by generating the first quantum federated dataset, with a hierarchical data format, for distributed quantum networks. Then, clients sharing QCNN models are fed with the quantum data to perform a classification task. Subsequently, the server aggregates the learnable quantum circuit parameters from clients and performs federated averaging. Extensive experiments are conducted to evaluate and validate the effectiveness of the proposed QFL solution. This work is the first to combine Google's TensorFlow Federated and TensorFlow Quantum in a practical implementation.
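The server-side aggregation step described in this abstract can be sketched in plain Python. This is an illustration only: it does not use TensorFlow Federated or TensorFlow Quantum, the function name `federated_average` is hypothetical, and weighting each client by its number of local samples is an assumption (the abstract says only that the server performs federated averaging over the learnable circuit parameters).

```python
def federated_average(client_params, client_sizes):
    """Return the sample-weighted mean of per-client parameter vectors.

    client_params: list of equal-length lists, one per client, holding that
                   client's learnable circuit parameters (e.g. rotation angles).
    client_sizes:  number of local training samples per client (assumed weights).
    """
    total = sum(client_sizes)
    n_params = len(client_params[0])
    return [
        sum(size * params[k] for params, size in zip(client_params, client_sizes)) / total
        for k in range(n_params)
    ]


# Three hypothetical clients, each holding two circuit parameters.
client_params = [[0.10, 0.50], [0.30, 0.70], [0.20, 0.90]]
client_sizes = [10, 30, 60]

global_params = federated_average(client_params, client_sizes)
print(global_params)
```

Only the parameter vectors leave each client, never the local data, which is what makes this kind of aggregation attractive when the underlying quantum data is fragile or hard to transfer.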
