Maggie Collier

Tracking Human Pose During Robot-Assisted Dressing using Single-Axis Capacitive Proximity Sensing

Sep 22, 2017

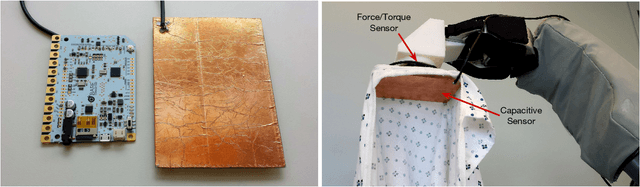

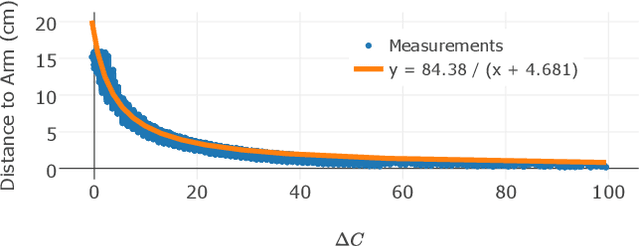

Abstract: Dressing is a fundamental task of everyday living, and robots offer an opportunity to assist people with motor impairments. While several robotic systems have explored robot-assisted dressing, few have considered how a robot can manage errors in human pose estimation, or adapt to human motion in real time during dressing assistance. In addition, estimating pose changes due to human motion can be challenging with vision-based techniques since dressing is often intended to visually occlude the body with clothing. We present a method to track a person's pose in real time using capacitive proximity sensing. This sensing approach gives direct estimates of distance with low latency, has a high signal-to-noise ratio, and has low computational requirements. Using our method, a robot can adjust for errors in the estimated pose of a person and physically follow the contours and movements of the person while providing dressing assistance. As part of an evaluation of our method, the robot successfully pulled the sleeve of a hospital gown and a cardigan onto the right arms of 10 human participants, despite arm motions and large errors in the initially estimated pose of the person's arm. We also show that a capacitive sensor is unaffected by visual occlusion of the body and can sense a person's body through fabric clothing.
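
To make the idea of following the arm's contour from a single-axis distance estimate more concrete, here is a minimal sketch of a proportional height correction. It is an illustration only and not the authors' controller: the function name `height_correction`, the 5 cm target distance, the gain, and the speed limit are all assumed values chosen for the example.

```python
def height_correction(measured_distance_m,
                      target_distance_m=0.05,
                      gain=1.0,
                      max_speed_m_s=0.1):
    """Return a vertical velocity command in m/s.

    Positive values move the end effector away from the arm,
    negative values move it toward the arm.
    All default parameters are illustrative assumptions.
    """
    # Error is positive when the sensor is closer to the arm than desired.
    error = target_distance_m - measured_distance_m
    # Proportional term: command a speed proportional to the distance error.
    velocity = gain * error
    # Clamp the command so a bad reading cannot produce a large motion.
    return max(-max_speed_m_s, min(max_speed_m_s, velocity))


if __name__ == "__main__":
    # Hypothetical distance readings (in meters) along the arm.
    for reading in (0.02, 0.05, 0.10, 1.00):
        print(reading, height_correction(reading))
```

Run once per sensor reading, a rule of this kind raises the end effector when the arm is closer than the target and lowers it when the arm is farther away, which is one simple way a robot could track arm motion without a camera view of the occluded limb.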
