Maeve Henchion

A Sentence-level Hierarchical BERT Model for Document Classification with Limited Labelled Data

Jun 12, 2021

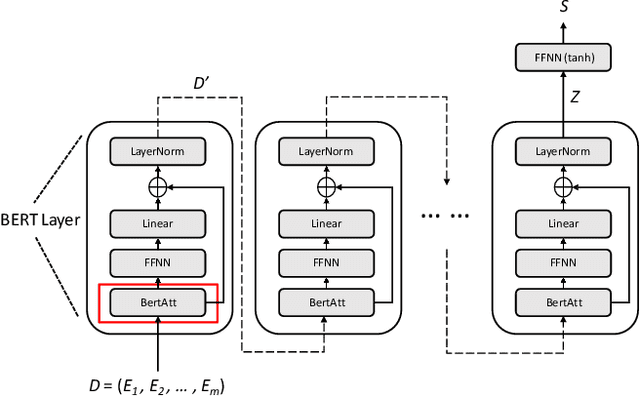

Abstract: Training deep learning models with limited labelled data is an attractive scenario for many NLP tasks, including document classification. With the recent emergence of BERT, deep learning language models can achieve reasonably good performance in document classification with few labelled instances, but there is little evidence on the utility of applying BERT-like models to long document classification. This work introduces a long-text-specific model, the Hierarchical BERT Model (HBM), that learns sentence-level features of the text and works well in scenarios with limited labelled data. Evaluation experiments demonstrate that HBM can achieve higher performance in document classification than the previous state-of-the-art methods with only 50 to 200 labelled instances, especially when documents are long. As an additional benefit, a user study shows that the salient sentences identified by a trained HBM are useful as explanations for labelling documents.

Investigating the Effectiveness of Representations Based on Word-Embeddings in Active Learning for Labelling Text Datasets

Oct 10, 2019

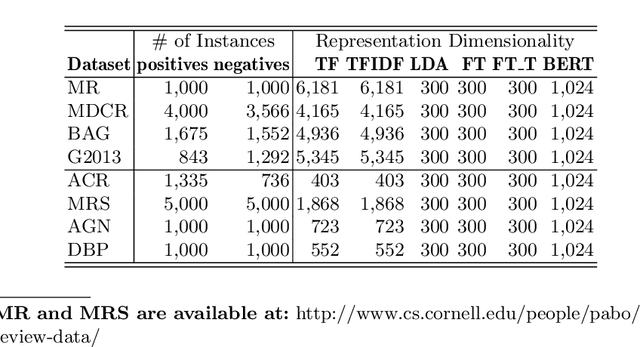

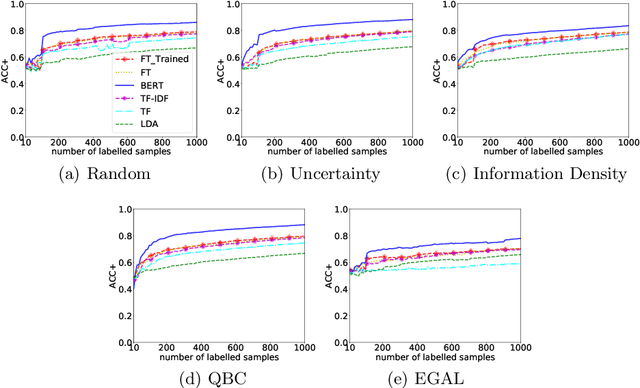

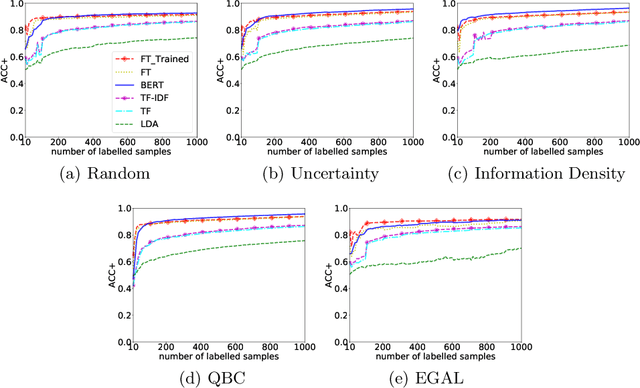

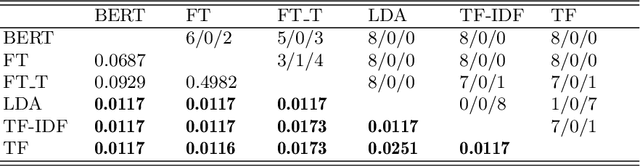

Abstract: Manually labelling large collections of text data is a time-consuming, expensive, and laborious task, but one that is necessary to support machine learning based on text datasets. Active learning has been shown to be an effective way to alleviate some of the effort required in utilising large collections of unlabelled data for machine learning tasks without needing to fully label them. The representation mechanism used to represent text documents when performing active learning, however, has a significant influence on how effective the process will be. While simple vector representations such as bag of words have been shown to be an effective way to represent documents during active learning, the emergence of representation mechanisms based on the word embeddings prevalent in neural network research (e.g. word2vec and transformer-based models like BERT) offers a promising, and as yet not fully explored, alternative. This paper describes a large-scale evaluation of the effectiveness of different text representation mechanisms for active learning across 8 datasets from varied domains. This evaluation shows that using representations based on modern word embeddings, especially BERT, which have not yet been widely used in active learning, achieves a significant improvement over more commonly used vector-based methods like bag of words.
